- Log file analysis reveals exactly how search engines crawl your site.

- Use it to identify crawl waste, missed pages, and technical errors.

- Regular analysis improves crawl efficiency and indexing speed.

- Combine log data with other SEO tools for deeper insights.

Log file analysis for SEO might sound technical, but it is one of the most revealing ways to understand how search engines interact with your website.

Every time a search engine bot visits your site, it leaves a trail in your server logs. By reading and analyzing those logs, you can see exactly which pages get crawled, how often, and whether there are any issues preventing your most important content from being discovered.

Crawl budget is not unlimited.

According to Google, a site’s crawl budget depends on factors like crawl demand and crawl capacity, which means wasted crawls on irrelevant or duplicate pages can slow down indexing for your valuable content.

A Botify study found that, on average, 51% of a site’s crawls go to non-SEO-relevant pages, leaving huge potential for improvement.

When you understand your log file data, you can take targeted action to optimize crawl efficiency.

That means guiding Googlebot to the right pages, reducing wasted resources, and improving your chances of ranking.

This guide walks you through what log file analysis is, why it matters for SEO, and how to do it effectively, even if you’re not a developer.

What Is Log File Analysis in SEO?

A log file is a record kept by your web server that logs every single request made to your site. Each entry contains details like the time of the request, the IP address, the URL accessed, the HTTP status code, and the user agent making the request.

Think of it as a diary your server keeps about every visitor, whether it’s a human user or a search engine bot.
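To make those fields concrete, here is a minimal Python sketch that splits one log line into its parts. It assumes your server writes the widely used Apache/NGINX "combined" log format; if your server or CDN uses a custom format, the hypothetical `COMBINED` pattern below will need adjusting.

```python
import re
from datetime import datetime

# Assumption: Apache/NGINX "combined" format:
# IP ident user [time] "METHOD URL PROTOCOL" status bytes "referrer" "user agent"
COMBINED = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<url>\S+) \S+" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

def parse_line(line):
    """Return a dict of the fields SEO analysis needs, or None if the line doesn't match."""
    match = COMBINED.match(line)
    if match is None:
        return None
    entry = match.groupdict()
    entry["status"] = int(entry["status"])
    # Combined-format timestamps look like 10/Oct/2024:13:55:36 +0000
    entry["time"] = datetime.strptime(entry["time"], "%d/%b/%Y:%H:%M:%S %z")
    return entry

sample = ('66.249.66.1 - - [10/Oct/2024:13:55:36 +0000] '
          '"GET /blog/log-files HTTP/1.1" 200 5123 "-" '
          '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"')
entry = parse_line(sample)
print(entry["ip"], entry["url"], entry["status"])
```

Lines that don't match (malformed requests, other formats) return `None`, so you can count and inspect them instead of silently dropping data.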

Log file analysis is the process of reviewing and interpreting that raw server data to understand how search engines crawl your website.

By filtering entries for search engine bots like Googlebot or Bingbot, you can see exactly which pages they visit, how often they come back, and whether they encounter any errors.
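A first-pass bot filter can be as simple as matching known crawler names in the user-agent string. This sketch assumes each log entry has already been split into a dict with `agent` and `url` keys; the `BOT_TOKENS` list and the function names are illustrative, and because user agents are easy to fake, matches still need to be verified.

```python
# Illustrative crawler tokens; extend with any bots you care about.
BOT_TOKENS = ("Googlebot", "bingbot", "YandexBot", "DuckDuckBot")

def classify_agent(user_agent):
    """Return the first known crawler token found in the user agent, or None."""
    ua = user_agent.lower()
    for token in BOT_TOKENS:
        if token.lower() in ua:
            return token
    return None

def bot_hits(entries):
    """Keep (crawler, url) pairs for entries whose user agent names a known crawler.

    User agents are trivially spoofed, so treat the result as unverified.
    """
    hits = []
    for entry in entries:
        crawler = classify_agent(entry["agent"])
        if crawler is not None:
            hits.append((crawler, entry["url"]))
    return hits
```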

In SEO, this matters because what gets crawled can influence what gets indexed. If search engines spend too much time on low-value URLs or encounter repeated errors, your important pages might be delayed in appearing in search results.

Log file analysis lets you catch those issues early and take action.

In simple terms, it’s like getting a behind-the-scenes look at your site’s relationship with search engines. Instead of guessing what Googlebot sees, you can know for sure.

Why Log File Analysis Matters for SEO

Search engines work on a crawl-and-index system. They discover pages through links, sitemaps, and other signals, then decide which pages to crawl and how often.

If your site is large or has complex architecture, some pages might never get visited by search engine bots, meaning they’ll never have a chance to rank.

Log file analysis gives you a clear picture of how your crawl budget is being spent. Crawl budget is the number of URLs search engines will crawl on your site within a certain time frame.

Google notes that while most small sites don’t need to worry about it, larger or rapidly changing sites can benefit from managing crawl efficiency.

Wasted crawls are common. A Botify report found that only 49% of a site’s crawled URLs tend to be SEO-relevant pages, while the rest are often non-canonical, filtered, or duplicate content.

That means search engines could be spending half their time on pages that don’t bring you any organic traffic.
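One rough way to estimate this kind of waste on your own site is to measure how many bot requests land on URLs with query strings. This is a heuristic assumption, not Botify's methodology: filters, sort orders, and session IDs often live in query parameters, but some sites serve canonical content there too, so adapt the test to your URL patterns.

```python
from urllib.parse import urlsplit

def parameterized_share(crawled_urls):
    """Percentage of bot requests whose URL carries a query string.

    Heuristic assumption: query-string URLs (filters, sorts, session IDs)
    are often non-canonical variants that soak up crawl budget.
    """
    if not crawled_urls:
        return 0.0
    with_query = sum(1 for url in crawled_urls if urlsplit(url).query)
    return 100.0 * with_query / len(crawled_urls)

hits = ["/shoes", "/shoes?color=red", "/shoes?color=red&sort=price", "/about"]
print(f"{parameterized_share(hits):.0f}% of bot requests hit parameterized URLs")
```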

By running regular log file analyses, you can:

- Identify high-value pages that aren’t getting crawled enough

- Detect crawl waste on irrelevant or low-priority URLs

- Spot server errors or redirect chains that waste crawl budget

- Understand seasonal or campaign-related crawl patterns
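For the first item, a simple comparison between your XML sitemap and your logs shows which pages bots rarely or never request. This is a sketch, assuming you have already extracted the sitemap's URLs and the request paths from verified bot hits; `under_crawled`, `to_path`, and the `min_hits` threshold are hypothetical names and values.

```python
from collections import Counter
from urllib.parse import urlsplit

def to_path(url):
    """Reduce an absolute sitemap URL to the path (plus query) that access logs record."""
    parts = urlsplit(url)
    path = parts.path or "/"
    return f"{path}?{parts.query}" if parts.query else path

def under_crawled(sitemap_urls, crawled_paths, min_hits=1):
    """Return sitemap paths requested fewer than min_hits times, least-crawled first."""
    counts = Counter(crawled_paths)
    paths = [to_path(url) for url in sitemap_urls]
    return sorted((p for p in paths if counts[p] < min_hits), key=lambda p: counts[p])
```

With the default `min_hits=1` you get pages that were never crawled in the log window; raising it surfaces important pages that are crawled, but rarely.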

In short, it’s like giving your SEO strategy an X-ray. You can see exactly what’s working, what’s being ignored, and what’s slowing you down, and then fix it before it affects rankings.

Key SEO Insights You Can Get from Log File Analysis

Log file analysis is more than just checking whether Googlebot visits your site. When you dig into the data, you uncover patterns and opportunities that aren’t visible through tools like Google Search Console alone.

Here are some of the most valuable insights you can extract:

- Which pages get crawled most often: Log data shows you exactly which URLs search engines prioritize. If low-value pages dominate the list, it’s a signal that you need to adjust your internal linking, sitemaps, or robots.txt rules.

- Crawl frequency by different bots: You can see how often Googlebot, Bingbot, and other crawlers visit your site. This helps you understand how search engines value your content and whether your site’s updates are being picked up quickly.

- HTTP status codes and errors: Logs capture every server response code. If you notice repeated 404 errors or 5xx server issues, it means bots are wasting requests on broken or inaccessible pages.

- Crawl depth: You can find out how deep into your site’s structure bots are going. If important pages are buried several clicks away from the homepage, they might be crawled less frequently.

- Duplicate content detection: When multiple URLs with similar content get crawled, it can dilute crawl efficiency. Logs help you identify these patterns so you can consolidate pages or use canonical tags.

- Bot traps and infinite loops: Some site structures accidentally create endless URL variations, like calendar pages or faceted navigation filters. Logs can reveal if bots are stuck crawling these areas.
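Duplicate URLs and bot traps often show up as one path requested under many different query strings. A minimal sketch, assuming you have a list of URLs requested by verified bots; the `query_variants` helper and its default threshold of 50 are illustrative choices, not an industry standard.

```python
from collections import defaultdict
from urllib.parse import urlsplit

def query_variants(crawled_urls, threshold=50):
    """Flag paths that bots requested with at least `threshold` distinct query strings.

    Many variants of one path (faceted filters, calendar dates, session IDs)
    suggest duplicate content or a bot trap worth investigating.
    """
    variants = defaultdict(set)
    for url in crawled_urls:
        parts = urlsplit(url)
        variants[parts.path].add(parts.query)
    flagged = [(path, len(qs)) for path, qs in variants.items() if len(qs) >= threshold]
    return sorted(flagged, key=lambda item: item[1], reverse=True)

urls = [f"/calendar?date=2024-01-{day:02d}" for day in range(1, 31)] + ["/about", "/about"]
print(query_variants(urls, threshold=10))
```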

When you combine these insights with other analytics and SEO data, you get a much clearer roadmap for improving crawl efficiency and boosting rankings.

How to Perform a Log File Analysis for SEO

Log file analysis might sound intimidating if you’ve never worked with server data, but the process is straightforward once you know the steps. Here’s how to approach it from start to finish.

1. Access Your Log Files

Log files are stored on your web server. If you’re using Apache or NGINX, access logs are usually located in a default log directory such as /var/log/nginx/, /var/log/apache2/, or /usr/local/apache/logs/.

If you don’t have direct server access, your hosting provider, CDN (like Cloudflare), or development team can export the logs for you.

Keep in mind that log files can get large quickly, so you might only receive a certain date range unless you request otherwise.
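Because logs are large and older files are usually rotated and gzip-compressed (for example, access.log.2.gz), it helps to stream them line by line rather than load them into memory. This Python sketch assumes compressed files end in .gz; `iter_log_lines` is a hypothetical helper.

```python
import gzip
from pathlib import Path

def iter_log_lines(paths):
    """Yield lines one at a time from plain or gzip-compressed log files.

    Streaming keeps memory use flat even for multi-gigabyte logs.
    Files ending in .gz are opened with gzip; everything else as plain text.
    """
    for path in map(Path, paths):
        opener = gzip.open if path.suffix == ".gz" else open
        with opener(path, "rt", encoding="utf-8", errors="replace") as handle:
            for line in handle:
                yield line.rstrip("\n")
```

A typical call might be `iter_log_lines(sorted(Path("/var/log/nginx").glob("access.log*")))`, where the directory is only an example; `errors="replace"` keeps one bad byte from aborting a long run.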

2. Export and Prepare the Data

Log files typically come in .log or .txt format, but for analysis, you might want to convert them into .csv so you can work with them in spreadsheets or SEO tools. Keep raw backups before making any changes.
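If you parse the logs yourself, Python's standard `csv` module can produce that .csv file. This sketch assumes each line has already been split into a dict of fields; the column names in `FIELDS` are illustrative and should match whatever your parser produces.

```python
import csv

# Columns to export; these key names are assumptions about how each line was parsed.
FIELDS = ["time", "ip", "method", "url", "status", "agent"]

def write_csv(entries, out_path):
    """Write parsed log entries (dicts) to a CSV file for spreadsheets or SEO tools.

    Keys not listed in FIELDS are ignored; missing keys become empty cells.
    """
    with open(out_path, "w", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=FIELDS, extrasaction="ignore")
        writer.writeheader()
        for entry in entries:
            writer.writerow(entry)
```

Write the CSV to a new file so the raw logs stay untouched as your backup.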

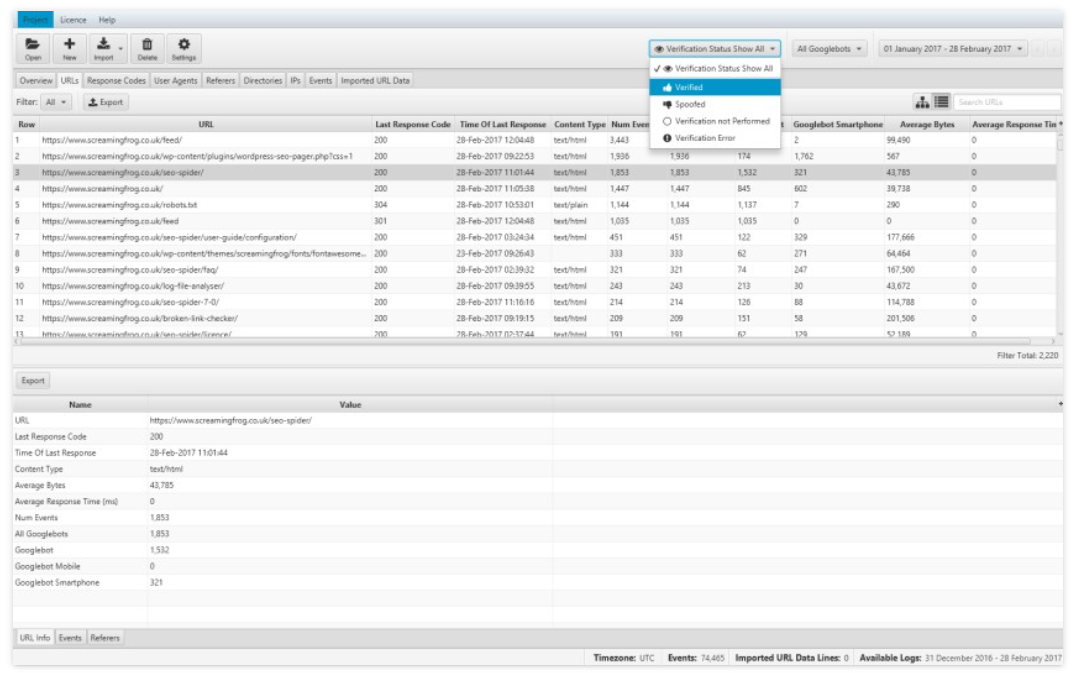

If you have a large site, manual analysis will be time-consuming. Tools like Screaming Frog Log File Analyzer, Botify, or Splunk can help parse the data and filter it for SEO purposes.

Screaming Frog Log File Analyzer -

Identify Search Engine Bot Activity

Filter your logs by user agent to isolate visits from search engine bots like Googlebot, Bingbot, and others.

Always verify that the bots are genuine, either by checking their IP addresses against the official ranges search engines publish or with a reverse DNS lookup confirmed by a forward lookup. Fake bots are common and can distort your data.
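Google documents a DNS-based check for its crawlers: reverse-resolve the requesting IP, confirm the hostname ends in googlebot.com, google.com, or googleusercontent.com, then forward-resolve that hostname and confirm it maps back to the same IP. The sketch below implements that check with Python's standard `socket` module. The function name is hypothetical, it handles IPv4 only (`gethostbyname_ex` does not return IPv6 addresses), and the lookups are passed in as parameters so the logic can be tested offline.

```python
import socket

# Hostname suffixes Google lists for its crawlers; check the current docs before relying on this.
GOOGLE_DOMAINS = (".googlebot.com", ".google.com", ".googleusercontent.com")

def is_verified_googlebot(ip, reverse_lookup=socket.gethostbyaddr,
                          forward_lookup=socket.gethostbyname_ex):
    """Reverse-resolve the IP, check the domain, then forward-resolve to confirm the match."""
    try:
        hostname = reverse_lookup(ip)[0]
    except OSError:  # covers socket.herror and socket.gaierror
        return False
    if not hostname.endswith(GOOGLE_DOMAINS):
        return False
    try:
        _, _, addresses = forward_lookup(hostname)
    except OSError:
        return False
    # A spoofed PTR record fails here: the forward lookup won't return the original IP.
    return ip in addresses
```

DNS lookups are slow, so on large logs it makes sense to verify each unique IP once and cache the result.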

4. Analyze Crawl Patterns

Look for trends in which pages are getting crawled most often, how frequently they are revisited, and whether bots are spending too much time on low-priority URLs.

Pay attention to crawl depth and seasonal traffic patterns.
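To turn these trends into numbers, a sketch like the following counts bot hits per day and per URL and estimates how often each URL is revisited. It assumes you already have (timestamp, URL) pairs from verified bot requests; `crawl_summary` is a hypothetical helper, and the average gap between visits is only one of several reasonable revisit measures.

```python
from collections import Counter, defaultdict
from datetime import datetime

def crawl_summary(hits):
    """Summarize bot hits given as (timestamp, url) pairs.

    Returns (hits per day, hits per URL, average days between visits per URL).
    URLs seen only once have no revisit interval.
    """
    per_day = Counter(ts.date() for ts, _ in hits)
    per_url = Counter(url for _, url in hits)
    visits = defaultdict(list)
    for ts, url in hits:
        visits[url].append(ts)
    revisit_days = {}
    for url, times in visits.items():
        if len(times) > 1:
            times.sort()
            span_days = (times[-1] - times[0]).total_seconds() / 86400
            revisit_days[url] = span_days / (len(times) - 1)
    return per_day, per_url, revisit_days

hits = [(datetime(2024, 10, 1, 8), "/"), (datetime(2024, 10, 3, 8), "/"),
        (datetime(2024, 10, 5, 8), "/"), (datetime(2024, 10, 1, 9), "/old-page")]
per_day, per_url, revisit_days = crawl_summary(hits)
print(per_url.most_common(5), revisit_days)
```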

5. Monitor Server Responses

Scan for high volumes of 4xx and 5xx status codes. Repeated errors waste crawl budget, and a sustained rate of 5xx responses can cause Googlebot to slow down how quickly it crawls your site. Redirect chains also slow crawling and should be cleaned up.
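A quick status-code breakdown shows whether errors make up a meaningful share of bot requests and which URLs fail most often. This sketch assumes (URL, status code) pairs from verified bot requests; `status_report` is a hypothetical helper. Access logs record each redirect hop as a separate request, so confirming a chain usually means following the 3xx URLs with a crawler.

```python
from collections import Counter

def status_report(entries, top=10):
    """Return (percentage of requests per status class, most frequent 5xx URLs).

    entries: iterable of (url, status_code) pairs from verified bot requests.
    """
    entries = list(entries)
    classes = Counter(f"{status // 100}xx" for _, status in entries)
    total = len(entries) or 1  # avoid dividing by zero on an empty log
    shares = {cls: round(100 * n / total, 1) for cls, n in sorted(classes.items())}
    server_errors = Counter(url for url, status in entries if status >= 500)
    return shares, server_errors.most_common(top)

sample = [("/a", 200), ("/b", 200), ("/c", 301), ("/d", 404), ("/e", 503), ("/e", 500)]
print(status_report(sample))
```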

6. Take SEO Action Based on Findings

Use what you discover to optimize your crawl budget. This might mean updating your XML sitemap to prioritize important pages, blocking low-value URLs in robots.txt, improving internal linking to deeper content, or fixing recurring errors.
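Before shipping new robots.txt rules, you can test them against the URLs bots actually requested to see how much crawl activity they would block. This sketch uses Python's built-in `urllib.robotparser`; it only does prefix matching and does not implement Google's `*` and `$` wildcard extensions, so wildcard rules need a different tester. The `blocked_by_rules` helper and the sample rules are hypothetical.

```python
from urllib.robotparser import RobotFileParser

def blocked_by_rules(robots_txt, crawled_urls, user_agent="Googlebot"):
    """Return the crawled URLs a proposed robots.txt would block, plus their percentage.

    Caveat: urllib.robotparser matches Disallow paths as plain prefixes only.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    blocked = [url for url in crawled_urls if not parser.can_fetch(user_agent, url)]
    share = 100.0 * len(blocked) / len(crawled_urls) if crawled_urls else 0.0
    return blocked, share

proposed = "User-agent: *\nDisallow: /search\nDisallow: /cart\n"
crawled = ["/search?q=shoes", "/cart", "/products/shoes", "/blog/post"]
print(blocked_by_rules(proposed, crawled))
```

If an important URL shows up in the blocked list, tighten the rule before deploying it.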

Running a log file analysis once can give you a good snapshot of your site’s crawl health. Running it regularly can turn it into one of your most powerful ongoing SEO checks.

Common Mistakes to Avoid

Avoiding these mistakes ensures your log file analysis actually delivers SEO value rather than just generating interesting but unused data.

- Not separating bot and human traffic: If you skip filtering by user agent or verifying bot IPs, you risk mixing real visitor data with automated requests. This can lead to false conclusions about crawl frequency or page priorities.

- Overlooking partial or incomplete logs: Sometimes you’ll receive logs that only cover a few days or exclude certain server clusters. Without a complete dataset, you might miss important crawling patterns. Always confirm the time range and source of the logs before starting analysis.

- Misinterpreting HTTP status codes: Not all 3xx redirects are bad, and not all 4xx errors are urgent. Misreading these codes can cause you to prioritize the wrong fixes. Learn the difference between temporary and permanent redirects, and between soft 404s and genuine not-found pages.

- Focusing only on errors: It’s tempting to zero in on 404s and 500s, but crawl waste on low-value URLs can be just as harmful. Make sure you also review how much of your crawl budget is being spent on irrelevant pages.

- Failing to take action after analysis: Collecting the data without acting on it defeats the purpose. Always connect your findings to clear next steps, such as updating sitemaps, improving internal linking, or adjusting robots.txt rules.

Recommended Tools for Log File Analysis

Choosing the right tool depends on your site size, budget, and technical comfort level. For many SEO teams, starting with Screaming Frog or Semrush is a quick win, while larger enterprises may benefit from Botify or custom setups like ELK Stack.

- Screaming Frog Log File Analyzer: A desktop application that imports raw log files and lets you filter by bots, status codes, and crawl frequency. It’s straightforward for beginners and works well for small to medium sites.

- Botify: An enterprise-level platform that combines log file analysis with advanced crawl and analytics data. It’s ideal for large websites that need in-depth reporting and integration with other SEO tools.

- Semrush Log File Analyzer: A web-based tool that processes logs quickly and provides clear visual reports on crawl activity, errors, and bot behavior. It’s useful for teams that want a mix of simplicity and actionable insights.

- Splunk: A powerful log management and analytics platform. While not built exclusively for SEO, it can handle massive datasets and is a good choice for technical teams comfortable with custom queries.

- ELK Stack (Elasticsearch, Logstash, Kibana): An open-source solution for storing, processing, and visualizing log data. It offers flexibility and scalability, but requires technical expertise to set up and maintain.

Final Thoughts

Log file analysis offers a deeper SEO advantage than most standard tools because it shows exactly how search engines interact with your site.

Instead of relying on assumptions, you get clear, factual data on which pages are being crawled, how often, and whether there are issues slowing things down.

The value comes when you turn that data into action, such as refining your XML sitemap, blocking low-value URLs, or strengthening internal linking.

These steps help make crawls more efficient and improve the speed at which your most important pages get indexed.

By making log file analysis a regular part of your SEO process, you can catch technical issues early, allocate your crawl budget more effectively, and safeguard your rankings.

Starting with a small test and gradually building it into your ongoing strategy allows you to consistently uncover new opportunities and maintain a strong search presence over time.
