- SPAs rely on JavaScript, which makes crawling and indexing difficult without proper optimization.

- Use rendering strategies like SSR, pre-rendering, or dynamic rendering to make content visible to search engines.

- Follow best practices: clean URLs, optimized metadata, internal linking, and Core Web Vitals improvements.

- Track SEO performance with GA4, Google Tag Manager, and Google Search Console.

Single Page Applications (SPAs) have become a popular choice for modern web development. Platforms like Netflix, Airbnb, and Gmail rely on SPAs because they deliver faster, app-like experiences that keep users engaged.

In fact, a study by Google found that 53% of mobile users abandon a site if it takes longer than three seconds to load, which makes the speed and interactivity of SPAs appealing for both businesses and users.

The challenge is that while SPAs offer seamless user experiences, they often create obstacles for search engine optimization.

Traditional websites load a full HTML document for every new page, but SPAs load content dynamically using JavaScript.

Search engines can struggle to crawl and index this content correctly, leading to missed ranking opportunities.

If you rely on organic traffic, ignoring SPA SEO can limit your visibility.

The good news is that optimizing SPAs for search engines is possible with the right strategies. This guide explains how SPAs work, why they pose unique SEO challenges, and proven methods to make them crawlable and indexable.

By the end, you will have a clear roadmap for optimizing SPAs for SEO.

Make Your SPA Search-Friendly

Nexa Growth ensures your dynamic site is crawlable, indexable, and ready to rank.

Contact Us

What Is a Single Page Application (SPA)?

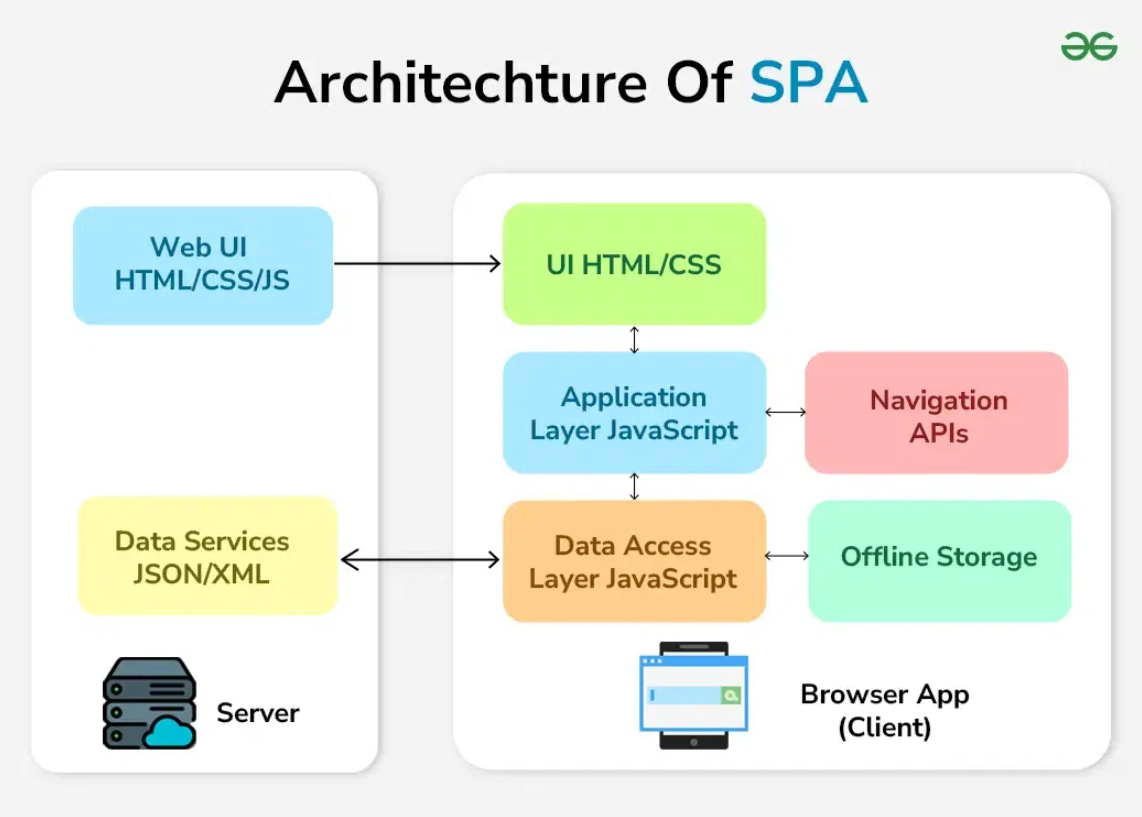

A Single Page Application is a type of web application that loads a single HTML page and dynamically updates its content as users interact with it.

Instead of reloading a new page every time someone clicks a link, an SPA fetches data in the background and injects it into the existing page using JavaScript.

This makes the experience smoother and faster, similar to using a mobile app.

Well-known examples include Gmail, Google Maps, Netflix, and Twitter. Each of these applications delivers content quickly and keeps users engaged without the interruption of full-page reloads.

According to a report by Statista, mobile apps and SPAs that mimic app-like behavior are projected to generate more than $613 billion in revenue by 2026.

This highlights why businesses are increasingly adopting SPAs as part of their digital strategy.

The main advantage of SPAs is speed. Since much of the code is loaded once at the start, interactions feel instant. This can reduce bounce rates and improve user satisfaction. However, the reliance on JavaScript comes with trade-offs. Unlike traditional multi-page websites, SPAs often present challenges for search engines that rely on HTML to crawl and index content.

In short, SPAs can provide outstanding user experiences, but they require careful planning to ensure they also perform well in search rankings.

Why SEO for SPAs Is Challenging

Single Page Applications offer speed and interactivity, but those same features can confuse search engines.

Unlike traditional websites that deliver full HTML documents for every page, SPAs rely heavily on JavaScript to render content.

This creates a few key SEO challenges that can impact how well your site appears in search results.

Crawling Issues

Search engines like Google rely on crawlers to discover and process content. With SPAs, most of the content is not in the initial HTML but loaded later through JavaScript.

Although Google has improved its ability to render JavaScript, it still does not always catch everything. Bing, Yandex, and other search engines are even less efficient at crawling JavaScript-heavy websites.

This can lead to important content being missed entirely.

Indexing Limitations

Even when crawlers manage to access JavaScript content, indexing delays are common. Google processes JavaScript in two waves: first, it crawls the raw HTML, and later, it attempts to render the JavaScript.

If your site depends on JavaScript for core content or metadata, that information may not get indexed quickly or at all. A study by Onely found that JavaScript issues prevent up to 32% of websites from being indexed properly.

URL and Routing Problems

Traditional websites use a distinct URL for each page, but many SPAs use hash fragments (for example, example.com/#/about) for client-side routing.

This can result in multiple pieces of content being served from a single URL, making it harder for search engines to differentiate between pages. Without clean, crawlable URLs, ranking opportunities are often lost.

Metadata and Tags

Search engines depend on title tags, meta descriptions, and structured data to understand content. In SPAs, these elements may be generated dynamically, and if they are not rendered on the server, crawlers may miss them.

Missing metadata can lower click-through rates and weaken relevance signals.

Performance Challenges

SPAs can also struggle with performance. Large JavaScript bundles and heavy client-side rendering can slow down load times.

Since page speed is a confirmed ranking factor, a poorly optimized SPA risks both lower rankings and higher bounce rates.

How Google Handles JavaScript and SPAs

Google has improved its ability to crawl and process JavaScript, but that does not mean Single Page Applications automatically perform well in search.

Understanding how Google handles JavaScript is key to optimizing your SPA for visibility.

Two-Wave Indexing

Google uses a two-step process for indexing JavaScript-heavy websites. In the first wave, Googlebot crawls the raw HTML and indexes whatever is immediately available. In the second wave, Google renders the JavaScript to process additional content and metadata.

This second step can take hours or even days, which creates delays in indexing. If your most important content depends on JavaScript, it may not show up in search results right away.

Limitations of Rendering

Even though Google can render JavaScript, it does not always render it accurately. Certain scripts, frameworks, or complex client-side features can break during the rendering process.

Other search engines, such as Bing and Yandex, are even less capable, which means your content might go completely undiscovered outside of Google.

Misconceptions About JavaScript SEO

A common belief is that since Google “can render JavaScript,” no extra optimization is needed. In practice, relying only on client-side rendering is risky.

In practice, Google can skip or misinterpret dynamically generated content, structured data, and meta tags, for example when a script throws an error or a required resource is blocked. Without proper optimization, this can result in lower rankings and less organic visibility.

Why It Matters for SPAs

If your SPA depends on JavaScript to load critical content, metadata, or navigation, search engines may miss it. That is why approaches like server-side rendering, pre-rendering, or dynamic rendering are necessary for making SPAs search-friendly.

These methods ensure that content is visible to crawlers from the start, instead of waiting for a delayed rendering process.

Core Methods to Optimize SPAs for SEO

Optimizing a Single Page Application starts with choosing the right rendering strategy. Since most SPAs rely heavily on JavaScript, you need a method that makes content accessible to both users and search engines.

The three most common approaches are server-side rendering, pre-rendering, and dynamic rendering.

Server-Side Rendering (SSR)

Server-side rendering means the server generates the full HTML for each page request before sending it to the browser.

This approach ensures that crawlers see the same content as users without waiting for JavaScript to load. Frameworks like Next.js for React or Nuxt.js for Vue make SSR easier to implement.

The main advantage of SSR is that search engines and users both receive complete content in the initial response, without waiting for JavaScript. This reduces indexing issues and can improve performance metrics such as Largest Contentful Paint (LCP).

The downside is that SSR can increase server load, and setting it up often requires significant development resources.
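To make the idea concrete, here is a minimal TypeScript sketch of the server-side step: for each request path, the server assembles a complete HTML document, including the title and meta description, before anything is sent to the browser. The `pages` map, the `renderPage` function, and the `/client.js` bundle path are hypothetical stand-ins for what a framework like Next.js or Nuxt.js would handle for you.

```typescript
// Hypothetical content for each route; a real app would load this from a
// database, CMS, or API while handling the request.
interface PageData {
  title: string;
  description: string;
  bodyHtml: string; // already-rendered markup for the page content
}

const pages = new Map<string, PageData>([
  ["/", { title: "Home", description: "Welcome to our store.", bodyHtml: "<h1>Home</h1>" }],
  ["/about", { title: "About Us", description: "Who we are and what we do.", bodyHtml: "<h1>About Us</h1>" }],
]);

// Escape text before placing it inside HTML content or attribute values.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Build the complete HTML document for one request path on the server, so
// content and metadata are present before any client-side JavaScript runs.
function renderPage(path: string): { status: number; html: string } {
  const page = pages.get(path);
  if (!page) {
    // Return a real 404 status instead of a "soft 404" page with status 200.
    return { status: 404, html: "<!doctype html><title>Not found</title><h1>Not found</h1>" };
  }
  const html = [
    "<!doctype html>",
    '<html lang="en">',
    "<head>",
    `  <title>${escapeHtml(page.title)}</title>`,
    `  <meta name="description" content="${escapeHtml(page.description)}">`,
    "</head>",
    "<body>",
    // The client bundle can take over (hydrate) this markup after it loads.
    `  <div id="app">${page.bodyHtml}</div>`,
    '  <script src="/client.js" defer></script>',
    "</body>",
    "</html>",
  ].join("\n");
  return { status: 200, html };
}
```

In a Node.js server, the request handler would call `renderPage` with the request path and write the returned HTML with the matching status code.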

Pre-Rendering (Static Rendering)

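Pre-rendering generates static HTML versions of pages ahead of time and serves them to crawlers and users. Static site generators like Gatsby build these pages at deploy time, while headless-browser services such as Prerender.io and Rendertron (no longer maintained) capture rendered HTML and are more often used for dynamic rendering.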

The benefit of pre-rendering is reliability. Since the HTML is already built, search engines have no problem crawling and indexing it. This is especially useful for websites with mostly static content. The drawback is scalability.

For very large SPAs with constantly changing data, pre-rendering may not be practical without frequent rebuilds.
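A pre-rendering build step can be sketched as a loop over a known list of routes that renders each one once and maps it to a file a static host can serve at the clean URL. In this sketch, `prerenderRoutes`, `outputPathFor`, and the `render` callback are hypothetical; the callback stands in for whatever actually produces the HTML (a static site generator's export or a headless browser), and writing the files to disk is left out so the example runs on its own.

```typescript
// Produces the finished HTML for one route (hypothetical placeholder).
type Renderer = (route: string) => string;

// Map "/blog/post-1" to "blog/post-1/index.html" so a static host can serve
// the page at its clean URL without a ".html" extension.
function outputPathFor(route: string): string {
  const trimmed = route.replace(/^\/+|\/+$/g, ""); // strip leading/trailing slashes
  return trimmed === "" ? "index.html" : `${trimmed}/index.html`;
}

// Render every known route ahead of time. The returned map (file path -> HTML)
// would then be written to the build output directory and deployed.
function prerenderRoutes(routes: string[], render: Renderer): Map<string, string> {
  const output = new Map<string, string>();
  for (const route of routes) {
    output.set(outputPathFor(route), render(route));
  }
  return output;
}
```

Because every content change means running this step again, the rebuild cost grows with the number of routes, which is the scalability drawback described above.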

Dynamic Rendering (Hybrid Approach)

Dynamic rendering is a hybrid method that serves different versions of a page depending on who is requesting it. When a user visits, the SPA loads normally with JavaScript. When a crawler visits, the server delivers a pre-rendered HTML version. Google accepts dynamic rendering as long as users and crawlers receive the same content, but its current documentation describes it as a workaround rather than a recommended long-term solution.

The strength of dynamic rendering is flexibility. You can preserve the fast, interactive SPA experience for users while ensuring that search engines get HTML they can crawl.

The downside is maintenance. Running and maintaining rendering services can add complexity to your infrastructure.
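At its core, dynamic rendering is a routing decision based on the request's User-Agent header. The sketch below assumes a short, illustrative list of crawler tokens (not an exhaustive or official list) and hypothetical `isCrawler` and `chooseRenderTarget` helpers; in practice this check usually lives in server or CDN middleware in front of a rendering service. User-agent strings can be spoofed, so treat this as a delivery choice, not a security control.

```typescript
// Illustrative, non-exhaustive crawler user-agent patterns (an assumption;
// production setups maintain longer lists).
const CRAWLER_PATTERNS: RegExp[] = [
  /googlebot/i,
  /bingbot/i,
  /yandex/i,
  /duckduckbot/i,
  /baiduspider/i,
  /twitterbot/i,
  /facebookexternalhit/i,
  /linkedinbot/i,
];

function isCrawler(userAgent: string | undefined): boolean {
  if (!userAgent) return false;
  return CRAWLER_PATTERNS.some((pattern) => pattern.test(userAgent));
}

type RenderTarget = "prerendered-html" | "client-app";

// Decide which version to serve. Both versions must carry the same content;
// only the delivery mechanism differs.
function chooseRenderTarget(userAgent: string | undefined): RenderTarget {
  return isCrawler(userAgent) ? "prerendered-html" : "client-app";
}
```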

Choosing the Right Approach

The best rendering strategy depends on your website. If your SPA is highly dynamic and user-driven, SSR may be the best choice. If you have mostly static pages, pre-rendering could be enough. Dynamic rendering can bridge the gap for sites that cannot adopt SSR or pre-rendering yet, but it is best treated as a temporary measure.

SPA SEO Best Practices

Choosing the right rendering method is only the first step. To maximize visibility, you also need to follow proven SEO best practices tailored for Single Page Applications.

These practices make it easier for search engines to crawl, index, and rank your content.

Use SEO-Friendly URLs and Routing

Every piece of content should live on its own unique, crawlable URL.

Avoid hash-based routing (for example, example.com/#/about) because Google generally ignores everything after the #, so different hash routes can look like the same page.

Instead, use the History API (history.pushState()) to create clean, descriptive URLs (for example, example.com/about). Clean URLs make each page independently crawlable and are easier for users to read and share.
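Here is a minimal sketch of History API routing in TypeScript. The route table, view names, and the `navigateTo`, `renderRoute`, and `hashUrlToCleanUrl` helpers are hypothetical. `HistoryApi` describes only the part of `window.history` being used, so the logic can also run outside a browser.

```typescript
// The part of the browser History API this sketch needs; window.history fits it.
interface HistoryApi {
  pushState(data: unknown, unused: string, url?: string | URL | null): void;
}

type RouteHandler = () => string;

// Hypothetical route table: every view has its own clean path.
const routeTable = new Map<string, RouteHandler>([
  ["/", () => "Home"],
  ["/about", () => "About"],
  ["/pricing", () => "Pricing"],
]);

// Render the view for a path, falling back to a not-found view.
function renderRoute(path: string): string {
  const handler = routeTable.get(path);
  return handler ? handler() : "Not found";
}

// Change the address bar to a clean URL without a full reload, then render.
function navigateTo(path: string, historyApi: HistoryApi): string {
  historyApi.pushState({ path }, "", path);
  return renderRoute(path);
}

// Back/forward buttons fire "popstate"; in the browser, re-render on it:
// window.addEventListener("popstate", () => renderRoute(window.location.pathname));

// Convert a legacy hash URL (https://example.com/#/about) to its clean form
// (https://example.com/about). Browsers never send the fragment to the
// server, so this redirect has to happen in client-side code.
function hashUrlToCleanUrl(href: string): string {
  const url = new URL(href);
  if (!url.hash.startsWith("#/")) return url.toString();
  return new URL(url.hash.slice(1), url.origin).toString();
}
```

When migrating away from hash routing, the client can call `hashUrlToCleanUrl(window.location.href)` on load and redirect with `location.replace()` if the result differs.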

Optimize Meta Tags and Structured Data

Metadata such as titles, descriptions, and Open Graph tags should be available in the server-rendered HTML or pre-rendered version of the page.

Relying on JavaScript to generate these tags is risky, since crawlers may not always process them. Structured data in JSON-LD format should also be embedded to help search engines understand your content and make your pages eligible for rich results.
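One way to guarantee these tags are in the initial HTML is to build them on the server, or at pre-render time, from per-route data. The sketch below assumes a hypothetical `RouteMeta` shape and `renderHeadTags` helper; the tags themselves (`description`, `canonical`, Open Graph `og:*`, and `application/ld+json`) are standard.

```typescript
interface RouteMeta {
  title: string;
  description: string;
  canonicalUrl: string;
  imageUrl?: string;
  jsonLd?: Record<string, unknown>; // schema.org data for this route
}

// Escape text for HTML content and double-quoted attribute values.
function escapeAttr(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Build the <head> tags for one route so crawlers find them in the HTML
// response instead of only after JavaScript runs.
function renderHeadTags(meta: RouteMeta): string {
  const tags = [
    `<title>${escapeAttr(meta.title)}</title>`,
    `<meta name="description" content="${escapeAttr(meta.description)}">`,
    `<link rel="canonical" href="${escapeAttr(meta.canonicalUrl)}">`,
    `<meta property="og:title" content="${escapeAttr(meta.title)}">`,
    `<meta property="og:description" content="${escapeAttr(meta.description)}">`,
    `<meta property="og:url" content="${escapeAttr(meta.canonicalUrl)}">`,
  ];
  if (meta.imageUrl) {
    tags.push(`<meta property="og:image" content="${escapeAttr(meta.imageUrl)}">`);
  }
  if (meta.jsonLd) {
    // Escape "<" so a value such as "</script>" cannot close the tag early.
    const json = JSON.stringify(meta.jsonLd).replace(/</g, "\\u003c");
    tags.push(`<script type="application/ld+json">${json}</script>`);
  }
  return tags.join("\n");
}
```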

Build Strong Internal Linking

Use standard HTML <a> tags with an href attribute for links instead of JavaScript onclick events. Crawlers follow href values, so this lets them discover all your important pages.

Keep navigation menus visible in the HTML source code and include breadcrumb links where relevant. Internal linking also spreads authority across your site, helping important pages rank better.
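A common pattern keeps every link a real `<a href>` in the markup and lets the SPA intercept only ordinary clicks. The sketch below assumes a hypothetical `shouldHandleClientSide` helper and a minimal `ClickInfo` shape (a browser `MouseEvent` provides these fields). Production routers check a few more cases, such as `target="_blank"` and `download` links.

```typescript
// The click details this sketch needs; a browser MouseEvent provides them.
interface ClickInfo {
  button: number;
  metaKey: boolean;
  ctrlKey: boolean;
  shiftKey: boolean;
  altKey: boolean;
  defaultPrevented: boolean;
}

// Links stay ordinary <a href="/pricing"> elements in the HTML, so crawlers can
// follow them. The SPA only takes over plain left-clicks on same-origin links;
// modified clicks (open in new tab, etc.) and external links go to the browser.
function shouldHandleClientSide(click: ClickInfo, href: string, currentOrigin: string): boolean {
  if (click.defaultPrevented || click.button !== 0) return false;
  if (click.metaKey || click.ctrlKey || click.shiftKey || click.altKey) return false;
  return new URL(href, currentOrigin).origin === currentOrigin;
}

// Browser wiring (sketch):
// document.addEventListener("click", (event) => {
//   const link = (event.target as Element).closest("a[href]");
//   const href = link?.getAttribute("href");
//   if (href && shouldHandleClientSide(event, href, window.location.origin)) {
//     event.preventDefault();
//     // update the URL with history.pushState() and render the new route
//   }
// });
//
// By contrast, <div onclick="goTo('/pricing')"> has no href, so crawlers
// have no URL to follow.
```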

Handle Lazy-Loaded Content Carefully

Lazy loading improves performance, but it can also hide content from crawlers if implemented incorrectly.

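Load critical content up front rather than lazily. For below-the-fold content, use the Intersection Observer API (or the native loading="lazy" attribute for images) instead of scroll events, since crawlers generally do not scroll, and provide fallback HTML where needed. This way, both users and crawlers can access your key information without delays.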
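As a rough sketch, Intersection Observer-based lazy loading with a fallback might look like this. It assumes images carry their real URL in a `data-src` attribute (a common convention, not a standard) and uses minimal hypothetical types (`LazyImage`, `ObserverCtor`) so it also runs outside a browser. If `IntersectionObserver` is missing, every image loads immediately instead of staying hidden.

```typescript
// Minimal image shape; an HTMLImageElement with a data-src attribute fits it.
interface LazyImage {
  src: string;
  dataset: { src?: string };
}

// Just enough of the IntersectionObserver shape for this sketch.
interface ObserverEntry { isIntersecting: boolean; target: unknown }
interface ObserverLike { observe(target: unknown): void; unobserve(target: unknown): void }
type ObserverCtor = new (
  callback: (entries: ObserverEntry[], observer: ObserverLike) => void,
  options?: { rootMargin?: string },
) => ObserverLike;

// Swap the real URL into src and clear the placeholder attribute.
function loadImage(img: LazyImage): void {
  if (img.dataset.src) {
    img.src = img.dataset.src;
    delete img.dataset.src;
  }
}

function lazyLoadImages(images: LazyImage[]): void {
  const Observer = (globalThis as unknown as { IntersectionObserver?: ObserverCtor }).IntersectionObserver;
  if (!Observer) {
    // Fallback: no observer support, so load everything now.
    images.forEach(loadImage);
    return;
  }
  const observer = new Observer(
    (entries, obs) => {
      for (const entry of entries) {
        if (entry.isIntersecting) {
          loadImage(entry.target as LazyImage);
          obs.unobserve(entry.target);
        }
      }
    },
    { rootMargin: "200px" }, // start loading shortly before an image scrolls into view
  );
  images.forEach((img) => observer.observe(img));
}
```

For images specifically, the native `loading="lazy"` attribute is often the simpler choice, with a `<noscript>` copy of the image as extra fallback HTML.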

Improve Page Speed and Core Web Vitals

SPAs often load large JavaScript bundles, which can slow performance. Split bundles into smaller chunks, defer non-essential scripts, and use caching strategies.

Serve images in modern formats like WebP or AVIF and compress videos. Faster load times improve Core Web Vitals, which Google uses as part of its page experience ranking signals.
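Route-level code splitting usually relies on dynamic `import()`, which bundlers such as webpack, Rollup, and Vite turn into separately loaded chunks. The sketch below shows a hypothetical `lazyOnce` wrapper that defers a route's loader until the route is first needed and reuses the same request afterward. The loaders in `routeLoaders` are stand-ins so the example runs without a bundler.

```typescript
type Loader<T> = () => Promise<T>;

// Defer a loader until first use, share one in-flight request between
// callers, and allow a retry if loading fails.
function lazyOnce<T>(loader: Loader<T>): Loader<T> {
  let pending: Promise<T> | undefined;
  return () => {
    if (!pending) {
      pending = loader().catch((error: unknown) => {
        pending = undefined; // forget the failed attempt so the next call retries
        throw error;
      });
    }
    return pending;
  };
}

// Hypothetical route table. In a bundled app each loader would typically be
// something like () => import("./pages/pricing.js").then((m) => m.default).
const routeLoaders: Record<string, Loader<string>> = {
  "/pricing": lazyOnce(async () => "Pricing view"),
  "/blog": lazyOnce(async () => "Blog view"),
};
```

Only the code for the current route is downloaded at startup; other chunks are fetched the first time a user (or crawler) visits those routes.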

Use Sitemaps and Robots.txt Effectively

An XML sitemap should include every important URL, even if routes are dynamically generated. This helps search engines discover your content faster.

Avoid blocking JavaScript or CSS files in robots.txt, since crawlers need these resources to render your pages correctly.
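Because SPA routes often exist only in the router configuration, it helps to generate the sitemap from the same route list at build time. Here is a minimal sketch, assuming a hypothetical `buildSitemap` helper and a route list you already have. The XML follows the sitemaps.org protocol, and example.com is a placeholder domain in the robots.txt string.

```typescript
interface SitemapEntry {
  path: string;          // clean route, e.g. "/blog/spa-seo"
  lastModified?: string; // W3C date such as "2025-01-15"
}

// Escape the five XML special characters.
function escapeXml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// List every important client-side route as an absolute URL.
function buildSitemap(siteOrigin: string, entries: SitemapEntry[]): string {
  const urls = entries.map((entry) => {
    const loc = new URL(entry.path, siteOrigin).toString();
    const lastmod = entry.lastModified
      ? `\n    <lastmod>${escapeXml(entry.lastModified)}</lastmod>`
      : "";
    return `  <url>\n    <loc>${escapeXml(loc)}</loc>${lastmod}\n  </url>`;
  });
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
    ...urls,
    "</urlset>",
  ].join("\n");
}

// robots.txt with no rules that block script or style files, plus the sitemap location.
const robotsTxt = [
  "User-agent: *",
  "Allow: /",
  "Sitemap: https://example.com/sitemap.xml",
].join("\n");
```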

Prioritize Accessibility and Mobile-First Design

SPAs must work seamlessly across devices. Use semantic HTML and ARIA roles to improve accessibility for screen readers.

Mobile optimization is critical, since Google uses mobile-first indexing: it primarily crawls and indexes the mobile version of your pages. A mobile-friendly SPA not only improves usability but also strengthens your search performance.

Tracking and Measuring SEO Performance for SPAs

Optimizing your SPA is only effective if you can measure the results. Tracking SEO performance in SPAs can be tricky because traditional analytics setups often miss interactions that do not trigger full-page reloads.

With the right configuration, you can still gather accurate insights into traffic, engagement, and conversions.

Why Tracking SPAs Is Different

On traditional websites, every new page load automatically triggers a pageview in analytics. In SPAs, users can move between different routes without refreshing the browser.

If analytics is not configured properly, these interactions will not register as separate pageviews. This can lead to incomplete or misleading data about user behavior.

Using Google Analytics 4 (GA4)

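Google Analytics 4 is better equipped for SPA tracking than Universal Analytics. Its enhanced measurement settings include an option, "Page changes based on browser history events," that records a page view whenever the URL changes without a reload, and its event-based model makes manual tracking straightforward.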

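To track SPA routes manually, send a page_view event with updated page_location and page_title values whenever the virtual URL changes, and turn off GA4's history-based page view option in enhanced measurement so views are not counted twice. This ensures that GA4 records user navigation across different sections of the SPA.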
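If you send page views manually, a small helper called from your router is enough. This sketch assumes gtag.js is already installed and passes the `gtag` function in as a parameter; `trackRouteChange`, `GtagEvent`, and the duplicate check are hypothetical additions, while `page_view`, `page_location`, and `page_title` are standard GA4 event and parameter names.

```typescript
// The "event" form of gtag.js's global gtag() function.
type GtagEvent = (command: "event", eventName: string, params: Record<string, unknown>) => void;

let lastTrackedUrl: string | undefined;

// Send one GA4 page_view per route change. Returns false when the same URL
// is reported again (for example, a re-render), to avoid double counting.
function trackRouteChange(gtagFn: GtagEvent, pageUrl: string, pageTitle: string): boolean {
  if (pageUrl === lastTrackedUrl) return false;
  lastTrackedUrl = pageUrl;
  gtagFn("event", "page_view", {
    page_location: pageUrl,
    page_title: pageTitle,
  });
  return true;
}
```

In the browser, the router would call it after each navigation once the new title is set, for example `trackRouteChange(gtag, location.href, document.title)`.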

Setting Up Google Tag Manager

Google Tag Manager (GTM) simplifies SPA tracking by listening for route changes and firing tags accordingly.

You can use GTM's History Change trigger, which fires when the URL changes without a full reload, to send that data to GA4. This setup helps capture accurate pageview and engagement metrics, even when users never refresh the browser.
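GTM's built-in History Change trigger covers most SPAs without any code. If you prefer to signal route changes explicitly, a `dataLayer` push paired with a Custom Event trigger is a common alternative. In this sketch, `pushVirtualPageView`, the event name `virtual_page_view`, and its fields are hypothetical and must match whatever your GTM trigger and tags expect.

```typescript
// GTM reads events from the global dataLayer array.
type DataLayer = Array<Record<string, unknown>>;

// Announce a client-side route change to GTM. A Custom Event trigger set to
// "virtual_page_view" can then fire a GA4 tag using these fields.
function pushVirtualPageView(dataLayer: DataLayer, pagePath: string, pageTitle: string): void {
  dataLayer.push({
    event: "virtual_page_view",
    page_path: pagePath,
    page_title: pageTitle,
  });
}

// In the browser (sketch):
// window.dataLayer = window.dataLayer || [];
// pushVirtualPageView(window.dataLayer, location.pathname, document.title);
```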

Tracking Conversions and Engagement

In addition to pageviews, you should track events such as button clicks, form submissions, and scroll depth. These interactions show how users engage with your content and can highlight areas that need improvement.

By marking these events as key events (formerly called conversions) in GA4, you gain a clearer picture of how your SPA contributes to business outcomes.

Measuring SEO Success

Use Google Search Console alongside analytics to evaluate your SPA’s visibility. Monitor impressions, clicks, and indexing status for each route.

If some routes receive traffic but do not appear in search results, this may indicate rendering or crawlability issues. Combining Search Console data with GA4 insights gives you a full view of your SPA’s SEO performance.
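To check routes in bulk, the Search Console URL Inspection API returns Google's index status for individual URLs. The sketch below only builds the request; `buildInspectionRequest` is a hypothetical helper, and obtaining an OAuth access token for an account with access to the property is assumed. `siteUrl` must match the property as registered (for example `https://example.com/` or `sc-domain:example.com`).

```typescript
const INSPECTION_ENDPOINT = "https://searchconsole.googleapis.com/v1/urlInspection/index:inspect";

interface InspectionRequest {
  url: string;
  init: { method: "POST"; headers: Record<string, string>; body: string };
}

// Build a fetch() request asking Google how it sees one route.
function buildInspectionRequest(siteUrl: string, pageUrl: string, accessToken: string): InspectionRequest {
  return {
    url: INSPECTION_ENDPOINT,
    init: {
      method: "POST",
      headers: {
        Authorization: `Bearer ${accessToken}`,
        "Content-Type": "application/json",
      },
      body: JSON.stringify({ inspectionUrl: pageUrl, siteUrl }),
    },
  };
}

// Usage (needs network access and a valid token):
// const { url, init } = buildInspectionRequest("https://example.com/", "https://example.com/pricing", token);
// const result = await (await fetch(url, init)).json();
// console.log(result.inspectionResult?.indexStatusResult?.coverageState);
```

Running this for each route that receives traffic makes it easier to spot pages Google has crawled but not indexed.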

Google’s Official Guidance on SPA SEO

Google has published several recommendations for handling JavaScript and Single Page Applications.

While the search engine has improved its ability to process JavaScript, it still encourages developers to follow best practices that ensure content is discoverable and indexable.

Google’s Position on JavaScript

Google has confirmed that it can crawl and render JavaScript, but the process is not always perfect. Rendering requires more resources than processing plain HTML, which means delays and potential errors can occur.

Because of this, Google advises developers not to rely solely on client-side rendering for critical content or metadata.

Recommended Rendering Approaches

In its documentation, Google recommends server-side rendering, static rendering (pre-rendering), or hydration for JavaScript sites, and describes dynamic rendering as a workaround rather than a recommended solution.

Dynamic rendering is meant as a stopgap when a site cannot yet move to SSR or static rendering. Google stresses that the content served to crawlers and users must remain the same to avoid cloaking.

Common Pitfalls to Avoid

Google warns against using only hash-based URLs, since crawlers may not treat them as unique pages. It also advises against blocking JavaScript or CSS files in robots.txt, as these resources are necessary for rendering.

Another common issue is delaying the loading of key content until after user interaction, which can prevent it from being indexed.

Best Practices According to Google

Google encourages developers to test SPAs using tools like the URL Inspection tool in Search Console and the Rich Results Test.

These tools show how Google renders a page and highlight any missing content or errors. Regular testing helps ensure that updates to your SPA do not create new SEO issues.

Checklist: SPA SEO Optimization Steps

Optimizing a Single Page Application involves several moving parts. Use this checklist to ensure your SPA is both user-friendly and search-friendly.

Rendering and Content

- Choose an appropriate rendering strategy (SSR, pre-rendering, or dynamic rendering).

- Make sure critical content and metadata are available in the initial HTML.

- Test rendering with Google’s URL Inspection tool.

URLs and Routing

- Use clean, descriptive URLs instead of hash fragments.

- Ensure each route has a unique and crawlable URL.

- Update sitemaps to include all important routes.

Metadata and Structured Data

- Give every route a unique title tag and meta description in the initial HTML.

- Include Open Graph and Twitter Card tags for better social sharing.

- Implement structured data in JSON-LD format.

Internal Linking and Navigation

- Use HTML <a> tags with href attributes for all internal links.

- Keep navigation visible in the source code.

- Add breadcrumb navigation where appropriate.

Performance and Core Web Vitals

- Optimize JavaScript bundles with code splitting and caching.

- Compress images and use modern formats like WebP.

- Test performance regularly with PageSpeed Insights or Lighthouse.

Lazy Loading and Accessibility

- Ensure lazy-loaded content is still crawlable with fallback HTML.

- Use semantic HTML and ARIA roles for accessibility.

- Test the SPA for mobile-first indexing compatibility.

Tracking and Monitoring

- Configure GA4 to record a page_view for each route change (virtual pageviews).

- Use Google Tag Manager to capture SPA route changes.

- Monitor indexing and search performance in Google Search Console.

Conclusion and Key Takeaways

Single Page Applications provide smooth, app-like experiences that users love, but they also present unique challenges for search engines.

Heavy reliance on JavaScript, dynamic routing, and delayed content loading can all make it harder for crawlers to properly index your site.

The good news is that with the right approach, SPAs can perform well in search results without sacrificing user experience.

The key is to select a rendering strategy that works for your site, whether that is server-side rendering, pre-rendering, or dynamic rendering, and then pair it with best practices.

Clean URLs, optimized metadata, strong internal linking, and fast performance all help improve visibility. Accessibility, structured data, and mobile-first design add another layer of SEO strength.

Finally, tracking your SPA with GA4, Google Tag Manager, and Search Console ensures that you can measure results and adjust quickly when issues arise.

By combining rendering strategies, technical best practices, and ongoing monitoring, you can create a Single Page Application that satisfies both users and search engines.
