- A robots.txt file is a text file that provides instructions to search engine bots about which pages they can or cannot access.

- It helps optimize the crawl budget and prevents non-public or duplicate pages from being indexed.

- Common mistakes to avoid include accidentally blocking the entire website or essential CSS and JavaScript files.

- Robots.txt should not be used as a security measure to protect sensitive data.

- You can use tools like Google Search Console to test and fix errors in your robots.txt file.

Search engines like Google crawl billions of web pages daily, deciding which ones to index and rank.

But not every page on your website needs to be indexed.

Some pages contain sensitive data, duplicate content, or backend files that don’t belong in search results.

That’s where the robots.txt file comes in.

Robots.txt is a small but powerful text file that tells search engines which pages they can and cannot crawl.

Used correctly, it can improve your website’s SEO, enhance crawl efficiency, and prevent unnecessary exposure of private content.

But misused, it can accidentally block essential pages, harming your rankings—a mistake even major brands have made.

According to a 2023 study by Ahrefs, more than 17% of websites have crawlability issues due to misconfigured robots.txt files.

Another report from Semrush found that nearly 25% of websites block important JavaScript or CSS files, unknowingly damaging their search visibility.

These numbers highlight how a poorly configured robots.txt file can hurt SEO instead of helping it.

In this guide, we’ll break down everything you need to know about robots.txt, from how it works and why it matters to best practices, real-world examples, and common mistakes to avoid.

By the end, you’ll clearly understand how to use robots.txt to optimize your website for search engines while maintaining control over what gets crawled.

Let’s get started.

What Is a Robots.txt File?

A robots.txt file is a simple text file placed in a website’s root directory to communicate with search engine crawlers.

It acts as a set of instructions, telling search engines which parts of a site they can or cannot access.

At its core, robots.txt uses the Robots Exclusion Protocol (REP), a standard that governs how search engines interact with web pages.

This protocol helps manage search engine bots’ access to specific URLs, preventing unnecessary crawling of certain areas—like admin pages, login sections, or private content.

For example, if an e-commerce website has thousands of product pages, the site owner might want to block out-of-stock product pages from being crawled.

Similarly, a blog with an extensive archive may want to prevent duplicate content issues by blocking pagination pages from being indexed.

How Search Engines Use Robots.txt

When a search engine bot (like Googlebot) visits a website, it first checks the robots.txt file.

If the file contains specific directives, the bot follows them before crawling the site’s content.

Here’s a simple breakdown of the process:

- The search engine bot arrives at yourdomain.com.

- It checks for yourdomain.com/robots.txt.

- It reads the instructions (if any) and determines which pages it can or cannot crawl.

- The bot proceeds to crawl the allowed pages while skipping the restricted ones.

A Simple Robots.txt Example

Here’s what a basic robots.txt file might look like:

User-agent: *

Disallow: /private/

Disallow: /wp-admin/

Allow: /public-content/

Sitemap: https://yourdomain.com/sitemap.xml

Explanation

- User-agent: * → Applies the rule to all bots.

- Disallow: /private/ → Blocks access to the /private/ directory.

- Disallow: /wp-admin/ → Prevents bots from crawling the WordPress admin area.

- Allow: /public-content/ → Ensures that this directory is crawlable.

- Sitemap: → Provides the sitemap location to help bots discover essential pages.
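One way to see how a crawler might read these rules is Python’s standard-library `urllib.robotparser`. The sketch below is illustrative only: the URLs under yourdomain.com are placeholders, and this parser uses simple prefix matching, so it may not agree with Googlebot in every edge case.

```python
from urllib.robotparser import RobotFileParser

# The example file from this section, written without blank lines between
# directives (Python's parser treats a blank line as the end of a group).
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Disallow: /wp-admin/
Allow: /public-content/
Sitemap: https://yourdomain.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def can_crawl(url: str, user_agent: str = "*") -> bool:
    """Return True if the parsed rules let this user agent fetch the URL."""
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in (
        "https://yourdomain.com/private/report.html",
        "https://yourdomain.com/wp-admin/",
        "https://yourdomain.com/public-content/guide.html",
    ):
        print(url, "->", "allowed" if can_crawl(url) else "blocked")
    # site_maps() is available in Python 3.8 and later.
    print("Sitemaps:", parser.site_maps())
```

URLs that match no rule at all, such as a hypothetical /about/ page, are treated as crawlable.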

When Should You Use a Robots.txt File?

Not every website needs a robots.txt file, but it’s helpful in several cases:

- Controlling crawl budget: If your site has thousands of pages, limiting unnecessary crawling can help search engines focus on important content.

- Preventing duplicate content issues: You can block search engines from crawling duplicate pages, preventing indexing conflicts.

- Protecting sensitive information: While robots.txt does not secure private data, it can discourage search engines from indexing login pages, admin panels, or staging sites.

- Optimizing SEO efforts: Proper use of robots.txt ensures that search engines focus on high-value pages, improving your rankings.

Robots.txt is a foundational tool in technical SEO, but it’s not foolproof. The next section explores why robots.txt is crucial for SEO and what happens when it’s misconfigured.

Why Robots.txt Matters for SEO

Robots.txt plays a critical role in technical SEO by helping search engines crawl and index websites more efficiently.

It improves site performance, prevents indexing issues, and ensures that only the most essential pages appear in search results.

However, a misconfigured robots.txt file can lead to disastrous SEO mistakes, such as blocking essential content from search engines.

Let’s explain why robots.txt is vital for SEO and how it impacts your site’s rankings.

Optimizing Your Crawl Budget

Search engines allocate a crawl budget for each website—the number of pages Googlebot (or any search bot) crawls in a given timeframe. For large websites, managing this budget is crucial.

Using robots.txt to prevent unnecessary pages from being crawled allows search engines to focus on the most valuable pages.

This can lead to faster indexing and better visibility in search results.

Example:

If an e-commerce store has thousands of product pages but also includes filter-based URLs like ?color=red, ?size=large, etc., robots.txt can block those dynamic URLs to prevent excessive crawling of similar content.

User-agent: *

Disallow: /*?color=

Disallow: /*?size=

Preventing Indexing of Non-Public or Duplicate Pages

Some pages on your website should not appear in search results, like admin panels, checkout pages, login pages, and duplicate content.

Robots.txt helps keep crawlers away from these pages, reducing thin content and duplication issues that can harm your rankings.

Example:

A membership-based site may want to block login and account pages from appearing in search engines.

User-agent: *

Disallow: /login/

Disallow: /account/

Preventing JavaScript and CSS Blocking

Many site owners mistakenly block JavaScript and CSS files in robots.txt, thinking they are unnecessary for SEO.

However, Google needs access to these files to render your site and evaluate its usability properly.

Google’s John Mueller has repeatedly emphasized that blocking CSS or JavaScript can negatively impact rankings because Googlebot won’t be able to render your page correctly.

Example of a bad robots.txt file (which you should avoid):

User-agent: *

Disallow: /wp-content/themes/

Disallow: /wp-includes/

Instead, allow Googlebot access to CSS and JS files for better indexing and ranking.

Protecting Sensitive Data (But Not as a Security Measure)

One common misconception is that robots.txt can be used to “hide” private content.

While robots.txt prevents search engines from crawling a page, it does not prevent people from accessing it directly if they know the URL.

Example of a bad practice:

Some site owners try to block private customer data pages using robots.txt.

User-agent: *

Disallow: /customer-data/

This file is still publicly accessible, meaning anyone can manually visit yourdomain.com/robots.txt and see which directories you’re trying to hide.

If you need to secure private content, use password protection or proper server authentication, not robots.txt.
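To make the exposure concrete, here is a small sketch of how little effort it takes to read the "hidden" paths out of a robots.txt file. The function name `disallowed_paths` and the sample paths are hypothetical; the point is only that every Disallow line is plain text any visitor can read.

```python
def disallowed_paths(robots_txt: str) -> list:
    """List every path named in a Disallow rule, as any visitor could read it."""
    paths = []
    for raw_line in robots_txt.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop trailing comments
        field, _, value = line.partition(":")
        if field.strip().lower() == "disallow" and value.strip():
            paths.append(value.strip())
    return paths


SAMPLE = """\
User-agent: *
Disallow: /customer-data/
Disallow: /admin/  # internal tools
"""

if __name__ == "__main__":
    print(disallowed_paths(SAMPLE))
```

Anything listed this way should be protected by authentication on the server, because the list itself works as a map of sensitive areas.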

Improving Website Speed and Performance

By limiting unnecessary crawling, robots.txt reduces server load and helps improve website performance.

If your site gets high traffic from search bots, unnecessary crawling of non-essential pages can slow down the site for real visitors.

Example:

If you have an old blog archive with thousands of less valuable pages, you might want to prevent search engines from crawling them so they can prioritize newer, high-quality content.

User-agent: *

Disallow: /old-archives/

When Robots.txt Can Hurt Your SEO

While robots.txt is helpful in controlling search bot behavior, a small mistake can cripple your SEO efforts.

Accidentally Blocking Your Entire Website

One of the most common SEO disasters is when site owners mistakenly block their entire website from crawling.

Example of a dangerous robots.txt file:

User-agent: *

Disallow: /

This tells search engines not to crawl any pages, effectively removing your entire site from search results.

Blocking Important Pages That Should Be Indexed

Some businesses mistakenly block category pages, service pages, or other key landing pages, leading to a massive drop in rankings.

Example of an SEO-killing mistake:

User-agent: Googlebot

Disallow: /services/

This prevents Google from crawling service pages, causing them to disappear from search results.

Robots.txt is a powerful but delicate tool.

It helps search engines crawl the right pages correctly, improving your rankings and site performance. But one wrong directive can tank your SEO.

How Does a Robots.txt File Work?

A robots.txt file is a set of rules for search engine crawlers, instructing them which pages they can access.

While search engines like Google, Bing, and Yahoo follow these directives, it’s important to note that robots.txt does not enforce security—it simply acts as a crawler guideline.

Here’s a breakdown of how search engines interpret and follow robots.txt instructions.

The Process of Crawling and Following Robots.txt

When a search engine bot (also called a web crawler) arrives at a website, it follows these steps:

- Checking for a robots.txt file: Before crawling any page, the bot first looks for a file at yourdomain.com/robots.txt.

- Reading the directives: If a robots.txt file exists, the crawler reads its rules to determine what it is allowed or disallowed from crawling.

- Following the specified rules: If a directive blocks certain pages, the crawler avoids them. Otherwise, it proceeds to crawl the allowed content.

- Crawling the site accordingly: The search bot indexes the permitted pages and may store them for ranking in search results.

Example of How Robots.txt Works in Action

Let’s say a website has the following robots.txt file:

User-agent: Googlebot

Disallow: /private/

Disallow: /admin/

Allow: /public-content/

How Googlebot Interprets This File

✅ Allowed: https://example.com/public-content/

❌ Blocked: https://example.com/private/

❌ Blocked: https://example.com/admin/

This means Googlebot will not crawl anything under the /private/ and /admin/ directories, but it can crawl and index /public-content/.
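Because the group above names only Googlebot, other crawlers have no matching group and are not restricted by it. The sketch below checks this with Python’s standard-library `urllib.robotparser`; the helper name `report` is hypothetical and the example.com URLs are placeholders.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /private/
Disallow: /admin/
Allow: /public-content/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def report(user_agent: str, urls: list) -> dict:
    """Map each URL to whether the given crawler may fetch it."""
    return {url: parser.can_fetch(user_agent, url) for url in urls}


URLS = [
    "https://example.com/public-content/",
    "https://example.com/private/",
    "https://example.com/admin/",
]

if __name__ == "__main__":
    for agent in ("Googlebot", "Bingbot"):
        print(agent, report(agent, URLS))
```

If the intent is to restrict every crawler, the group needs `User-agent: *` instead of a single bot name.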

What Happens If There’s No Robots.txt File?

If a website does not have a robots.txt file, search engines assume they can crawl everything (unless restricted by meta robots tags or server-side rules).

However, not having a robots.txt file isn’t always a bad thing, especially for small websites that want all their content to be indexed.

Robots.txt Directives Explained

A robots.txt file comprises simple directives that instruct web crawlers on what to do. Here’s a breakdown of the most common ones:

User-agent

Defines which search engine bot the rule applies to.

Example:

User-agent: Googlebot

This applies rules only to Google’s web crawler.

To apply rules to all bots, use:

User-agent: *

The asterisk (*) means the rule applies to all search engines.

Disallow

Blocks search engines from crawling a specific page or directory.

Example:

User-agent: *

Disallow: /private/

This tells all bots not to crawl anything under /private/.

Allow

Explicitly permits search engines to crawl a specific page (helpful when blocking a directory but allowing an exception).

Example:

User-agent: *

Disallow: /blog/

Allow: /blog/latest-post.html

This blocks all pages under /blog/ except /blog/latest-post.html.
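The exception works because Google’s documentation and RFC 9309 resolve conflicts by specificity: the longest matching rule wins, and Allow wins a tie. The following is a minimal sketch of that rule for plain path prefixes (no wildcards); `is_allowed` and `BLOG_RULES` are hypothetical names. Parsers that apply the first matching rule instead, such as Python’s `urllib.robotparser`, can give a different answer for the same file.

```python
def is_allowed(rules, path):
    """Resolve Allow/Disallow conflicts by longest matching path prefix.

    `rules` is a list of (directive, path_prefix) tuples for one user-agent
    group. The longest matching rule wins; if an Allow and a Disallow rule
    are equally long, Allow wins. A path that matches no rule is allowed.
    """
    best_length = -1
    allowed = True
    for directive, prefix in rules:
        if not prefix or not path.startswith(prefix):
            continue  # an empty rule path matches nothing
        is_allow = directive.lower() == "allow"
        if len(prefix) > best_length or (len(prefix) == best_length and is_allow):
            best_length = len(prefix)
            allowed = is_allow
    return allowed


BLOG_RULES = [
    ("Disallow", "/blog/"),
    ("Allow", "/blog/latest-post.html"),
]

if __name__ == "__main__":
    for path in ("/blog/latest-post.html", "/blog/old-post.html", "/about/"):
        print(path, "->", "allowed" if is_allowed(BLOG_RULES, path) else "blocked")
```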

Crawl-delay

Tells crawlers to wait a certain number of seconds between requests (helpful in reducing server overload).

Example:

User-agent: Bingbot

Crawl-delay: 10

This tells Bingbot to wait 10 seconds before making another request.

Note: Google does not support Crawl-delay. Googlebot adjusts its crawl rate automatically based on how your server responds; the manual crawl-rate setting in Google Search Console was retired in early 2024.
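For crawlers that do honor the directive, the behavior amounts to a pause between requests. Here is a hedged sketch using `urllib.robotparser`, whose `crawl_delay()` method is available in Python 3.6 and later; `delay_for`, `polite_fetch_all`, and the `fetch` callback are hypothetical names used only for illustration.

```python
import time
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: Bingbot
Crawl-delay: 10
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def delay_for(user_agent: str) -> float:
    """Seconds to wait between requests; 0 when no Crawl-delay applies."""
    delay = parser.crawl_delay(user_agent)
    return float(delay) if delay is not None else 0.0


def polite_fetch_all(user_agent, urls, fetch, sleep=time.sleep):
    """Call `fetch(url)` for each URL, pausing between consecutive requests."""
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            sleep(delay_for(user_agent))
        results.append(fetch(url))
    return results
```

Passing `sleep` as a parameter keeps the sketch testable without actually waiting ten seconds per request.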

Sitemap

Indicates the location of your website’s XML sitemap to help search engines find essential pages more efficiently.

Example:

Sitemap: https://example.com/sitemap.xml

Even if you don’t use any other rules, including the Sitemap: directive in robots.txt is a best practice.

Does Robots.txt Completely Block a Page from Search Results?

Not necessarily. Even if you block a page in robots.txt, search engines can still display it in search results if other pages link to it.

For example, if example.com/hidden-page/ is blocked in robots.txt but another website links to it, Google might still display the URL in search results without indexing its content.

If you need to remove a page from search results completely, use a noindex meta tag instead, and leave the page crawlable so search engines can see the tag.

How to Test If Your Robots.txt File Works Correctly

Testing your robots.txt file before assuming it’s working as intended is critical. Google provides a built-in tool to validate your robots.txt rules.

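Use Google’s Robots.txt Report

Google retired the standalone Robots.txt Tester in late 2023 and replaced it with a robots.txt report in Search Console.

- Go to Google Search Console

- Navigate to Settings > robots.txt

- Review the fetch status of each robots.txt file and any errors or warnings Google flags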

Check with Google’s URL Inspection Tool

- Go to Google Search Console

- Use the URL Inspection Tool

- It will tell you if a page is blocked by robots.txt

-

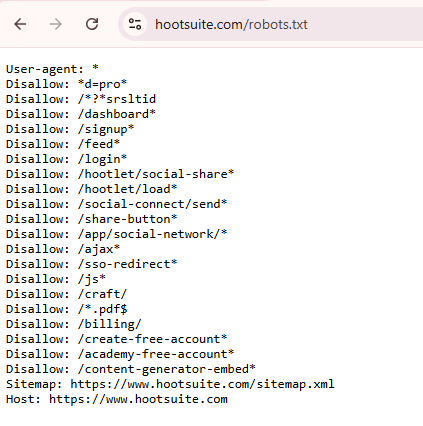

View Robots.txt File Manually

You can also visit your robots.txt file directly by going to:

📌https://yourdomain.com/robots.txtA well-structured robots.txt file is essential for guiding search engine crawlers and optimizing your website’s crawl efficiency.

However, it must be configured carefully—a small mistake can block important content or fail to prevent the crawling of irrelevant pages.

Common Robots.txt Mistakes to Avoid

A well-structured robots.txt file can significantly improve your site’s SEO, but one small mistake can block your entire website from search engines.

Many site owners unintentionally misconfigure their robots.txt file, which can lead to indexing issues, loss of rankings, and even a complete deindexation from Google.

Let’s review the most critical robots.txt mistakes and how to avoid them.

Accidentally Blocking Your Entire Website

This is one of the most damaging mistakes—blocking all search engines from crawling your site.

An incorrect robots.txt file that blocks everything:

User-agent: *

Disallow: /

Fix:

If you want your site to be crawled normally, remove the Disallow: / rule or specify only the pages that should be blocked.

✅ Correct version (allow full crawling):

User-agent: *

Disallow:

(An empty Disallow: means no pages are blocked.)

If you need to block only certain directories, be specific:

✅ Example of a properly configured robots.txt file:

User-agent: *

Disallow: /admin/

Disallow: /private/
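Since a single stray `Disallow: /` can take a whole site out of search, it can be worth checking for it automatically before a file goes live. A minimal sketch, assuming Python’s `urllib.robotparser`; the function name `blocks_entire_site` is hypothetical, and where this check runs (a deploy script, a scheduled job) is left open.

```python
from urllib.robotparser import RobotFileParser


def blocks_entire_site(robots_txt: str, user_agent: str = "*") -> bool:
    """Return True if even the homepage is off limits for this crawler."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, "/")


if __name__ == "__main__":
    candidates = {
        "blocks everything": "User-agent: *\nDisallow: /",
        "allows everything": "User-agent: *\nDisallow:",
        "blocks two folders": "User-agent: *\nDisallow: /admin/\nDisallow: /private/",
    }
    for label, text in candidates.items():
        print(label, "->", blocks_entire_site(text))
```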

Blocking Essential Pages That Should Be Indexed

Sometimes, businesses accidentally block key pages like product listings, category pages, or service pages, which hurts their rankings and traffic.

Example of an ineffective robots.txt configuration:

User-agent: *

Disallow: /services/

Disallow: /blog/

In this case, all service and blog pages are blocked, preventing them from appearing in search results.

Fix:

Only block low-value or duplicate pages instead of entire sections.

✅ Correct version:

User-agent: *

Disallow: /admin/

Disallow: /checkout/

Allow: /blog/

Allow: /services/

Blocking JavaScript and CSS (Hurting Core Web Vitals)

Many site owners mistakenly block JavaScript or CSS files, thinking they aren’t important for SEO.

However, Google needs access to JS and CSS files to render and index your site properly.

Incorrect robots.txt that blocks essential files:

User-agent: *

Disallow: /wp-includes/

Disallow: /assets/css/

Disallow: /assets/js/

Fix:

Google has explicitly stated that blocking JS and CSS can harm rankings. You should allow search engines to crawl these files so they can render your site correctly.

✅ Correct version (allowing CSS & JS):

User-agent: *

Allow: /assets/css/

Allow: /assets/js/

Using Noindex in Robots.txt (Deprecated by Google)

Previously, some SEO professionals used the Noindex directive in robots.txt to prevent pages from appearing in search results. However, Google stopped supporting Noindex in robots.txt in 2019.

Incorrect robots.txt using Noindex (no longer works):

User-agent: *

Disallow: /private/

Noindex: /private/

Fix:

Instead of Noindex in robots.txt, use a meta robots tag in the HTML of the page you want to block.

✅ Correct method (using meta robots in the page header):

<meta name="robots" content="noindex, nofollow">

Forgetting to Block Staging or Development Sites

If you’re running a staging website (for testing) or have a development site, you don’t want it appearing in search results.

Example of an unprotected staging site that gets indexed:

- https://staging.yoursite.com/

- https://dev.yoursite.com/

Fix:

Use robots.txt to block all bots from crawling your staging environment:

✅ Correct robots.txt for staging sites:

User-agent: *

Disallow: /

Warning:

This blocks all crawlers, including Google, but it does not prevent public access. To fully protect a staging site, use password protection with .htaccess.

Blocking Pages Instead of Using Canonical Tags

Many site owners mistakenly block duplicate pages using robots.txt when they should be using canonical tags.

Incorrect way to handle duplicate content:

User-agent: *

Disallow: /category/page2/

Fix:

Instead of blocking duplicate content in robots.txt, indicate the preferred version on the page with a canonical tag.

✅ Correct method (use canonical instead of Disallow), placed in the <head> of the duplicate page:

<link rel="canonical" href="https://example.com/category/">

This tells Google to prioritize the main category page without blocking crawling.

Not Including a Sitemap in Robots.txt

Your sitemap helps search engines find and index your important pages faster. You’re missing an easy SEO boost if you forget to add it in robots.txt.

Mistake: Not specifying a sitemap in robots.txt

User-agent: *

Disallow: /private/

Fix:

Always include a sitemap reference in robots.txt to guide search engines.

✅ Correct robots.txt with a sitemap:

User-agent: *

Disallow: /private/

Sitemap: https://example.com/sitemap.xml

SEO Best Practices for Robots.txt

A properly optimized robots.txt file helps search engines crawl your site efficiently while keeping non-essential pages out of the index.

However, robots.txt should be strategically configured, not just set up and forgotten.

Here are the most effective SEO best practices to ensure your robots.txt file enhances search visibility without harming rankings.

Always Place the Robots.txt File in the Root Directory

Your robots.txt file must be located in your domain’s root directory for search engines to recognize it.

Incorrect Placement:

- https://example.com/folder/robots.txt (This will not work.)

- https://example.com/blog/robots.txt (Bots will not check here.)

✅ Correct Placement:

- https://example.com/robots.txt (Bots check this location first.)

To verify if your robots.txt file is properly placed, simply visit:

https://yourdomain.com/robots.txt

Be Specific When Blocking Pages—Avoid Overuse of Disallow

Blocking too many pages with Disallow rules can harm SEO and prevent important content from ranking.

Incorrect Practice (Overblocking important sections):

User-agent: *

Disallow: /blog/

Disallow: /services/

Disallow: /products/

This prevents Google from crawling your blog, services, and product pages, which are likely critical for rankings.

Fix:

Instead of blocking entire sections, block only low-value or duplicate pages:

✅ Good Practice (Allow essential sections to be indexed):

User-agent: *

Disallow: /checkout/

Disallow: /admin/

Disallow: /login/

Allow: /blog/

Allow: /services/

Allow CSS and JavaScript for Better Indexing

Google needs access to CSS and JavaScript to render your website properly. Blocking them can negatively impact rankings.

Incorrect Practice (Blocking JS and CSS):

User-agent: *

Disallow: /assets/js/

Disallow: /assets/css/

Fix:

✅ Good Practice (Allowing CSS & JavaScript for proper rendering):

User-agent: *

Allow: /assets/css/

Allow: /assets/js/

Google’s John Mueller has confirmed that blocking CSS/JS can harm rankings because search engines won’t fully understand how the page should be displayed.

Always Include Your Sitemap for Better Crawlability

A sitemap.xml file helps search engines find and index important pages faster. Always reference your sitemap in robots.txt.

✅ Best Practice (Include sitemap in robots.txt):

User-agent: *

Sitemap: https://example.com/sitemap.xml

This helps search engines crawl and index your site more efficiently.

Use Wildcards (*) and Dollar Signs ($) Properly

Wildcards (*) and end-of-URL markers ($) help precisely control what search engines block.

✅ Example (Blocking all PDF files from being crawled):

User-agent: *

Disallow: /*.pdf$

✅ Example (Blocking all URLs with a query parameter ?session=):

User-agent: *

Disallow: /*?session=
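To reason about what a wildcard pattern will and won’t catch, it can help to translate it into a regular expression. The sketch below assumes the commonly documented semantics, where `*` matches any run of characters and a trailing `$` anchors the end of the URL; `pattern_to_regex` and `matches` are hypothetical helper names, not part of any crawler’s API.

```python
import re


def pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern into a compiled regex.

    `*` matches any run of characters; a trailing `$` anchors the end of
    the URL. Everything else is matched literally from the start of the path.
    """
    anchored = pattern.endswith("$")
    body = pattern[:-1] if anchored else pattern
    regex = ".*".join(re.escape(part) for part in body.split("*"))
    return re.compile(regex + ("$" if anchored else ""))


def matches(pattern: str, path: str) -> bool:
    """Return True if the path (including any query string) matches the pattern."""
    return pattern_to_regex(pattern).match(path) is not None


if __name__ == "__main__":
    checks = [
        ("/*.pdf$", "/files/report.pdf"),
        ("/*.pdf$", "/files/report.pdf?download=1"),
        ("/*?session=", "/cart?session=abc"),
        ("/*?session=", "/cart?page=2&session=abc"),
    ]
    for pattern, path in checks:
        print(pattern, path, "->", matches(pattern, path))
```

Note the last case: `/*?session=` only matches when session is the first query parameter, because the pattern contains a literal question mark. A pattern such as `/*session=` would be needed to catch it in any position.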

Avoid Blocking Pages That Contain Internal Links

If you disallow a page but still link to it internally, search engines may index the URL without crawling it, leaving bare listings with no description in search results.

Bad Practice (Blocking pages that are internally linked):

User-agent: *

Disallow: /blog/

If other pages link to /blog/, search engines might still list the URL in search results without a meta description or page content.

Fix:

Instead of blocking pages in robots.txt, use a meta robots tag with “noindex”:

✅ Correct method (Meta robots noindex instead of blocking in robots.txt):

<meta name="robots" content="noindex, follow">

Keep Robots.txt Small and Simple

A bloated robots.txt file can confuse search engines and slow down crawling. Keep it concise, clear, and well-structured.

Incorrect Practice (Overcomplicated robots.txt file):

User-agent: *

Disallow: /folder1/

Disallow: /folder2/

Disallow: /folder3/

Disallow: /folder4/

Disallow: /folder5/

Disallow: /folder6/

Fix:

✅ Good Practice (Simplified and organized robots.txt):

User-agent: *

Disallow: /private/

Disallow: /admin/

Sitemap: https://example.com/sitemap.xml

Test Your Robots.txt File Regularly

Misconfigurations in robots.txt can cause SEO disasters. Review the file regularly using the robots.txt report in Google Search Console.

How to Check Your Robots.txt File

- Google Search Console > Settings > robots.txt report

- URL Inspection Tool – Check if a URL is blocked

- Visit https://yourdomain.com/robots.txt to ensure it loads correctly
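Regular checks can also be scripted. The sketch below compares a robots.txt file against two lists you maintain yourself: URLs that must stay crawlable and URLs that must stay blocked. It assumes Python’s `urllib.robotparser`, which handles plain path prefixes but not `*` or `$` patterns; `audit_robots` and the example.com URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def audit_robots(robots_txt, must_allow, must_block, user_agent="*"):
    """Return a list of problems; an empty list means every check passed."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    problems = []
    for url in must_allow:
        if not parser.can_fetch(user_agent, url):
            problems.append(f"blocked but should be crawlable: {url}")
    for url in must_block:
        if parser.can_fetch(user_agent, url):
            problems.append(f"crawlable but should be blocked: {url}")
    return problems


if __name__ == "__main__":
    robots_txt = "User-agent: *\nDisallow: /admin/\nDisallow: /blog/\n"
    for problem in audit_robots(
        robots_txt,
        must_allow=["https://example.com/blog/post", "https://example.com/services/"],
        must_block=["https://example.com/admin/"],
    ):
        print(problem)
```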

Robots.txt vs Meta Robots vs X-Robots – What’s the Difference?

When it comes to controlling how search engines interact with your website, robots.txt, meta robots tags, and X-Robots-Tag HTTP headers are three commonly used methods.

However, they serve different purposes and should be used strategically.

This section will break down the key differences, when to use each, and how they impact SEO.

What Is Robots.txt?

Robots.txt is a file placed in your website’s root directory that tells search engines which pages or sections they should not crawl.

It does not prevent indexing if a page is linked somewhere on the internet.

When to Use Robots.txt

- To prevent unnecessary crawling of certain sections (e.g., login pages, cart pages)

- To save crawl budget by blocking duplicate or non-important pages

- To block search bots from crawling specific files (e.g., scripts, filter pages)

Example of a Robots.txt File

User-agent: *

Disallow: /admin/

Disallow: /private/

Sitemap: https://example.com/sitemap.xml

Limitation: Robots.txt does NOT stop indexing—if other pages link to a disallowed page, Google might still index its URL.

What Is a Meta Robots Tag?

A meta robots tag is placed inside the <head> section of an HTML page to control whether search engines can index and follow links on that specific page.

When to Use Meta Robots Tags

- To prevent the indexing of a page without blocking crawlers

- To remove low-value pages from search results (without blocking internal linking)

- To control whether search engines follow links on a page

Example of a Meta Robots Tag

<meta name="robots" content="noindex, follow">

Directives in Meta Robots Tags

- index: Allows the page to be indexed

- noindex: Prevents the page from being indexed

- follow: Allows search engines to follow links on the page

- nofollow: Prevents search engines from following links on the page

Limitation: Unlike robots.txt, meta robots tags require search engines to crawl the page before obeying the directive. If a page is disallowed in robots.txt, search engines won’t crawl it to see the meta robots tag.
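This conflict is easy to create by accident, so here is a hedged sketch that flags it: a page that carries noindex while robots.txt stops crawlers from ever reading the tag. It uses only the Python standard library; `RobotsMetaFinder` and `noindex_is_hidden` are hypothetical names, and fetching the HTML is left to the caller.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class RobotsMetaFinder(HTMLParser):
    """Collect the directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() == "robots":
            for directive in attributes.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def noindex_is_hidden(robots_txt, url, html, user_agent="*"):
    """True if the page says noindex but robots.txt keeps crawlers from seeing it."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return "noindex" in finder.directives and not parser.can_fetch(user_agent, url)
```

When the function returns True, the usual remedy is to remove the Disallow rule for that URL so the noindex tag can take effect.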

What Is an X-Robots-Tag HTTP Header?

The X-Robots-Tag works like a meta robots tag, but instead of being placed in the HTML <head>, it is set in the HTTP response headers.

This allows you to control indexing for non-HTML files like PDFs, images, videos, or other downloadable files.

When to Use X-Robots-Tag

- To block the indexing of non-HTML content (PDFs, images, videos)

- To apply noindex or nofollow directives site-wide

- To prevent search engines from storing cached copies of certain files

Example of an X-Robots-Tag in an HTTP Header

HTTP/1.1 200 OK

X-Robots-Tag: noindex, nofollow

Limitation: The X-Robots-Tag requires server-level access, meaning it’s not as easily accessible as robots.txt or meta robots tags.
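How the header gets set depends on the server; many sites do it in web server configuration. For an application served through Python, one possible approach is a small WSGI wrapper like the sketch below. The names `add_x_robots_tag` and `demo_app` are hypothetical, and matching on the `.pdf` extension is just an example policy.

```python
def add_x_robots_tag(app, extensions=(".pdf",), value="noindex, nofollow"):
    """Wrap a WSGI app so responses for matching paths carry X-Robots-Tag."""

    def wrapped(environ, start_response):
        path = environ.get("PATH_INFO", "").lower()

        def patched_start_response(status, headers, exc_info=None):
            if path.endswith(tuple(extensions)):
                headers = list(headers) + [("X-Robots-Tag", value)]
            return start_response(status, headers, exc_info)

        return app(environ, patched_start_response)

    return wrapped


def demo_app(environ, start_response):
    """Stand-in application that returns an empty 200 response."""
    start_response("200 OK", [("Content-Type", "application/octet-stream")])
    return [b""]


# Requests for PDF files get the header; every other response is unchanged.
application = add_x_robots_tag(demo_app)
```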

Comparison Table – Robots.txt vs Meta Robots vs X-Robots

| Feature | Robots.txt | Meta Robots | X-Robots-Tag |
| --- | --- | --- | --- |
| Prevents Crawling | ✅ Yes | ❌ No | ❌ No |
| Prevents Indexing | ❌ No (Google may still index) | ✅ Yes | ✅ Yes |
| Blocks Internal Links from Being Followed | ❌ No | ✅ Yes (nofollow) | ✅ Yes (nofollow) |
| Controls Indexing of Non-HTML Files | ❌ No | ❌ No | ✅ Yes |
| Requires Page Crawl to Work? | ❌ No | ✅ Yes | ✅ Yes |
| Requires Server Access? | ❌ No | ❌ No | ✅ Yes |

Which One Should You Use?

✅ Use Robots.txt when:

- You want to prevent unnecessary crawling of non-important pages

- You need to conserve crawl budget

- You don’t need to block indexing—only crawling

✅ Use Meta Robots Tags when:

- You want to stop search engines from indexing a specific page

- You want to control whether search engines follow or ignore links on a page

- The page should still be accessible to users and crawlers

✅ Use X-Robots-Tag when:

- You need to block the indexing of non-HTML files like PDFs or images

- You want more advanced control over indexing at a server level

How to Check and Validate a Robots.txt File

A misconfigured robots.txt file can block critical pages, prevent proper indexing, and negatively impact your search rankings.

That’s why it’s essential to regularly check and validate your robots.txt file to ensure it’s working as intended.

In this section, we’ll discuss the best methods and tools for checking, testing, and validating your robots.txt file.

Checking Your Robots.txt File Manually

The simplest way to check if your robots.txt file is live and accessible is to visit the following URL in your browser:

📌 https://yourdomain.com/robots.txt

What to Look For

- The file loads correctly and isn’t showing a 404 error

- The syntax is properly formatted without extra spaces or incorrect directives

- The correct rules are applied to essential pages and directories

If your robots.txt file returns a 404 error, search engines may assume no restrictions and crawl everything on your website.
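How a crawler reacts depends on the HTTP status it receives for robots.txt. The sketch below follows the general approach described in RFC 9309: a successful response is parsed, a 4xx response is treated as "no restrictions", and a 5xx response is treated as "everything off limits". Individual crawlers differ on some codes (Google, for example, documents special handling for 429), and redirects are left out, so treat this as an illustration. `policy_for_status` is a hypothetical name.

```python
from urllib.robotparser import RobotFileParser


def policy_for_status(status_code, body=""):
    """Return a function url -> bool describing what a crawler may fetch."""
    if 200 <= status_code < 300:
        parser = RobotFileParser()
        parser.parse(body.splitlines())
        return lambda url: parser.can_fetch("*", url)
    if 400 <= status_code < 500:
        # File unavailable: the crawler assumes there are no restrictions.
        return lambda url: True
    if 500 <= status_code < 600:
        # Server error: the crawler assumes the whole site is off limits.
        return lambda url: False
    raise ValueError(f"status {status_code} is not covered by this sketch")


if __name__ == "__main__":
    url = "https://example.com/admin/"
    print("404:", policy_for_status(404)(url))
    print("503:", policy_for_status(503)(url))
    print("200:", policy_for_status(200, "User-agent: *\nDisallow: /admin/")(url))
```

The practical takeaway is that a robots.txt URL returning server errors can be more damaging than a missing file.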

Using Google Search Console’s Robots.txt Report

Google provides a built-in report that shows how it fetched and parsed your robots.txt file.

How to Use It

- Go to Google Search Console

- Navigate to Settings > robots.txt

- Open the report to see when the file was last crawled and whether the fetch succeeded

- If there are errors or warnings, Google will highlight them for corrections

This tool is helpful for:

- Identifying syntax errors in your robots.txt file

- Confirming which version of the file Google last fetched

- Requesting a recrawl after you publish changes

Using Google’s URL Inspection Tool

Another way to check if a page is blocked by robots.txt is to use Google’s URL Inspection Tool in Google Search Console.

- Go to Google Search Console

- Select URL Inspection

- Enter the page URL you want to check

- Look for the “Crawl allowed?” status

If a page is blocked by robots.txt, Google cannot crawl it, though the URL can still be indexed if other pages link to it.

Using Third-Party Robots.txt Testing Tools

Several SEO tools offer advanced robots.txt testing features to ensure proper configuration.

Recommended Tools

- Semrush Site Audit – Checks robots.txt issues in a technical SEO audit

- Ahrefs Site Audit – Identifies crawling issues related to robots.txt

- Screaming Frog SEO Spider – Simulates search engine crawling behavior

- Robots.txt Checker by Ryte – Validates robots.txt syntax

These tools help detect:

- Incorrect syntax that could be blocking search engines

- Unintended crawl restrictions

- Errors in disallow/allow directives

Checking Robots.txt in Bing Webmaster Tools

To ensure that Bing is properly crawling your site, you can check robots.txt via Bing Webmaster Tools.

- Sign in to Bing Webmaster Tools

- Navigate to Diagnostics & Tools > Robots.txt Tester

- Enter a URL and check if it’s blocked by robots.txt

Note: Google and Bing have slightly different crawling behaviors, so it’s good to check both.

Validating Robots.txt Syntax With Online Validators

If you suspect syntax errors in your robots.txt file, you can validate it using an online robots.txt validator.

Popular Robots.txt Validators

- Google Search Console’s robots.txt report

- Ryte Robots.txt Validator

- SEOptimer Robots.txt Checker

These tools help detect:

- Incorrect syntax (e.g., missing colons, typos)

- Unrecognized directives

- Conflicts between rules

Incorrect syntax can cause search engines to ignore the affected rules, or in the worst case the whole file, making these validators a critical part of your SEO workflow.

How to Fix Robots.txt Errors

If your robots.txt file has issues, follow these steps to fix and optimize it:

Step 1: Identify Errors Using a Validator

- Use Google Search Console, Ahrefs, or a robots.txt validator to detect syntax errors.

Step 2: Edit Your Robots.txt File

- Open your robots.txt file using a text editor or FTP access to your site.

- Make corrections based on errors detected.

Step 3: Test Your Changes Before Going Live

- Use a robots.txt validator to check that the changes work correctly before you upload the file.

Step 4: Upload the Updated File to Your Root Directory

- Ensure it is accessible at https://yourdomain.com/robots.txt.

Step 5: Request Google to Recrawl Your Site

- After updating robots.txt, request a recrawl in Google Search Console to speed up changes.

How to Create a Robots.txt File: A Step-by-Step Guide

Creating a robots.txt file is a simple yet essential step in optimizing your website for search engines.

Whether you’re setting up a new site or refining an existing one, a well-structured robots.txt file helps control crawler access, improve SEO, and manage search engine behavior.

This step-by-step guide will walk you through correctly creating, editing, and uploading a robots.txt file.

Step 1 – Open a Text Editor

Since robots.txt is a plain text file, you can create it using a simple text editor like:

- Notepad (Windows)

- TextEdit (Mac – in plain text mode)

- VS Code, Sublime Text, or Atom (for advanced users)

Tip: Avoid using word processors like Microsoft Word, as they add extra formatting that may cause errors.

Step 2 – Write Basic Robots.txt Directives

Start by defining the User-agent (search engine bot) and adding rules using Disallow and Allow directives.

Basic Robots.txt Example:

User-agent: *

Disallow: /admin/

Disallow: /private/

Allow: /public-content/

Sitemap: https://example.com/sitemap.xml

Explanation:

- User-agent: * → Applies the rules to all search engines

- Disallow: /admin/ → Blocks the /admin/ section from being crawled

- Disallow: /private/ → Blocks /private/ pages

- Allow: /public-content/ → Ensures that /public-content/ can be crawled

- Sitemap: → Specifies the location of the XML sitemap for better indexing

Tip: If you want to allow all search engines to crawl everything, use this:

User-agent: *

Disallow:
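
To see how a crawler might read the basic example above, here is a small Python sketch using the standard library’s urllib.robotparser. The test URLs are made up for illustration, and Python’s parser is only an approximation of how Googlebot or Bingbot evaluate rules.

```python
from urllib.robotparser import RobotFileParser

# The basic example from this step, written without blank lines between
# directives (Python's parser treats a blank line as the end of a group).
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /private/
Allow: /public-content/
Sitemap: https://example.com/sitemap.xml
"""


def load_rules(text):
    """Parse robots.txt text into a RobotFileParser without any network access."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


parser = load_rules(ROBOTS_TXT)
print(parser.can_fetch("*", "https://example.com/admin/login"))           # False
print(parser.can_fetch("*", "https://example.com/public-content/guide"))  # True
print(parser.can_fetch("*", "https://example.com/about"))                 # True
print(parser.site_maps())  # ['https://example.com/sitemap.xml']
```

Note that site_maps() requires Python 3.8 or later.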

Step 3 – Adding Advanced Directives (Optional)

If your site has special crawling needs, you may need advanced robots.txt directives.

Example: Blocking Only Specific Bots

You can target specific bots while allowing others:

User-agent: Googlebot

Disallow: /test-page/

User-agent: Bingbot

Disallow: /temp/

Googlebot is blocked from /test-page/, while Bingbot is blocked from /temp/.
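
A quick way to confirm that each group only affects its own bot is to query the rules per user agent. The sketch below uses Python’s urllib.robotparser; the helper name blocked_paths and the DuckDuckBot check are assumptions added for illustration.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /test-page/

User-agent: Bingbot
Disallow: /temp/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def blocked_paths(agent, paths):
    """Return the subset of paths that the given user agent may not crawl."""
    return [path for path in paths if not parser.can_fetch(agent, path)]


paths = ["/test-page/", "/temp/", "/about/"]
print(blocked_paths("Googlebot", paths))    # ['/test-page/']
print(blocked_paths("Bingbot", paths))      # ['/temp/']
print(blocked_paths("DuckDuckBot", paths))  # [] (no group applies, so nothing is blocked)
```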

Example: Blocking Non-HTML Files (PDFs, Images, etc.)

To prevent search engines from crawling PDFs:

User-agent: *

Disallow: /*.pdf$

To block images from appearing in Google Image Search:

User-agent: Googlebot-Image

Disallow: /
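
The * and $ characters in a rule like /*.pdf$ are wildcards: * matches any sequence of characters, and a trailing $ anchors the rule to the end of the URL. Python’s built-in urllib.robotparser uses plain prefix matching and does not interpret them, so the following is a hand-written sketch of the matching logic. The function name rule_matches is an assumption for this example.

```python
import re


def rule_matches(pattern, path):
    """Check a URL path against a robots.txt rule with * and $ wildcards.

    '*' matches any sequence of characters; a trailing '$' means the path
    must end where the pattern ends. Without '$', the rule is a prefix match.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape literal pieces and join them with '.*' wherever a '*' appeared.
    regex = re.compile(".*".join(re.escape(piece) for piece in pattern.split("*")))
    found = regex.fullmatch(path) if anchored else regex.match(path)
    return found is not None


print(rule_matches("/*.pdf$", "/files/report.pdf"))      # True
print(rule_matches("/*.pdf$", "/files/report.pdf?v=2"))  # False
print(rule_matches("/admin/", "/admin/users"))           # True
```

The second result shows why the $ matters: a PDF URL with a query string no longer ends in .pdf, so this rule does not block it.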

Example: Allowing a Specific Page in a Blocked Folder

If you block a directory but want to allow one specific page inside it:

User-agent: *

Disallow: /blog/

Allow: /blog/best-article.html

Google will not crawl the blog folder except for best-article.html.
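
This works because Google resolves conflicts by picking the most specific (longest) matching rule, with Allow winning a tie. The Python sketch below illustrates that precedence with simple prefix matching; is_allowed and the rule tuples are names assumed for this example. Python’s own urllib.robotparser applies the first matching rule instead, so it would report the page as blocked.

```python
def is_allowed(rules, path):
    """Apply longest-match precedence to a list of (directive, prefix) rules.

    The longest matching prefix wins; if an Allow and a Disallow rule match
    with the same length, Allow wins. No matching rule means allowed.
    """
    best_length = -1
    allowed = True
    for directive, prefix in rules:
        if not prefix or not path.startswith(prefix):
            continue  # an empty Disallow restricts nothing
        is_allow = directive.lower() == "allow"
        if len(prefix) > best_length or (len(prefix) == best_length and is_allow):
            best_length = len(prefix)
            allowed = is_allow
    return allowed


rules = [("Disallow", "/blog/"), ("Allow", "/blog/best-article.html")]
print(is_allowed(rules, "/blog/best-article.html"))  # True
print(is_allowed(rules, "/blog/other-post.html"))    # False
print(is_allowed(rules, "/about/"))                  # True
```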

Step 4 – Save the Robots.txt File

File name: robots.txt

File format: Plain text (.txt)

Encoding: UTF-8 (default for most text editors)
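
If you generate the file from a script rather than a text editor, the same three requirements apply. Here is a minimal Python sketch, assuming a hypothetical helper named save_robots_txt; it writes to a temporary folder so it can be run safely.

```python
import tempfile
from pathlib import Path


def save_robots_txt(directory, lines):
    """Write the rules to <directory>/robots.txt as plain UTF-8 text (no BOM)."""
    target = Path(directory) / "robots.txt"
    content = "\n".join(lines) + "\n"
    target.write_bytes(content.encode("utf-8"))
    return target


rules = [
    "User-agent: *",
    "Disallow: /admin/",
    "Sitemap: https://example.com/sitemap.xml",
]

with tempfile.TemporaryDirectory() as folder:
    saved = save_robots_txt(folder, rules)
    print(saved.name)                                   # robots.txt
    print(saved.read_text(encoding="utf-8").splitlines()[0])  # User-agent: *
```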

Step 5 – Upload Robots.txt to Your Website

Once your robots.txt file is ready, upload it to the root directory of your site so that it can be accessed at:

📌 https://yourdomain.com/robots.txt
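
Crawlers only look for the file at the root of each host, so a robots.txt placed in a subfolder is ignored, and each subdomain needs its own file. The short Python sketch below derives the expected location from any page URL; robots_txt_url is a name assumed for this example.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url):
    """Return the robots.txt location that governs the given page URL."""
    parts = urlsplit(page_url)
    # Keep the scheme and host, replace the path, and drop query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://yourdomain.com/blog/post?id=1"))
# https://yourdomain.com/robots.txt
print(robots_txt_url("https://shop.yourdomain.com/cart"))
# https://shop.yourdomain.com/robots.txt
```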

How to Upload

Via cPanel / File Manager:

- Log in to your web hosting account

- Go to File Manager

- Navigate to the root directory (public_html/)

- Upload the robots.txt file

Via FTP / SFTP:

- Use an FTP client like FileZilla or Cyberduck

- Connect to your server and navigate to /public_html/

- Upload the robots.txt file

Via WordPress / CMS:

If you use WordPress, you can install plugins like:

- Yoast SEO – Includes a built-in robots.txt editor

- All in One SEO – Allows you to edit robots.txt easily

Note: WordPress automatically generates a virtual robots.txt file if you don’t upload one. However, creating a custom robots.txt file gives you better control.

Step 6 – Test and Validate Your Robots.txt File

After uploading, test your robots.txt file to ensure no errors exist.

How to Test:

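- Google Search Console’s robots.txt report

- Go to Google Search Console

- Navigate to Settings and open the robots.txt report

- Confirm the file was fetched successfully and review any errors or warnings; to check whether a specific URL is blocked or allowed, use the URL Inspection tool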

- Manually Check It in Your Browser

- Open https://yourdomain.com/robots.txt

- Ensure it loads correctly without errors

- Use a Third-Party Validator

- Ryte Robots.txt Tester

- SEOptimer Robots.txt Checker

Step 7 – Submit an Updated Robots.txt File to Google

Whenever you change your robots.txt file, request Google to recrawl it.

How to Submit a New Robots.txt to Google

- Go to Google Search Console

- Navigate to Settings and open the robots.txt report

- Open the menu next to your robots.txt file and choose Request a recrawl

- Google will fetch the file again and apply the updated rules

Tip: Google may cache robots.txt for up to a day, so changes are not always picked up immediately. To speed things up for specific pages, you can request a recrawl of individual URLs via URL Inspection Tool > Request Indexing.

Conclusion

A well-optimized robots.txt file is a powerful tool for helping search engines crawl your site efficiently and keeping crawlers away from unnecessary or non-public pages.

When configured correctly, it can enhance SEO performance and improve crawl budget allocation, though it should not be relied on to secure sensitive areas of your website.

However, misconfigurations can lead to major SEO issues, such as accidentally blocking critical pages or preventing search engines from rendering your site correctly.

That’s why it’s essential to:

- Regularly check and validate your robots.txt file using Google Search Console and other SEO tools

- Only block pages that shouldn’t be crawled, and avoid overusing Disallow

- Ensure search engines can access essential resources like CSS and JavaScript

- Use meta robots tags or X-Robots-Tag for better indexing control

By following best practices and continuously monitoring your robots.txt file, you can optimize your website for search engines while maintaining control over what gets crawled and indexed.

FAQs

1. Does robots.txt prevent search engines from indexing pages?

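No. Robots.txt controls crawling, not indexing, so a blocked page can still appear in search results if other pages link to it. Instead, you can use a meta robots tag (noindex) inside the page <head> to prevent indexing. The page must remain crawlable so that search engines can see the tag.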

<meta name="robots" content="noindex, nofollow">
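
To confirm that a page actually carries the tag, you can parse its HTML and read the robots directives. Here is a minimal Python sketch using the standard library’s html.parser; the class and function names are assumptions for this example, and the HTML string stands in for a page you would normally download first.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives from every <meta name="robots"> tag in a page."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() == "robots":
            for item in attributes.get("content", "").split(","):
                if item.strip():
                    self.directives.add(item.strip().lower())


def robots_directives(html):
    """Return the set of meta robots directives found in the HTML."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


page = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
print("noindex" in robots_directives(page))  # True
```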

2. What happens if I don’t have a robots.txt file?

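Without a robots.txt file, search engines assume they are allowed to crawl every page on your site. Best practice: Include a robots.txt file with a sitemap reference.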

User-agent: *

Sitemap: https://example.com/sitemap.xml