- Crawl errors occur when search engine bots fail to access a website’s content, leading to missed indexing and lower rankings.

- About 25% of websites face crawlability challenges.

- You can find and fix crawl errors using tools like Google Search Console’s Indexing > Pages report and URL Inspection Tool.

- Third-party SEO tools like Screaming Frog and Ahrefs can also be used for a more comprehensive analysis.

- To prevent future errors, conduct regular audits, maintain an optimized robots.txt file, and fix broken links and redirect chains.

In the intricate world of search engine optimization (SEO), crawl errors stand as silent barriers between your website and its desired audience.

These errors occur when search engine bots, like Google’s Googlebot, encounter issues accessing your site’s content, leading to missed indexing opportunities and diminished visibility.

Consider this: As of 2026, approximately 25% of websites face crawlability challenges due to issues such as poor internal linking and misconfigured robots.txt files.

Such obstacles can prevent search engines from effectively indexing your pages, directly impacting your site’s ranking potential.

Moreover, with search engines like Google using over 200 ranking factors in their algorithms, ensuring that your website is free from crawl errors has never been more critical.

A site riddled with these issues hampers search engine bots and frustrates users, leading to higher bounce rates and reduced engagement.

In this comprehensive guide, we’ll explore the nature of crawl errors, their various types, and, most importantly, provide actionable strategies to identify and rectify them.

By addressing these issues head-on, you can enhance your website’s crawlability, ensuring that both search engines and users can access your content seamlessly.

What Are Crawl Errors and Why Do They Matter?

Crawl errors occur when search engine bots, like Googlebot, try to access a webpage but fail due to technical issues.

These errors can be site-wide (preventing crawlers from accessing the entire website) or page-specific (affecting individual URLs).

To understand their importance, it’s crucial to recognize the role of search engine crawlers. Googlebot continuously scans the web, following links and indexing pages.

If Google encounters crawl errors on your site, some pages—or even your entire website—might not appear in search results, impacting your organic traffic.

Why Do Crawl Errors Matter for SEO?

Crawl errors can have significant consequences for your website’s performance, including:

Lost Search Rankings and Visibility

If Google cannot access key pages, those pages won’t be indexed.

Studies show that websites with persistent crawl errors experience a drop in rankings.

Wasted Crawl Budget

Google allocates a specific amount of resources to crawl each website, known as the crawl budget.

If bots repeatedly encounter errors, they may stop crawling important pages, leading to reduced indexation and missed ranking opportunities.

Poor User Experience

Broken pages, slow loading times, and access errors frustrate visitors.

According to a user behavior study, 88% of online consumers are less likely to return to a website after a bad experience.

Decreased Revenue and Conversions

If essential landing pages, product pages, or blog posts fail to load, your site could suffer from lower engagement and fewer conversions.

How Do Crawl Errors Happen?

Crawl errors typically occur due to:

- Server issues (downtime, slow response times)

- Misconfigured robots.txt files (accidentally blocking crawlers)

- Broken internal links (pointing to non-existent pages)

- Redirect errors (chains, loops, or improper 301/302 usage)

Types of Crawl Errors and Their Causes

Now that we understand why crawl errors matter, let’s check out their types.

Crawl errors generally fall into two main categories: site-wide errors and URL-specific errors. Each type has distinct causes and can impact your website differently.

Site Errors (Critical Issues Affecting the Entire Website)

Site errors prevent search engines from accessing your website at all, which can severely impact indexing and rankings. These errors are considered high-priority and should be resolved immediately.

DNS Errors

(Domain Name System Issues Preventing Crawling)

What happens? Googlebot cannot resolve your website’s domain name, meaning it can’t find or crawl your site.

Common causes:

- Incorrect DNS configuration

- Website hosting issues

- Domain expiration

How to fix:

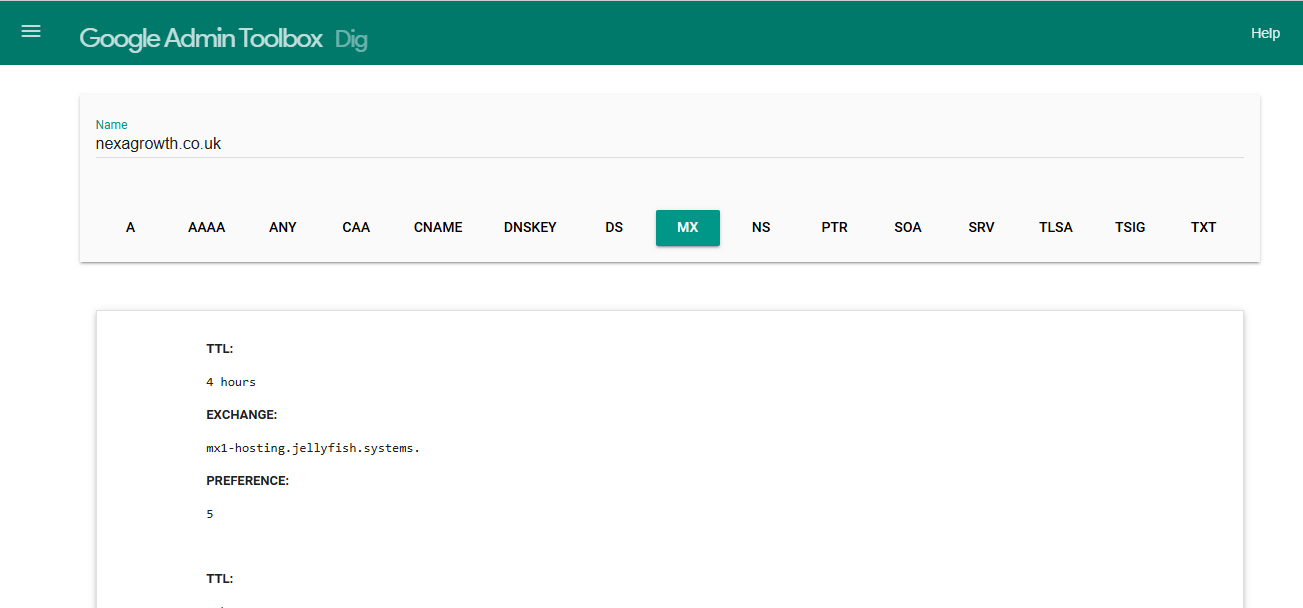

- Use a DNS checker (e.g., Google Dig) to verify domain resolution.

- Contact your hosting provider if the issue persists.
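
If you would rather script the DNS check than use a web tool, the sketch below shows one way to do it with Python's standard library. The function name `resolve_host` and its `resolver` parameter are illustrative assumptions, not part of any SEO tool; the parameter only exists so the lookup can be swapped out for testing.

```python
import socket


def resolve_host(hostname, resolver=socket.getaddrinfo):
    """Return the sorted unique IP addresses for hostname, or [] if the lookup fails."""
    try:
        # Port 443 is only a placeholder; we just want the address records.
        records = resolver(hostname, 443, proto=socket.IPPROTO_TCP)
    except socket.gaierror:
        # gaierror is raised when the name cannot be resolved.
        return []
    # Each record is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({record[4][0] for record in records})
```

Calling `resolve_host("yourdomain.com")` from a machine with network access returns the addresses your domain resolves to. An empty list suggests the same kind of failure Googlebot would hit, although a single lookup from one location is not proof of a global DNS problem.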

Server Errors (5XX Status Codes)

(Your Website is Unreachable for Search Engine Crawlers and Users)

What happens? Googlebot tries to access your site but receives a 5XX error (e.g., 500 Internal Server Error, 502 Bad Gateway).

Common causes:

- Overloaded or slow server

- Misconfigured .htaccess file

- Faulty plugins or scripts

How to fix:

- Check server logs for error details.

- Optimize hosting resources and reduce server load.

- Disable conflicting plugins or scripts.

Robots.txt Errors

(Improperly Configured Robots.txt File Blocking Crawlers)

What happens? Your robots.txt file contains rules that block Googlebot from crawling certain parts of your site—sometimes unintentionally.

Common causes:

- Incorrect Disallow directives

- Syntax errors in robots.txt

- Blocked essential pages

How to fix:

- Use Google Search Console’s robots.txt report (under Settings), which replaced the retired robots.txt Tester, to validate your file.

- Ensure you’re not blocking critical pages unintentionally.

- Remove Disallow: / if mistakenly preventing full site access.
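
As a quick local sanity check, you can also test robots.txt rules against a list of pages you care about. The sketch below uses Python's built-in `urllib.robotparser`; the helper name `blocked_urls`, the sample rules, and the example.com URLs are all made up for illustration. Note that this parser does not implement Google's wildcard extensions (`*` and `$` in paths), so treat the result as a rough check rather than an exact replica of Googlebot's behavior.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    # parse() takes the file content as a list of lines, so no network access is needed.
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical robots.txt content and URLs, for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

IMPORTANT_URLS = [
    "https://www.example.com/",
    "https://www.example.com/blog/post",
    "https://www.example.com/private/report",
]

print(blocked_urls(ROBOTS_TXT, IMPORTANT_URLS))
# prints ['https://www.example.com/private/report']
```

If a page you want indexed shows up in the returned list, that is a strong hint that a Disallow rule is broader than intended.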

URL Errors (Affecting Individual Pages, Not the Entire Site)

Unlike site errors, URL errors occur on specific pages. These issues may prevent certain content from being indexed, reducing visibility for key pages.

404 Not Found Errors

(Pages That No Longer Exist or Have Moved Without Proper Redirects)

What happens? A URL points to a page that doesn’t exist, leading to a “404 Not Found” error.

Common causes:

- Deleted pages with no redirects

- Incorrect internal links

- External links pointing to removed pages

How to fix:

- Set up 301 redirects for deleted pages if relevant.

- Update internal links to point to existing pages.

- Monitor 404 errors in Google Search Console under Indexing > Pages.

Soft 404 Errors

(Pages That Appear Empty or Irrelevant but Return a 200 Status Code)

What happens? A page lacks meaningful content, but instead of returning a 404 error, it incorrectly returns a 200 OK response.

Common causes:

- Thin or low-quality content

- Improperly handled deleted pages

- Faulty CMS settings generating blank pages

How to fix:

- Ensure pages contain valuable content.

- Properly return 404 or 410 status codes for non-existent pages.

- Use Google’s URL Inspection Tool to confirm response codes.
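
To make the idea of a soft 404 concrete, here is a rough heuristic you could run over pages you have already fetched. It is only a sketch: the phrase list and the 50-word threshold are assumptions chosen for illustration, and Google's own soft 404 detection is not public, so this will not match it exactly.

```python
import re

# Phrases that often appear on "not found" templates; an assumed, incomplete list.
NOT_FOUND_PHRASES = ("page not found", "no longer available", "nothing found")
MIN_WORDS = 50  # Assumed threshold for "thin" content; tune it for your site.


def looks_like_soft_404(status_code, html):
    """Flag pages that return 200 OK but look empty or like an error page."""
    if status_code != 200:
        return False  # Real 404/410 responses are not soft 404s.
    # Strip tags crudely to approximate the visible text.
    text = re.sub(r"<[^>]+>", " ", html).lower()
    if any(phrase in text for phrase in NOT_FOUND_PHRASES):
        return True
    return len(text.split()) < MIN_WORDS
```

Pages flagged by a check like this are candidates for either richer content or a proper 404/410 response.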

403 Forbidden Errors

(Restricted Access Preventing Google from Crawling Certain Pages)

What happens? Googlebot is blocked from accessing a page due to permission settings.

Common causes:

- Incorrect file permissions

- Login-required pages

- Firewall or security restrictions

How to fix:

- Adjust file permissions to allow search engine access.

- Avoid requiring login for publicly accessible content.

- Whitelist Googlebot in security settings.

Redirect Errors (Loops and Chains)

(Incorrect Redirects Causing Endless Loops or Long Chains)

What happens? When a page redirects multiple times (redirect chain) or loops back to itself (redirect loop), search engines get stuck.

Common causes:

- Improper 301/302 redirect implementation

- Plugin conflicts (especially in WordPress)

- Redirecting a URL to itself

How to fix:

- Use tools like Screaming Frog to check for redirect chains.

- Fix excessive redirects by pointing URLs directly to the final destination.

- Avoid meta refresh redirects, which can confuse crawlers.
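
The difference between a chain and a loop is easier to see in code. The sketch below walks a redirect map that you would export from your own redirect rules or a crawl; the function name `trace_redirect`, the `max_hops` default, and the sample paths are hypothetical.

```python
def trace_redirect(start_url, redirects, max_hops=10):
    """Follow start_url through a {source: target} redirect map.

    Returns (path, status) where status is "ok", "chain", "loop", or "too_long".
    """
    path = [start_url]
    seen = {start_url}
    current = start_url
    while current in redirects:
        current = redirects[current]
        path.append(current)
        if current in seen:
            return path, "loop"  # We came back to a URL we already visited.
        seen.add(current)
        if len(path) - 1 > max_hops:
            return path, "too_long"
    hops = len(path) - 1
    # Zero or one hop is fine; more than one hop is a chain worth flattening.
    return path, ("chain" if hops > 1 else "ok")


# Hypothetical redirect rules, for illustration only.
REDIRECTS = {"/a": "/b", "/b": "/c", "/x": "/y", "/y": "/x", "/old": "/new"}
```

With these sample rules, `trace_redirect("/a", REDIRECTS)` reports a chain through `/b` to `/c`, which you would fix by pointing `/a` straight at `/c`, while `trace_redirect("/x", REDIRECTS)` reports a loop.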

Not Followed and Access Denied Errors

(Crawlers Are Unable to Follow Links or Access Content)

What happens? Googlebot encounters links it cannot follow or restricted content it cannot crawl.

Common causes:

- JavaScript-based navigation preventing bot access

- nofollow attributes incorrectly applied

- Blocked scripts, images, or CSS files

How to fix:

- Ensure important pages are accessible via HTML links.

- Allow Google to crawl CSS and JavaScript files in robots.txt.

- Avoid overusing nofollow on internal links.
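
To spot links that crawlers may not follow, you can scan a page's HTML for anchors that lack a usable href or carry rel="nofollow". The following is a minimal sketch built on Python's standard `html.parser`; the class and function names are assumptions, and it only inspects static HTML, so links injected by JavaScript at runtime will not appear at all.

```python
from html.parser import HTMLParser


class LinkAuditor(HTMLParser):
    """Collect crawlable links and flag anchors that crawlers may not follow."""

    def __init__(self):
        super().__init__()
        self.followable = []   # hrefs a crawler can follow
        self.nofollow = []     # hrefs marked rel="nofollow"
        self.missing_href = 0  # <a> tags with no usable href (often JavaScript-driven)

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = (attributes.get("href") or "").strip()
        if not href or href.startswith(("javascript:", "#")):
            self.missing_href += 1
        elif "nofollow" in (attributes.get("rel") or "").lower().split():
            self.nofollow.append(href)
        else:
            self.followable.append(href)


def audit_links(html):
    """Parse an HTML string and return the populated LinkAuditor."""
    auditor = LinkAuditor()
    auditor.feed(html)
    return auditor
```

A high `missing_href` count on a navigation-heavy template is a sign that important pages may only be reachable through scripts.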

Identifying and fixing crawl errors is crucial for maintaining a healthy, indexable website.

The key is to monitor these issues regularly and address them promptly to avoid ranking drops and search engine de-indexing.

In the next section, we’ll explore how to find crawl errors using tools like Google Search Console, Screaming Frog, and site audits.

How to Find Crawl Errors Effectively

Fixing crawl errors starts with identifying them.

If search engines struggle to access your website, you’ll need reliable tools to detect the issues before they impact your rankings.

Fortunately, several tools help monitor crawlability, track errors, and provide actionable insights.

Using Google Search Console (GSC) to Identify Crawl Errors

Google Search Console (GSC) is the most powerful free tool for detecting crawl issues. It reports how Googlebot interacts with your site and highlights errors that need fixing, although the data can lag behind live changes by a few days.

How to Find Crawl Errors in GSC

- Sign in to Google Search Console and select your property.

- Navigate to Indexing > Pages to check indexed vs. non-indexed pages.

- Scroll down to the Why Pages Aren’t Indexed section.

- Look for errors such as:

  - Server Errors (5xx)

  - Blocked by robots.txt

  - Not Found (404)

  - Crawled – currently not indexed

  - Redirect errors

- Click on each error type to see affected URLs.

Using the URL Inspection Tool

GSC also offers a URL Inspection Tool, which allows you to check individual pages for indexing issues.

- Enter a URL in the search bar.

- Click Test Live URL to see real-time crawl status.

- Review coverage issues, blocked resources, or indexing problems.

Pro Tip: If a page isn’t indexed, use the Request Indexing feature after fixing the issue.

Running a Full Site Audit With SEO Tools

While Google Search Console provides crawl reports directly from Googlebot, third-party SEO tools offer additional insights.

Best SEO Tools for Detecting Crawl Errors

These tools conduct deep crawls, simulating how search engines navigate your site:

| Tool | Features |
| --- | --- |
| Screaming Frog | Identifies broken links, redirects, server errors, and robots.txt issues |
| Ahrefs Site Audit | Detects crawlability problems, orphan pages, and slow-loading content |
| Semrush Site Audit | Analyzes indexability, internal linking issues, and duplicate content |
| Sitebulb | Provides visual crawl maps to diagnose accessibility problems |

How to Perform a Crawl Audit With Screaming Frog

- Download and install Screaming Frog.

- Enter your website URL and start the crawl.

- Once completed, review these key reports:

  - Response Codes (Check for 404, 500 errors)

  - Blocked by robots.txt (Ensure Googlebot isn’t blocked)

  - Redirect Chains & Loops (Fix excessive redirects)

  - Orphan Pages (Find pages without internal links)

- Export reports and prioritize issues for fixes.

Pro Tip: Set Screaming Frog’s user-agent to Googlebot to approximate how the search engine’s crawler sees your site.

Log File Analysis: The Advanced Approach

For larger websites, log file analysis provides deeper insights into how search engines crawl your site.

What Are Log Files?

Log files record every request made to your website, including search engine bot visits. By analyzing them, you can see:

- Which pages Googlebot crawls most often

- Crawl budget distribution

- Pages ignored by Googlebot

- Server response times

How to Analyze Log Files for Crawl Errors

- Access server logs from your hosting provider.

- Use tools like Screaming Frog Log File Analyzer or Splunk.

- Identify:

  - 404 errors frequently requested by bots

  - Slow-loading pages with long response times

  - Crawl budget wastage on non-important URLs

- Optimize your website’s structure based on findings.
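
If you don't have a dedicated log analyzer, a short script can surface the errors Googlebot keeps hitting. The sketch below assumes your server writes the common "combined" access log format used by Apache and Nginx defaults; the regular expression and the function name `googlebot_errors` are illustrative and may need adjusting for your log layout. Keep in mind that the user-agent string can be spoofed, so matching on "Googlebot" alone does not prove a request came from Google.

```python
import re
from collections import Counter

# Matches the request, status, and user agent of a combined-log-format line.
LOG_PATTERN = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_errors(log_lines):
    """Count 4xx/5xx responses served to requests whose user agent mentions Googlebot."""
    errors = Counter()
    for line in log_lines:
        match = LOG_PATTERN.search(line)
        if not match or "Googlebot" not in match["agent"]:
            continue
        status = int(match["status"])
        if status >= 400:
            errors[(match["path"], status)] += 1
    return errors
```

Passing an open log file to `googlebot_errors` and calling `.most_common(20)` on the result gives a short list of the URLs where crawl budget is most visibly being wasted.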

Pro Tip: If Google is crawling unnecessary pages (e.g., admin URLs, internal search pages), use robots.txt or noindex directives to guide bots efficiently.

Manually Checking Your Website for Crawl Issues

Beyond automated tools, a manual audit can help uncover crawl issues missed by software.

Checklist for a Manual Crawlability Audit

- Check robots.txt (Ensure important pages are not blocked)

- Verify XML Sitemap (All crucial pages should be included)

- Test Mobile Friendliness

- Examine Page Speed (Google PageSpeed Insights)

- Check Internal Linking (Ensure pages are properly connected)

Crawl errors can silently harm your SEO performance if left unchecked.

By leveraging Google Search Console, SEO audit tools, log file analysis, and manual checks, you can detect and resolve crawlability issues before they impact your rankings.

How to Fix Crawl Errors for Better SEO

Now that you know how to find crawl errors, it’s time to fix them.

Addressing crawl issues ensures your website is fully accessible to search engines, improving your chances of ranking higher in search results.

Below, we’ll go through actionable solutions for each type of crawl error.

Fixing Site-Wide Errors (Critical Issues)

Problem: Googlebot cannot resolve your domain due to DNS configuration issues.

Solution:

- Check your domain’s status using Google Dig.

- Contact your hosting provider to confirm DNS settings are correct.

- Ensure your domain registration hasn’t expired.

- Reduce DNS lookup time by switching to a faster managed DNS provider such as Cloudflare DNS or Google Cloud DNS.

Fixing Server Errors (5XX Status Codes)

Problem: Googlebot tries to access your site but encounters server-related issues (e.g., 500 Internal Server Error, 502 Bad Gateway).

Solution:

- Check your server logs to identify the cause of failures.

- Optimize server response time by upgrading to a faster hosting plan.

- Reduce server load by compressing images and enabling caching (use CDN services like Cloudflare).

- Ensure PHP, databases, and CMS plugins are up to date.

- If using WordPress, disable conflicting plugins and test performance.

Fixing Robots.txt Errors

Problem: Important pages are blocked by robots.txt, preventing Google from crawling them.

Solution:

- Open your robots.txt file (yourdomain.com/robots.txt).

- Ensure you’re not blocking necessary pages. Example of incorrect blocking:

Disallow: /

This prevents Google from crawling the entire website. Remove it if unintended.

- Use the robots.txt report in Google Search Console (the replacement for the retired robots.txt Tester) to verify your settings.

- If Googlebot is blocked, change the file to:

User-agent: Googlebot

Allow: /

Now, let’s look at how you can fix URL-specific errors.

Fixing 404 Not Found Errors

Problem: A page is missing, causing users and search engines to land on a 404 error page.

Solution:

- Redirect deleted pages to relevant alternatives using 301 redirects.

- Update any broken internal links pointing to 404 pages.

- Use Google Search Console to find and fix affected URLs under Indexing > Pages > Not found (404).

Best Practice: If a page is permanently gone and has no relevant alternative, let it return a 410 Gone status instead of a 404 to signal permanent removal.

Fixing Soft 404 Errors

Problem: A page is thin or empty but still returns a 200 OK status instead of a 404 error.

Solution:

- Ensure the page has valuable content before keeping it indexed.

- If the page is unnecessary, set it to 404 or 410 status.

- Use Google’s URL Inspection Tool to check whether Google recognizes the page as a Soft 404.

Fixing 403 Forbidden Errors

Problem: Googlebot is blocked from accessing certain pages due to permission restrictions.

Solution:

- Adjust file and folder permissions to allow bot access (chmod 644 for files, 755 for directories).

- Ensure Googlebot is not blocked by firewall settings or security plugins.

- If the page requires login credentials, add an exception for Googlebot or allow guest access for indexing.
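
To find files that deviate from the 644/755 pattern mentioned above, you could scan your web root with a script like the sketch below. The function names are hypothetical, and the check assumes your web server reads files through the "others" permission bits; if the server runs as the file owner or group, stricter modes can still work, so treat any hits as leads rather than confirmed problems.

```python
import os
import stat


def mode_allows_serving(mode, is_directory):
    """Check the "others" permission bits that 644 (files) and 755 (directories) grant."""
    # Directories need read + execute (0o005) to be listed and traversed;
    # files only need read (0o004).
    required = 0o005 if is_directory else 0o004
    return (mode & required) == required


def find_restricted_paths(root):
    """Yield (path, octal mode) for entries under root that lack those bits."""
    for directory, _subdirs, filenames in os.walk(root):
        entries = [(directory, True)] + [
            (os.path.join(directory, name), False) for name in filenames
        ]
        for path, is_directory in entries:
            mode = stat.S_IMODE(os.stat(path).st_mode)
            if not mode_allows_serving(mode, is_directory):
                yield path, oct(mode)
```

Running `list(find_restricted_paths("/path/to/webroot"))` (with your own path substituted) lists the entries worth reviewing before you change any permissions.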

Fixing Redirect Errors (Loops and Chains)

Problem: Redirect loops prevent Google from reaching the final destination, or long redirect chains slow down crawling.

Solution:

- Use Screaming Frog SEO Spider to detect redirect loops or excessive redirect chains.

- Fix errors by pointing all redirects directly to the final destination instead of chaining multiple redirects together.

- Replace 302 temporary redirects with 301 permanent redirects where applicable.

Best Practice: Keep redirect chains under 2 hops to avoid crawl budget waste.

Fixing “Not Followed” and “Access Denied” Errors

Problem: Googlebot cannot follow certain links or is blocked from accessing important content.

Solution:

- Ensure internal links use proper HTML anchor tags (<a href="">) instead of JavaScript-based navigation.

- Remove unnecessary nofollow attributes from important pages.

- Allow Google to crawl CSS and JavaScript files by updating your robots.txt:

User-agent: Googlebot

Allow: /*.css$

Allow: /*.js$

Managing Crawl Budget to Prevent Future Errors

Problem: Googlebot spends too much time crawling unnecessary pages, leading to slow indexing of important content.

Solution:

- Block low-value pages in robots.txt (e.g., admin pages, internal search results).

- Use the “noindex” meta tag for pages that shouldn’t appear in search results:

<meta name="robots" content="noindex, follow">

- Improve site architecture so essential pages are no more than 3 clicks from the homepage.

- Reduce duplicate content by implementing canonical tags (rel="canonical").

Best Practice: Regularly check Google Search Console > Crawl Stats to monitor crawl frequency.

Crawl errors can negatively impact search rankings, waste your crawl budget, and prevent Google from properly indexing your website.

By proactively monitoring Google Search Console, conducting regular site audits, and optimizing your technical SEO, you can maintain a healthy, crawlable website that ranks higher in search results.

Preventing Future Crawl Errors and Monitoring Crawlability

Fixing crawl errors is just the beginning.

To ensure long-term success in SEO, you need a proactive approach to preventing future errors and monitoring your website’s crawlability.

This section will cover best practices to keep your site optimized for search engines while minimizing crawl issues.

Implement Regular Crawl Audits

Performing routine site audits helps detect crawl issues before they impact rankings. Set a schedule to check for errors using tools like:

- Google Search Console (Monthly) – Review the Why Pages Aren’t Indexed section of the Indexing > Pages report.

- Screaming Frog (Quarterly) – Run a deep crawl to detect broken links, redirects, and blocked resources.

- Ahrefs/Semrush Site Audit (Quarterly) – Check for technical SEO issues affecting crawlability.

- Log File Analysis (Annually) – Analyze crawl behavior to optimize your crawl budget.

Best Practice: Enable Google Search Console email notifications so you hear about critical crawl errors soon after Google detects them.

Optimize Website Structure for Better Crawling

A well-structured website makes it easier for search engines to crawl and index your content.

Best Practices for Site Architecture

- Ensure important pages are within 3 clicks from the homepage.

- Use a flat site structure (avoid deep nesting of pages).

- Improve internal linking to help crawlers discover pages faster.

- Use breadcrumbs to enhance site navigation for both users and search engines.

Example of an SEO-Friendly Site Structure:

Homepage → Category Page → Subcategory Page → Product/Article Page

Instead of:

Homepage → Category → Subcategory → Another Subcategory → Article (Too Deep!)

Pro Tip: Run an internal linking audit with Screaming Frog to find orphaned pages (pages with no inbound links).
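
The "3 clicks from the homepage" rule can be checked with a breadth-first search over your internal link graph. The sketch below assumes you already have that graph as a dictionary (for example, built from a crawl export); the function names and sample paths are hypothetical. It reports pages that cannot be reached from the homepage at all, which is a slightly broader set than strict orphans with zero inbound links.

```python
from collections import deque


def click_depths(links, homepage):
    """Breadth-first search over {page: [linked pages]}; returns {page: clicks from homepage}."""
    depths = {homepage: 0}
    queue = deque([homepage])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def audit_structure(links, homepage, max_clicks=3):
    """Return (pages deeper than max_clicks, pages unreachable from the homepage)."""
    depths = click_depths(links, homepage)
    all_pages = set(links) | {t for targets in links.values() for t in targets}
    too_deep = sorted(page for page, depth in depths.items() if depth > max_clicks)
    unreachable = sorted(all_pages - set(depths))
    return too_deep, unreachable
```

Because breadth-first search visits pages in order of distance, the first depth recorded for a page is its shortest click path, which is the number that matters for the 3-click guideline.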

Maintain an Updated XML Sitemap

A properly configured XML sitemap ensures that search engines can efficiently find and index your important pages.

Best Practices for XML Sitemaps

- Include only index-worthy pages (exclude duplicate or noindex pages).

- Keep your sitemap updated with new and removed pages.

- Submit your sitemap in Google Search Console under Sitemaps > Add New Sitemap.

- Use a dynamic sitemap generator if you publish new content frequently (WordPress users can use the Rank Math or Yoast SEO plugin).

Pro Tip: After submitting, check the Sitemaps report in Google Search Console for fetch and parsing errors.
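
You can also run basic checks on the sitemap file yourself. The sketch below parses a standard `<urlset>` sitemap with Python's `xml.etree.ElementTree` and flags duplicate entries and URLs you know are set to noindex. The function names are assumptions, you would supply the noindex list yourself, and sitemap index files (`<sitemapindex>`) are not handled here.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text):
    """Return every <loc> value from a <urlset> sitemap, in document order."""
    root = ET.fromstring(xml_text)
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]


def sitemap_issues(xml_text, noindex_urls):
    """Return (duplicate URLs, listed URLs that are known to be noindex)."""
    urls = sitemap_urls(xml_text)
    duplicates = sorted({url for url in urls if urls.count(url) > 1})
    should_not_be_listed = sorted(set(urls) & set(noindex_urls))
    return duplicates, should_not_be_listed
```

Both returned lists should ideally be empty; anything in the second list is a page that the sitemap asks Google to index while the page itself says not to.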

Ensure Fast Page Loading Speeds

Slow-loading pages can cause crawl delays, leading to incomplete indexing. Google’s Core Web Vitals metrics, including Cumulative Layout Shift (CLS) and Largest Contentful Paint (LCP), are now ranking factors, making speed optimization more critical than ever.

How to Improve Page Speed

- Enable GZIP compression to reduce page size.

- Optimize images using next-gen formats like WebP.

- Minimize HTTP requests by reducing unnecessary scripts and CSS files.

- Use a Content Delivery Network (CDN) like Cloudflare or AWS.

- Reduce server response times by upgrading to a better hosting provider.

Best Practice: Test your website’s speed using Google PageSpeed Insights and aim for an LCP under 2.5 seconds.

Improve Robots.txt and Crawl Budget Management

Google allocates a crawl budget to each website, which determines how many pages Googlebot will crawl in a given period.

If unnecessary pages consume your crawl budget, important pages may not be crawled efficiently.

How to Optimize Your Crawl Budget

- Block low-value pages in robots.txt (e.g., admin pages, search results, login pages).

- Avoid duplicate content by using canonical tags (rel="canonical").

- Use “nofollow” on unimportant links to prevent Google from wasting crawl budget.

- Handle unnecessary URL parameters with canonical tags or robots.txt rules; the URL Parameters tool in Google Search Console was retired in 2022.

- Fix broken links to prevent wasted crawl attempts on non-existent pages.

Example of an Optimized robots.txt File:

User-agent: Googlebot

Disallow: /wp-admin/

Disallow: /search?q=

Allow: /

Pro Tip: Check how Googlebot is crawling your site using Google Search Console > Crawl Stats.

Monitor Google Search Console for Indexing Issues

Google Search Console provides ongoing insights into how well Google is crawling and indexing your site.

What to Monitor in GSC

- Page Indexing Report (Indexing > Pages): Identify pages that aren’t being indexed and understand why.

- Crawl Stats Report: Track Googlebot’s activity on your site.

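- Mobile Usability: Ensure that your site is mobile-friendly for better rankings. Google retired the dedicated Mobile Usability report in December 2023, so use a tool such as Lighthouse for this check.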

- Security and Manual Actions Report: Check for Google penalties or security issues.

Best Practice: Set up Search Console notifications to get alerted about indexing problems.

Keep Your Website Free of Broken Links

Broken links (404 errors) waste crawl budget and frustrate users. Google prioritizes well-maintained, accessible websites in search rankings.

How to Monitor and Fix Broken Links

- Use Screaming Frog SEO Spider to find broken links.

- Fix or redirect 404 pages using 301 redirects.

- Update internal links pointing to deleted pages.

- Check external backlinks and contact webmasters to fix broken links pointing to your site.

Pro Tip: Use Ahrefs’ Broken Link Checker to find and reclaim lost backlinks.
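
Once a crawl has given you status codes, a small script can tell you which pages need their links updated. The sketch below assumes two inputs you would build from your own crawl data: a map of pages to the links they contain, and a map of URLs to HTTP status codes. The function name and sample paths are hypothetical.

```python
def broken_link_report(links, status_codes):
    """Map each broken target URL to the pages that link to it.

    links: {source page: [target URLs]}; status_codes: {URL: HTTP status}.
    """
    report = {}
    for source, targets in links.items():
        for target in targets:
            # Treat 4xx/5xx as broken; URLs with no recorded status are skipped, not guessed.
            if status_codes.get(target, 200) >= 400:
                report.setdefault(target, []).append(source)
    return report


# Hypothetical crawl data, for illustration only.
LINKS = {"/": ["/about", "/old-offer"], "/blog": ["/old-offer", "/contact"]}
STATUS = {"/about": 200, "/old-offer": 404, "/contact": 200}

print(broken_link_report(LINKS, STATUS))
# prints {'/old-offer': ['/', '/blog']}
```

Grouping by broken target rather than by source page makes it easy to decide once per URL whether to restore the page, add a 301 redirect, or edit every linking page.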

Final Thoughts

Crawl errors can negatively impact rankings and prevent Google from properly indexing your content.

By following proactive monitoring strategies, optimizing site structure and speed, and managing your crawl budget efficiently, you can ensure search engines index your most valuable pages.

Key Takeaways:

- Regularly check Google Search Console for crawl errors.

- Optimize internal linking and site structure for better crawlability.

- Use a dynamic XML sitemap to help Google find important pages.

- Monitor page speed and server uptime to prevent crawl delays.

- Fix broken links and avoid redirect chains to optimize crawl efficiency.

By staying ahead of crawl issues, your website will remain search engine-friendly, leading to better indexing, higher rankings, and increased organic traffic.

FAQs

1. What are crawl errors, and why do they matter for SEO?

Crawl errors occur when search engine bots, like Googlebot, try to access a webpage but fail due to technical issues. They matter because pages that cannot be crawled may not be indexed, which costs rankings, wastes crawl budget, and frustrates users.

2. How can I check for crawl errors on my website?

- Go to Indexing > Pages in GSC.

- Review the section labeled Why Pages Aren’t Indexed to identify issues.

- Use the URL Inspection Tool to check specific pages for crawlability issues.

Additionally, tools like Screaming Frog SEO Spider, Ahrefs Site Audit, and Semrush can help detect crawl issues such as broken links, redirect loops, and blocked pages.

3. How do I fix 404 errors and prevent them from affecting my rankings?

- Use 301 redirects for deleted or moved pages, pointing them to relevant existing content.

- Update internal links to ensure they do not lead to broken pages.

- Check for broken backlinks from external websites and request updates or implement redirects.

- If the page is permanently removed with no replacement, let it return a 410 Gone status instead of 404.

4. What is the best way to prevent future crawl errors?

- Perform regular crawl audits using Google Search Console and tools like Screaming Frog.

- Optimize your robots.txt file to avoid blocking essential pages.

- Maintain an up-to-date XML sitemap to help search engines find important pages.

- Fix redirect chains and broken links promptly.

- Monitor your site speed and server uptime to prevent Googlebot timeouts.

Regularly maintaining your website’s technical SEO will ensure smooth crawlability and improved search rankings.