- JavaScript SEO involves optimizing JavaScript-heavy websites for search engines.

- Search engines like Google process JavaScript pages in two stages (crawling, then rendering), which can delay a site’s visibility.

- Common problems include blocked JavaScript files, unlinked pages, and slow script execution.

- Solutions include using standard HTML <a> tags for internal linking and optimizing file sizes.

- Advanced strategies involve using Edge SEO and choosing Server-Side Rendering (SSR) for better performance.

JavaScript has transformed how websites function, making them more dynamic, interactive, and engaging.

JavaScript powers a significant portion of the modern web, from single-page applications (SPAs) to advanced animations and real-time updates.

Over 98% of all websites use JavaScript in some capacity, according to W3Techs.

However, as much as JavaScript enhances user experience, it also introduces unique challenges for search engine optimization (SEO).

Unlike traditional HTML-based websites, JavaScript-heavy sites require additional processing by search engines, making it harder for them to crawl, render, and index content efficiently.

A study by Onely found that Google may take days or even weeks to index JavaScript content, significantly impacting organic visibility.

This is where Technical SEO for JavaScript comes into play. If your JavaScript isn’t optimized correctly, search engines may fail to recognize key content, leading to lower rankings, decreased traffic, and poor user engagement.

This guide will walk you through everything you need to know about Technical SEO for JavaScript, including:

- How search engines crawl, render, and index JavaScript

- Common JavaScript SEO challenges and how to fix them

- Best practices to ensure Google can access and understand your content

- Advanced JavaScript SEO techniques to enhance performance and rankings

By the end, you’ll have a solid, actionable strategy to optimize your JavaScript-powered website for better search rankings, faster indexing, and improved organic traffic.

Let’s get started.


Why Should You Care About JavaScript SEO?

Many major websites rely on JavaScript frameworks like React, Angular, and Vue.js, and many of them run into SEO pitfalls such as delayed indexing, poor Core Web Vitals scores, and wasted crawl budget.

Even Google’s developers have acknowledged that JavaScript poses technical challenges for SEO, making it crucial for businesses to adopt best practices to ensure their content remains visible in search results.

Here’s why JavaScript SEO matters:

- More than 50% of Web Pages Use JavaScript for Critical Content. That content is invisible if Google can’t crawl or render it correctly.

- Google renders JavaScript in a Two-Stage Process, leading to delays in indexing compared to traditional HTML.

- JavaScript Can Negatively Affect Page Speed & Core Web Vitals, impacting rankings since Google considers page experience a key ranking factor.

- JavaScript SEO is No Longer Optional – With Google’s shift toward mobile-first indexing and JavaScript-heavy frameworks dominating web development, understanding how to optimize JavaScript for search engines is critical.

What Is JavaScript SEO?

JavaScript SEO optimizes JavaScript-heavy websites to ensure search engines can efficiently crawl, render, and index their content.

Unlike traditional HTML-based websites, which search engines can quickly parse, JavaScript websites require additional processing that can create indexing delays, impact rankings, and even make key content invisible to Google.

Why Is JavaScript SEO Important?

As JavaScript frameworks like React, Angular, and Vue.js dominate modern web development, the risk of SEO issues has increased.

A study by Onely found that Googlebot often struggles to process JavaScript correctly, leading to incomplete indexing and ranking issues. This is particularly concerning because:

- JavaScript is used on 98% of all websites.

- Google renders JavaScript in a two-stage process, often leading to delayed indexing.

- Sites with unoptimized JavaScript can experience lower rankings and less organic traffic.

How JavaScript Impacts SEO

To understand why JavaScript SEO is critical, let’s break down how search engines typically interact with JavaScript-powered websites:

- Crawling – Googlebot visits your website and attempts to discover new and updated pages.

- Rendering – Google processes and executes JavaScript to see what content appears.

- Indexing – Google decides which content is valuable and stores it in its search index.

If JavaScript prevents Google from properly executing any of these steps, your content might never appear in search results, even if it’s visible to users in a browser.

JavaScript SEO vs. Traditional SEO

| Factor | Traditional SEO (HTML) | JavaScript SEO |
|---|---|---|
| Crawlability | Google can read the HTML as soon as it is fetched | Google may need extra processing to execute JavaScript |
| Rendering | No JavaScript rendering needed to see the content | Requires Google to render JavaScript before the content can be indexed |
| Indexing Speed | Typically faster | Often delayed |
| Technical Complexity | Simple HTML optimizations | Requires server-side or dynamic rendering, pre-rendering, or structured data |

JavaScript-heavy websites can experience longer indexing times, missed content, and ranking fluctuations without proper optimization.

What Happens If JavaScript SEO Is Ignored?

Ignoring JavaScript SEO can lead to significant drops in organic visibility. Here’s what could go wrong:

- Google Fails to Index Key Content – If JavaScript blocks essential content from being crawled, it won’t appear in search results.

- Slow Load Times Affect Rankings – Heavy JavaScript execution slows rendering, hurting Core Web Vitals and SEO performance.

- Duplicate Content Issues – Poor JavaScript implementations can create multiple versions of the same page, causing canonicalization issues.

- JavaScript Redirects Cause Confusion – If implemented incorrectly, Googlebot may not follow JavaScript-based redirects properly.

Who Needs JavaScript SEO?

JavaScript SEO is essential if your website uses React, Angular, Vue.js, or any other JavaScript framework. It’s particularly crucial for:

- E-commerce sites with JavaScript-based product filters and dynamically generated content

- Single Page Applications (SPAs) where content loads dynamically without full-page refreshes

- News and content publishers using JavaScript to enhance interactivity

- Web apps and SaaS platforms relying on JavaScript-heavy functionality

By implementing JavaScript SEO best practices, you can ensure that your website remains search-friendly, ranks higher, and continues to attract organic traffic.


How Google Crawls, Renders, and Indexes JavaScript

To optimize JavaScript SEO effectively, you must understand how Googlebot processes JavaScript content. Unlike traditional HTML-based websites, where Google can instantly crawl and index pages, JavaScript requires extra steps, making the process more complex and sometimes delayed.

Google follows a three-stage process to interpret JavaScript content:

- Crawling – Googlebot discovers URLs and fetches the initial HTML document.

- Rendering – Google executes JavaScript to display additional content.

- Indexing – Google stores the rendered content in its index for ranking.

If these steps fail, your content may not be indexed appropriately, leading to lower rankings and visibility issues.

Stage 1: Crawling – Can Google Find Your JavaScript Content?

When Googlebot visits your website, it fetches the initial HTML source code. This stage is straightforward for traditional HTML sites but becomes more complex for JavaScript-heavy pages.

Potential Issues in JavaScript Crawling:

- Blocked JavaScript Files – If your robots.txt file blocks JavaScript files, Google can’t fetch or execute them.

- Unlinked Pages – Google may struggle to discover JavaScript-generated pages that aren’t linked via standard `<a>` tags.

- Heavy JavaScript Files – Large scripts slow down crawling, affecting Google’s ability to fetch all content efficiently.

How to Ensure Google Can Crawl Your JavaScript Content:

- Use the Google Search Console URL Inspection Tool to check what Google sees.

- Make sure JavaScript files aren’t blocked in robots.txt.

- Use internal linking with standard HTML `<a>` tags for better discoverability.

- Optimize JavaScript file sizes to improve crawling efficiency.

Stage 2: Rendering – Can Google See Your JavaScript Content?

Rendering is the most critical step for JavaScript SEO.

Unlike static HTML, where content is immediately available in the source code, JavaScript often loads content dynamically, meaning Googlebot has to execute JavaScript before it can “see” the content.

How Google Renders JavaScript Pages:

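- Googlebot downloads the HTML file and checks for any `<script>` tags.

- Google adds the page to a render queue. According to Google’s documentation, pages that return an HTTP 200 status are queued for rendering unless a robots meta tag or header tells Google not to index them.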

- Google executes JavaScript in a headless Chromium browser to generate the final, rendered version of the page.

- Google indexes the rendered content, but this can take additional time.

Why Rendering Can Delay Indexing:

- Googlebot’s Rendering Queue – Google processes JavaScript separately, which means content might not be indexed immediately.

- Heavy JavaScript Execution – Rendering takes longer if your scripts are too complex or resource-heavy.

- Client-Side Rendering (CSR) Issues – If JavaScript content loads dynamically but isn’t appropriately cached, Google may not index it correctly.

How to Ensure Google Can Render JavaScript Properly:

- Use Google’s Rich Results Test, which shows the rendered HTML, to check if JavaScript content is visible.

- Run the Google Search Console URL Inspection Tool to see what Googlebot renders.

- Consider Server-Side Rendering (SSR) or Pre-Rendering to speed up the process.

- Minimize JavaScript complexity to reduce processing time.

Stage 3: Indexing – Is Your JavaScript Content Getting Stored in Google’s Index?

Once Google successfully crawls and renders a JavaScript page, the next step is indexing, where Google adds the content to its search database.

However, JavaScript-heavy websites often face delays or incomplete indexing, negatively impacting search rankings.

Common JavaScript Indexing Problems:

- Delayed Indexing – Google may take days or weeks to index JavaScript content, reducing visibility.

- Missing Content – Some JavaScript-loaded elements may never get indexed, especially if they require user interactions.

- SEO Metadata Not Applied Properly – If JavaScript overrides `<title>`, `<meta>` tags, or canonical URLs after rendering, Google might ignore them.

How to Ensure JavaScript Content Gets Indexed:

- Check What Google Rendered – Google has retired the `cache:` search operator, so use the URL Inspection Tool’s “View Crawled Page” option to see if the rendered content matches your expectations.

- Use the “View Rendered Source” Tool – Compare the initial HTML source vs. rendered content.

- Make SEO Metadata Static – Instead of relying on JavaScript to set titles and meta descriptions, include them in the original HTML.

- Avoid JavaScript-Generated Links for Navigation – Use standard `<a href>` links instead of `onclick` JavaScript events.
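The raw-versus-rendered comparison can be scripted so you can repeat it across many pages. The TypeScript sketch below assumes you already have both versions as strings (for example, View Page Source for the raw HTML and the HTML shown by the URL Inspection Tool for the rendered version); `visibleText` and `findJsOnlyPhrases` are hypothetical helpers, and the regex-based tag stripping is a rough approximation, not a real HTML parser.

```typescript
// Rough visible-text extraction: remove <script>/<style> blocks, strip tags,
// collapse whitespace, and lowercase for case-insensitive matching.
// An approximation for spot checks, not a real HTML parser.
function visibleText(html: string): string {
  return html
    .replace(/<script\b[\s\S]*?<\/script>/gi, " ")
    .replace(/<style\b[\s\S]*?<\/style>/gi, " ")
    .replace(/<[^>]+>/g, " ")
    .replace(/\s+/g, " ")
    .trim()
    .toLowerCase();
}

// Return the key phrases present in the rendered HTML but missing from the raw HTML,
// i.e. content that only exists after JavaScript runs.
function findJsOnlyPhrases(rawHtml: string, renderedHtml: string, phrases: string[]): string[] {
  const raw = visibleText(rawHtml);
  const rendered = visibleText(renderedHtml);
  return phrases.filter((phrase) => {
    const needle = phrase.toLowerCase();
    return rendered.includes(needle) && !raw.includes(needle);
  });
}

// Hypothetical example: a client-side rendered shell vs. what the browser ends up with.
const rawHtml = '<html><body><div id="app"></div><script src="/app.js"></script></body></html>';
const renderedHtml = '<html><body><div id="app"><h1>Blue Widget</h1><p>Free shipping</p></div></body></html>';

// Logs the two phrases that only appear after rendering; "Out of stock" is on neither page.
console.log(findJsOnlyPhrases(rawHtml, renderedHtml, ["Blue Widget", "Free shipping", "Out of stock"]));
```

Any phrase this reports is content Google can only see after the rendering stage, which makes it a candidate for moving into the initial HTML.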

Google’s Two-Wave Indexing Process for JavaScript

Googlebot doesn’t always process JavaScript immediately. Instead, it uses a two-wave indexing approach:

- First Wave (Initial Crawling) – Googlebot fetches and indexes raw HTML but may not render JavaScript immediately.

- Second Wave (Rendering Queue) – Google processes JavaScript later, rendering content and updating the index.

Why This Matters:

If your site relies heavily on JavaScript for critical content, it could take days or weeks before Google fully indexes your pages, affecting rankings.

How to Optimize for Faster Indexing:

- Use Server-Side Rendering (SSR) or Pre-Rendering to serve fully rendered content.

- Prioritize important content in static HTML (e.g., key headings, meta tags, navigation).

- Reduce JavaScript dependencies to improve load times and crawl efficiency.

In short:

Google’s ability to process JavaScript-heavy websites has improved significantly, but challenges still exist.

If your content relies on JavaScript but isn’t optimized, it may remain unindexed or delayed, leading to lower rankings and reduced organic traffic.

To ensure your JavaScript content is fully visible and indexed, you should:

- Make key content available in the initial HTML whenever possible.

- Use server-side rendering (SSR) or pre-rendering for faster indexing.

- Regularly test how Googlebot sees and processes your pages.

- Monitor indexing delays and adjust your strategy accordingly.


JavaScript Rendering Methods: Which Works Best for SEO?

Rendering is crucial to JavaScript SEO because it determines how and when content becomes visible to search engines and users.

If a website’s JavaScript content isn’t rendered correctly, Google may fail to index it, leading to poor search rankings and visibility issues.

There are three main JavaScript rendering methods:

- Server-Side Rendering (SSR) – JavaScript is executed on the server before sending HTML to the browser.

- Client-Side Rendering (CSR) – JavaScript is executed in the user’s browser after the initial HTML loads.

- Dynamic Rendering (Hybrid Approach) – A combination of SSR and CSR, where a pre-rendered version is served to search engines while users get the full JavaScript experience.

Let’s break down how each rendering method works, its impact on SEO, and the best practices for implementation.


Server-Side Rendering (SSR) – Best for SEO

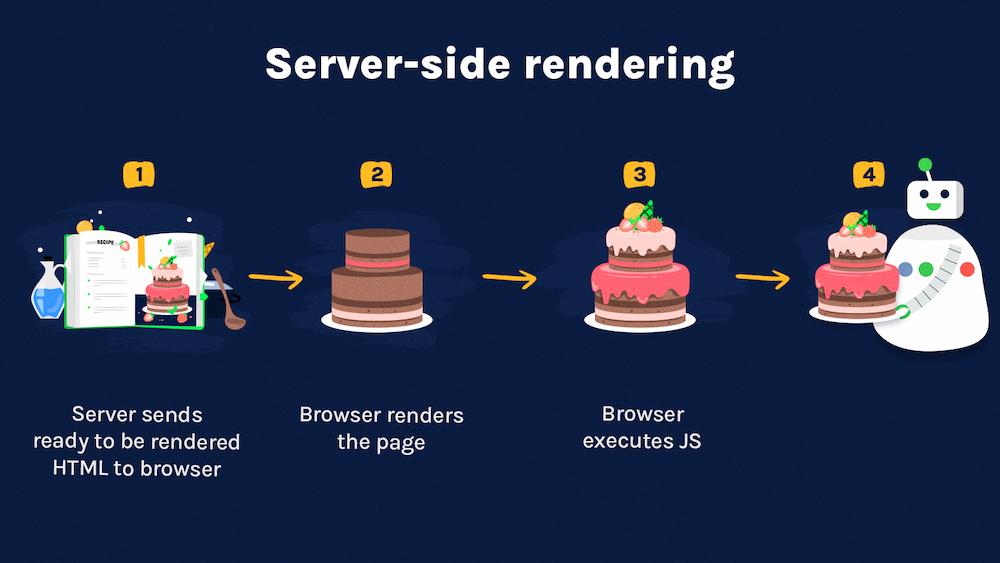

Server-Side Rendering: a visual by Onely

Here’s how it works:

- The server processes JavaScript before sending the fully rendered HTML to the browser.

- Googlebot and users receive a complete, SEO-friendly page without needing extra processing.
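As a minimal sketch of the idea (not how Next.js, Nuxt.js, or any other framework implements it), the server-side function below builds the complete HTML for a product page before responding, so the title, description, and body text are already in the document Googlebot fetches. The `Product` shape and `renderProductPage` function are hypothetical.

```typescript
// Hypothetical data shape for the page being rendered.
interface Product {
  name: string;
  description: string;
  url: string;
}

// Escape characters that are significant in HTML text and attribute values.
function escapeHtml(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// SSR in its simplest form: the server produces the finished HTML, so crawlers
// and users receive the content without waiting for client-side JavaScript.
function renderProductPage(p: Product): string {
  const name = escapeHtml(p.name);
  const description = escapeHtml(p.description);
  return [
    "<!doctype html>",
    "<html>",
    "<head>",
    `<title>${name}</title>`,
    `<meta name="description" content="${description}">`,
    `<link rel="canonical" href="${escapeHtml(p.url)}">`,
    "</head>",
    "<body>",
    `<h1>${name}</h1>`,
    `<p>${description}</p>`,
    // Interactivity can still be added afterwards by a deferred client script.
    '<script src="/app.js" defer></script>',
    "</body>",
    "</html>",
  ].join("\n");
}

const html = renderProductPage({
  name: "Blue Widget",
  description: "A sturdy widget in blue & white",
  url: "https://www.example.com/products/blue-widget",
});
console.log(html);
```

In practice a framework handles this step and adds hydration; the point of the sketch is only that the HTML response is complete before any client-side JavaScript runs.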

SEO Advantages of SSR

- Fast Indexing – Since Google receives a fully rendered page, indexing the content doesn’t depend on the rendering queue.

- Better Core Web Vitals – Faster initial loads can improve Page Experience metrics such as Largest Contentful Paint.

- Improved Crawl Budget Efficiency – Googlebot needs less rendering work to see your content, saving crawl resources.

Potential Drawbacks

- Higher Server Load – Rendering JavaScript on the server can increase hosting costs.

- More Complex Setup – Typically requires a server runtime such as Node.js and a framework such as Next.js or Nuxt.js to manage SSR properly.

Best Practices for SSR SEO Optimization

- Use caching to reduce server load.

- Optimize server response time to avoid slow page loads.

- Implement lazy loading for non-critical JavaScript elements.

- Use Edge SEO techniques like Cloudflare Workers to improve performance.

Recommended for:

- E-commerce websites

- News and media platforms

- Web applications that need real-time SEO optimization


Client-Side Rendering (CSR) – Risky for SEO Without Optimization

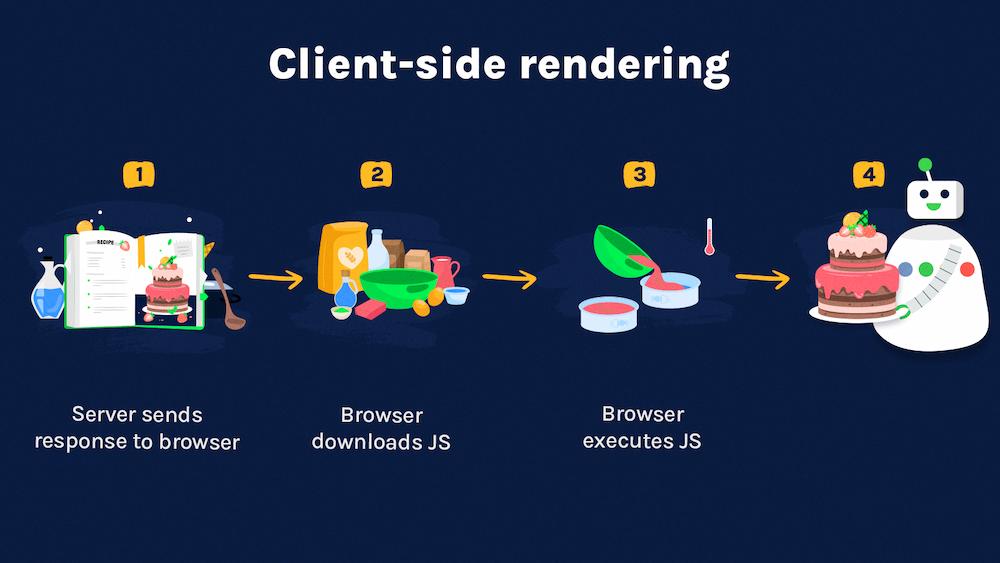

Client-Side Rendering: a visual by Onely

Here’s how it works:

- The browser receives a bare HTML shell and executes JavaScript to load content dynamically.

- Googlebot must process JavaScript separately before indexing the content.

SEO Challenges of CSR

- Delayed Indexing – Since content loads dynamically, Googlebot must return later to render it.

- High JavaScript Processing Load – Google may drop content if rendering takes too long.

- Poor Performance Scores – CSR-heavy sites often fail Core Web Vitals assessments.

How to Make CSR SEO-Friendly

- Use “Isomorphic Rendering” (Hybrid SSR & CSR) – Pages initially load with basic SSR, and additional elements are handled via CSR.

- Implement Pre-Rendering – Generate static HTML snapshots for Googlebot to ensure proper indexing.

- Ensure SEO Metadata is Present in the Initial HTML – Avoid dynamically inserting `<title>`, `<meta name="description">`, and canonical tags after page load.

- Use Google’s Rendering Debug Tools – Regularly test how Google sees CSR content using Google Search Console’s URL Inspection Tool.
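A quick way to confirm that metadata is in the initial HTML is to check the raw server response rather than the live DOM. The TypeScript sketch below uses simple regular expressions, which is an assumption that works for spot checks but is not a substitute for a real HTML parser; `auditHeadTags` is a hypothetical helper.

```typescript
// Result of checking the raw (pre-JavaScript) HTML for key SEO tags.
interface HeadAudit {
  title: boolean;
  metaDescription: boolean;
  canonical: boolean;
}

// Check the HTML the server sent, before any script ran.
function auditHeadTags(rawHtml: string): HeadAudit {
  return {
    // A non-empty <title> element.
    title: /<title[^>]*>\s*[^<\s][^<]*<\/title>/i.test(rawHtml),
    // <meta name="description" content="...">, in either attribute order.
    metaDescription: /<meta\b(?=[^>]*\bname=["']description["'])(?=[^>]*\bcontent=["'][^"']+["'])[^>]*>/i.test(rawHtml),
    // <link rel="canonical" href="...">, in either attribute order.
    canonical: /<link\b(?=[^>]*\brel=["']canonical["'])(?=[^>]*\bhref=["'][^"']+["'])[^>]*>/i.test(rawHtml),
  };
}

// A typical client-side rendered shell: only a placeholder title is present.
const shell = `<html><head><title>Loading…</title></head><body><div id="root"></div></body></html>`;
console.log(auditHeadTags(shell)); // → { title: true, metaDescription: false, canonical: false }
```

The title check only confirms that a title exists; a placeholder such as “Loading…” still passes, so review the actual value as well.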

Recommended for:

- Web applications that require interactivity

- Single Page Applications (SPAs) where user experience is a priority

- Internal dashboards that don’t need SEO optimization


Dynamic Rendering – The Best Hybrid Approach

Here’s how it works:

- Users receive Client-Side Rendering (CSR) for a fast, interactive experience.

- Search engines receive a pre-rendered version of the page using a rendering tool like Rendertron, Puppeteer, or Prerender.io.

Why It’s a Good SEO Solution

- Best of Both Worlds – Users get fast, interactive pages, while Googlebot gets fully rendered HTML for quick indexing.

- Solves Google’s JavaScript Indexing Delays – Content is already pre-rendered, which reduces crawl and rendering issues.

- Supports Large-Scale Websites – Reduces server load compared to full SSR implementations.

Challenges of Dynamic Rendering

- Requires a Separate Rendering Infrastructure – Needs Node.js servers or cloud-based rendering solutions.

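- May Cause Content Discrepancies – If the pre-rendered and user versions don’t match, Google may flag the content as deceptive (cloaking).

- Not a Long-Term Fix – Google’s documentation now describes dynamic rendering as a workaround and recommends server-side rendering, static rendering, or hydration instead.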

Best Practices for Implementing Dynamic Rendering

- Use established pre-rendering tools such as Puppeteer or Prerender.io (Google’s Rendertron project is no longer maintained).

- Set up user-agent detection to serve the correct version to search engines vs. users.

- Regularly test with the URL Inspection Tool in Google Search Console to ensure rendered content is indexed correctly.
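The user-agent detection step might look like the TypeScript sketch below. The crawler token list is a deliberately short assumption, and `chooseVariant` is a hypothetical helper. Whichever variant is served, its content must match what users see, or the setup risks being treated as cloaking.

```typescript
// Illustrative, non-exhaustive crawler user-agent tokens (an assumption: maintain
// your own list, and verify important crawlers via reverse DNS, since UAs can be faked).
const BOT_TOKENS = ["googlebot", "bingbot", "duckduckbot", "yandex", "baiduspider"];

type Variant = "prerendered" | "client-side";

// Decide which version of the page to serve for a given User-Agent header.
function chooseVariant(userAgent: string | undefined): Variant {
  const ua = (userAgent ?? "").toLowerCase();
  return BOT_TOKENS.some((token) => ua.includes(token)) ? "prerendered" : "client-side";
}

console.log(chooseVariant("Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)")); // → prerendered
console.log(chooseVariant("Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/124.0 Safari/537.36")); // → client-side
```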

Recommended for:

- Enterprise-level websites with high traffic

- E-commerce platforms with dynamic filters and content updates

- News websites that need fast updates and SEO visibility

Which JavaScript Rendering Method Should You Choose?

| Rendering Method | SEO Impact | Pros | Cons | Best For |
|---|---|---|---|---|
| Server-Side Rendering (SSR) | ✅ Best for SEO | Fast indexing, no JavaScript execution required by Google, optimized for Core Web Vitals | Higher server load, complex setup | E-commerce, news, and large content sites |
| Client-Side Rendering (CSR) | ❌ Risky without optimizations | Lightweight on servers, better user interactivity | Slower indexing, Googlebot may miss content, and poor Core Web Vitals | Web apps, internal dashboards, SPAs |
| Dynamic Rendering | 🔄 Hybrid approach | Best of SSR & CSR, fast indexing, reduced server load | Requires additional infrastructure, needs careful setup to avoid cloaking | Large-scale websites, interactive content-heavy platforms |

The choice between SSR, CSR, and Dynamic Rendering depends on your website’s goals.

If SEO is your priority, Server-Side Rendering (SSR) or Dynamic Rendering is highly recommended.

To make sure your JavaScript content is fully indexed and optimized for search engines, follow these key takeaways:

- For SEO-focused websites → Use SSR or Dynamic Rendering to ensure fast indexing.

- For interactive web apps → Optimize CSR by pre-rendering critical content and using structured data.

- Regularly test how Google sees your pages → Use Google Search Console’s URL Inspection Tool and the Rich Results Test.


How to Make Your Website’s JavaScript Content SEO-Friendly

Now that we’ve covered how Google processes JavaScript and the different rendering methods, the next step is implementing best practices to ensure your JavaScript content is fully optimized for search engines.

A poorly optimized JavaScript-based website can lead to indexing delays, missing content, poor Core Web Vitals scores, and decreased rankings.

The good news? With the correct SEO strategies, you can overcome these challenges and ensure Google properly crawls, renders, and indexes your site.

Below are the most effective JavaScript SEO best practices that will help improve your website’s search visibility.


Use Google Search Console to Identify JavaScript Issues

Google provides several tools to help diagnose JavaScript SEO problems. The URL Inspection Tool in Google Search Console (GSC) is among the most important.

How to Use Google Search Console for JavaScript SEO

- Go to Google Search Console and enter your page URL in the URL Inspection Tool.

- Click “Test Live URL” to see what Googlebot sees.

- Check for discrepancies between the raw HTML and the rendered version of your page.

- Look for indexing issues, blocked resources, and rendering errors.

Common JavaScript SEO Issues You Might Find in GSC

- Googlebot can’t see JavaScript-loaded content – The rendered version lacks critical elements.

- Blocked JavaScript files – Your robots.txt file may prevent search engines from executing JavaScript.

- Slow loading times – Googlebot may not fully load the page if JavaScript execution is too slow.

Fix: If Google Search Console reports rendering issues, consider pre-rendering JavaScript content or switching to server-side rendering (SSR).

Ensure Google Can Index JavaScript Content

For search engines to index JavaScript-powered websites effectively, the content must be accessible and visible in the rendered HTML.

How to Check if Google Indexes Your JavaScript Content

- Perform a “site:” search in Google (e.g., `site:yourwebsite.com`) to see which pages Google has indexed.

- Search for a unique phrase from your JavaScript content in Google (e.g., “exact text from your page”) to check if it appears in search results.

- Use the URL Inspection Tool in Google Search Console to see what version of your page Google has stored, since Google has retired its cached view (`cache:yourwebsite.com`).

How to Fix JavaScript Indexing Issues

- Use Pre-Rendering – Serve a static HTML version to search engines.

- Place critical content in the initial HTML instead of relying on JavaScript to insert it.

- Avoid JavaScript-based navigation – Use standard `<a href>` links instead of JavaScript-generated links.

- Make sure your sitemap includes JavaScript-loaded pages to help Google discover them faster.
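If your pages are only reachable through client-side routes, generating the sitemap from the same route data the app uses helps keep it complete. Here is a minimal TypeScript sketch following the sitemaps.org XML format; the `SitemapEntry` shape, the `routes` list, and `buildSitemap` are hypothetical.

```typescript
interface SitemapEntry {
  loc: string;      // absolute URL of the page
  lastmod?: string; // optional W3C date, e.g. "2024-05-01"
}

// XML-escape characters that are not allowed raw inside element content.
function escapeXml(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// Build a sitemap document in the sitemaps.org 0.9 format.
function buildSitemap(entries: SitemapEntry[]): string {
  const urls = entries.map((e) => {
    const lastmod = e.lastmod ? `<lastmod>${escapeXml(e.lastmod)}</lastmod>` : "";
    return `  <url><loc>${escapeXml(e.loc)}</loc>${lastmod}</url>`;
  });
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
    ...urls,
    "</urlset>",
  ].join("\n");
}

// Hypothetical routes known to the client-side router.
const routes: SitemapEntry[] = [
  { loc: "https://www.example.com/", lastmod: "2024-05-01" },
  { loc: "https://www.example.com/view?id=42&lang=en" },
];
console.log(buildSitemap(routes));
```

List only the canonical version of each URL, so the sitemap doesn’t contradict your canonical tags.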


Optimize JavaScript Performance for SEO and Core Web Vitals

Google considers page experience a key ranking factor, and JavaScript-heavy websites often suffer from poor Core Web Vitals scores. Complex JavaScript files can slow down page speed, affecting user experience and rankings.

How to Improve JavaScript Performance for SEO

- Minimize JavaScript Execution Time – Reduce unnecessary scripts and optimize code efficiency.

- Defer Non-Essential JavaScript – Use the async or defer attributes to load scripts without blocking page rendering.

- Enable Browser Caching – Store JavaScript files locally on users’ devices for faster load times.

- Use Lazy Loading for Images & Non-Critical Elements – This prevents JavaScript from slowing down page load times.

- Reduce JavaScript Dependencies – The fewer external libraries and scripts, the better.

Use Google’s PageSpeed Insights to identify JavaScript-related performance issues and optimize accordingly.
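Browser caching works best when file names change whenever their contents change (content fingerprinting), so long cache lifetimes never serve stale code; Google’s JavaScript SEO documentation also recommends fingerprinting because its renderer may cache resources aggressively. The TypeScript sketch below uses a small non-cryptographic FNV-1a hash purely for illustration (bundlers usually generate content hashes for you); `fingerprint` and `cacheControlFor` are hypothetical helpers, and the header values are common choices rather than requirements.

```typescript
// 32-bit FNV-1a over UTF-16 code units, as 8 hex characters.
// Illustration only: real builds normally use the bundler's content hash.
function fnv1a(input: string): string {
  let hash = 0x811c9dc5;
  for (let i = 0; i < input.length; i++) {
    hash ^= input.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash.toString(16).padStart(8, "0");
}

// "main.js" + contents -> "main.<hash>.js": the name changes whenever the code does.
function fingerprint(fileName: string, contents: string): string {
  const dot = fileName.lastIndexOf(".");
  const base = dot === -1 ? fileName : fileName.slice(0, dot);
  const ext = dot === -1 ? "" : fileName.slice(dot);
  return `${base}.${fnv1a(contents)}${ext}`;
}

// Fingerprinted assets can be cached for a year; everything else is revalidated.
function cacheControlFor(path: string): string {
  return /\.[0-9a-f]{8}\.(js|css)$/.test(path)
    ? "public, max-age=31536000, immutable"
    : "no-cache";
}

const asset = fingerprint("main.js", "console.log('v1');");
console.log(asset, cacheControlFor(asset));
console.log(cacheControlFor("/index.html"));
```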


Implement Proper Internal Linking for JavaScript Pages

JavaScript-powered websites often generate dynamic links, but search engines may not recognize them as links unless they follow standard HTML linking structures.

Best Practices for Internal Linking in JavaScript Websites

- Use HTML `<a>` tags instead of JavaScript-based navigation (`onclick` events).

- Ensure all necessary pages are listed in your sitemap and accessible via internal links.

- Avoid using hash fragments (`#`) in URLs – Google doesn’t treat them as separate pages.
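To spot links Google may not follow, you can scan rendered HTML for anchors without a usable `href`. The TypeScript sketch below is a regex-based spot check (an assumption: a proper HTML parser would be more robust), and `classifyLinks` is a hypothetical helper. It flags `<a>` elements with no `href`, `javascript:` URLs, and fragment-only targets, none of which give a crawler a separate URL to fetch.

```typescript
interface LinkReport {
  crawlable: string[]; // href values a crawler can follow
  problems: string[];  // raw <a> tags that need a real href
}

// Scan HTML for <a> start tags and sort them into followable links and problem links.
function classifyLinks(html: string): LinkReport {
  const report: LinkReport = { crawlable: [], problems: [] };
  const anchorTag = /<a\b[^>]*>/gi;
  let match: RegExpExecArray | null;
  while ((match = anchorTag.exec(html)) !== null) {
    const tag = match[0];
    // Read a double- or single-quoted href value, if there is one.
    const hrefMatch = /\bhref\s*=\s*(?:"([^"]*)"|'([^']*)')/i.exec(tag);
    const href = hrefMatch ? (hrefMatch[1] ?? hrefMatch[2] ?? "").trim() : "";
    // No href, a fragment-only target, or a javascript: URL leaves nothing to crawl.
    if (href === "" || href.startsWith("#") || /^javascript:/i.test(href)) {
      report.problems.push(tag);
    } else {
      report.crawlable.push(href);
    }
  }
  return report;
}

const nav = `
  <a href="/products/shoes">Shoes</a>
  <a onclick="goTo('bags')">Bags</a>
  <a href="javascript:goTo('hats')">Hats</a>
  <a href="#">Sale</a>`;

// Logs one crawlable link ("/products/shoes") and three problem tags.
console.log(classifyLinks(nav));
```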


Use Structured Data for JavaScript Websites

Structured data helps search engines understand your content better and can improve click-through rates (CTR) with rich snippets.

How to Use Structured Data for JavaScript SEO

- Implement JSON-LD structured data for products, articles, and reviews.

- Use Google’s Rich Results Test to verify your structured data is valid.

- Make sure structured data is in the initial HTML to prevent rendering issues.
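One way to keep structured data in the initial HTML is to serialize it on the server. The TypeScript sketch below builds a schema.org `Product` object and embeds it in a `<script type="application/ld+json">` tag; `jsonLdScript` and the sample values are hypothetical. Escaping `<` keeps a value such as `</script>` from closing the tag early, and the output can then be checked with the Rich Results Test.

```typescript
// Serialize structured data for a JSON-LD script tag. Replacing "<" with its
// JSON Unicode escape stops strings like "</script>" from ending the element.
function jsonLdScript(data: Record<string, unknown>): string {
  const json = JSON.stringify(data).replace(/</g, "\\u003c");
  return `<script type="application/ld+json">${json}</script>`;
}

// Minimal schema.org Product markup with sample values.
const productLd = {
  "@context": "https://schema.org",
  "@type": "Product",
  name: "Blue Widget",
  description: "A sturdy widget in blue",
  offers: {
    "@type": "Offer",
    price: "19.99",
    priceCurrency: "USD",
    availability: "https://schema.org/InStock",
  },
};

console.log(jsonLdScript(productLd));
```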

Fix Common JavaScript SEO Issues Before They Hurt Rankings

Below are some of the most common JavaScript SEO issues and how to fix them:

| Issue | SEO Impact | Solution |
|---|---|---|
| Google can’t see JavaScript content | Content is not indexed | Use SSR or pre-rendering |
| Blocked JavaScript files | Googlebot can’t execute JS | Allow JavaScript in robots.txt |
| Slow rendering and indexing | Delayed rankings | Optimize JavaScript and improve load speed |
| JavaScript navigation instead of `<a>` links | Google can’t follow links | Use standard HTML `<a>` tags |
| Heavy JavaScript affecting Core Web Vitals | Lower rankings | Reduce script size, lazy load non-essential elements |

Optimizing JavaScript for SEO ensures Google can fully crawl, render, and index your content.

Following these best practices helps you avoid common pitfalls and improve your website’s search visibility. Just remember to:

- Check how Google sees your JavaScript content using Google Search Console.

- Ensure JavaScript-rendered content is indexable by serving key elements in HTML.

- Improve page speed by optimizing JavaScript execution and using async or defer.

- Use standard internal linking instead of JavaScript-based navigation.

- Implement structured data to enhance search visibility.

Implementing these JavaScript SEO strategies can ensure faster indexing, higher rankings, and a better user experience.


Common JavaScript SEO Issues and How to Avoid Them

Even with proper optimization, JavaScript-powered websites often face SEO challenges, leading to indexing delays, missing content, and ranking drops.

Identifying and fixing these issues is critical to ensuring Google can adequately crawl, render, and index your site.

Below, we break down the most common JavaScript SEO issues and provide actionable solutions to fix them.


Google Can’t See JavaScript-Generated Content

JavaScript-heavy pages may load important content dynamically, meaning Googlebot doesn’t see it during the initial crawl.

If your page relies entirely on JavaScript to insert headings, paragraphs, or product descriptions, Google may never index that content.

How to Check:

- Use Google’s URL Inspection Tool in Google Search Console.

- Compare the raw HTML source (View Page Source) vs. the rendered version (Inspect Element).

- Perform a “site:” search (`site:yourwebsite.com "exact phrase"`) to see if Google has indexed your content.

How to Fix It:

- Use Server-Side Rendering (SSR) or Pre-Rendering to deliver a fully rendered HTML page.

- Ensure critical content is available in the initial HTML source.

- If using Client-Side Rendering (CSR), implement dynamic rendering to serve a pre-rendered version to Googlebot.


Google Takes Too Long to Index JavaScript Pages

JavaScript-powered websites often experience delayed indexing because Google processes JavaScript in two waves:

- First wave: Googlebot crawls the raw HTML but may not execute JavaScript immediately.

- Second wave: Googlebot renders JavaScript pages later, delaying indexing.

This delay can hurt SEO because new pages or updates may take days or weeks to appear in search results.

How to Check:

- Use Google Search Console to check indexing status and render diagnostics.

- Search for your page on Google (`site:yourwebsite.com/page-url`).

- Use the URL Inspection Tool to see when Google last crawled the page and which version it indexed (Google’s `cache:` view is no longer available).

How to Fix It:

- Use pre-rendering or SSR to ensure Google receives fully rendered content.

- Reduce JavaScript execution time by optimizing script size and removing unused code.

- Add internal links to new pages so Googlebot finds them faster.

- Request indexing for the page with the URL Inspection Tool in Google Search Console.


JavaScript Blocks Search Engine Crawlers

Some sites, often through CMS or plugin defaults, block search engine crawlers from accessing key JavaScript resources.

If your site’s JavaScript is disallowed in the robots.txt file, Googlebot may not render and index content properly.

How to Check:

- Use Google Search Console’s Page indexing report (formerly the Coverage report) to see if pages are blocked.

- Look at your robots.txt file (`yourwebsite.com/robots.txt`) to check for rules such as `Disallow: /js/` or `Disallow: /wp-content/plugins/`.

How to Fix It:

- Ensure JavaScript and CSS files are not blocked in `robots.txt`.

- Use the URL Inspection Tool in Google Search Console (which replaced Fetch as Google) to see if Google can access JavaScript files.

- Avoid using `nofollow` on important links that load JavaScript-generated content.
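To check whether a specific script URL is blocked, the TypeScript sketch below implements a simplified version of the precedence Google documents for robots.txt: the most specific (longest) matching rule wins, `Allow` wins a tie, `*` matches any sequence, and a trailing `$` anchors the end. It is a sketch under stated assumptions (exact user-agent matching with a fallback to `*`, no percent-encoding handling), not a full parser; `rulesFor`, `patternToRegExp`, and `isAllowed` are hypothetical helpers.

```typescript
interface Rule {
  allow: boolean;
  path: string;
}

// Collect Allow/Disallow rules per user agent, then return the group for `agent`
// (exact match such as "googlebot", falling back to "*").
// Simplified: Sitemap, Crawl-delay, and percent-encoding are ignored.
function rulesFor(robotsTxt: string, agent: string): Rule[] {
  const groups = new Map<string, Rule[]>();
  let currentAgents: string[] = [];
  let lastWasAgent = false;
  for (const rawLine of robotsTxt.split(/\r?\n/)) {
    const line = rawLine.replace(/#.*$/, "").trim(); // drop comments
    const sep = line.indexOf(":");
    if (sep === -1) continue;
    const key = line.slice(0, sep).trim().toLowerCase();
    const value = line.slice(sep + 1).trim();
    if (key === "user-agent") {
      const ua = value.toLowerCase();
      // Consecutive User-agent lines share the rules that follow them.
      currentAgents = lastWasAgent ? [...currentAgents, ua] : [ua];
      if (!groups.has(ua)) groups.set(ua, []);
      lastWasAgent = true;
      continue;
    }
    lastWasAgent = false;
    // An empty Disallow value means "allow everything", so it adds no rule.
    if ((key === "allow" || key === "disallow") && value !== "") {
      for (const ua of currentAgents) {
        groups.get(ua)!.push({ allow: key === "allow", path: value });
      }
    }
  }
  return groups.get(agent.toLowerCase()) ?? groups.get("*") ?? [];
}

// "*" matches any sequence and a trailing "$" anchors the end; the rest is literal.
function patternToRegExp(pattern: string): RegExp {
  const anchored = pattern.endsWith("$");
  const body = anchored ? pattern.slice(0, -1) : pattern;
  const escaped = body
    .split("*")
    .map((part) => part.replace(/[.+?^${}()|[\]\\]/g, "\\$&"))
    .join(".*");
  return new RegExp("^" + escaped + (anchored ? "$" : ""));
}

// Longest matching rule wins; on a tie, Allow wins. No matching rule means allowed.
function isAllowed(robotsTxt: string, agent: string, path: string): boolean {
  let best: Rule | undefined;
  for (const rule of rulesFor(robotsTxt, agent)) {
    if (!patternToRegExp(rule.path).test(path)) continue;
    if (
      best === undefined ||
      rule.path.length > best.path.length ||
      (rule.path.length === best.path.length && rule.allow)
    ) {
      best = rule;
    }
  }
  return best === undefined || best.allow;
}

const robots = `
User-agent: *
Disallow: /js/
Allow: /js/app.js
Disallow: /wp-content/plugins/
`;
console.log(isAllowed(robots, "Googlebot", "/js/app.js")); // → true (longer Allow rule wins)
console.log(isAllowed(robots, "Googlebot", "/js/vendor.js")); // → false
console.log(isAllowed(robots, "Googlebot", "/css/site.css")); // → true (no rule matches)
```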


JavaScript-Based Navigation Prevents Google from Discovering Pages

Some websites use JavaScript to generate navigation menus or links dynamically.

Googlebot may not recognize these links, leading to poor internal linking and orphaned pages (pages with no internal links pointing to them).

How to Check:

- Disable JavaScript in your browser (Chrome DevTools > Disable JavaScript) and see if the navigation still works.

- Use Screaming Frog SEO Spider in JavaScript rendering mode to detect missing links.

How to Fix It:

- Use standard HTML `<a href>` links instead of JavaScript-based event listeners (`onclick`).

- Ensure all pages are included in the sitemap and adequately linked.

- Add an HTML-based navigation menu alongside JavaScript-powered navigation.


JavaScript Causes Duplicate Content Issues

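JavaScript-generated URLs (especially dynamic parameters like `?filter=red`) can create multiple versions of the same page, leading to duplicate content issues that confuse Google. Hash fragments (`#`) cause a related problem: Google ignores them, so different views behind a `#` collapse into a single URL.

How to Check: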

- Use Google Search Console’s Page indexing report (for example, the “Duplicate without user-selected canonical” status) to detect duplicate URLs.

- Check if multiple versions of the same page exist with different parameters (`yourwebsite.com/page`, `yourwebsite.com/page?variant=red`).

How to Fix It:

- Use canonical tags (`<link rel="canonical">`) to indicate the preferred URL version.

- Avoid using hash fragments (`#`) in URLs, as Google ignores them.

- Configure your CMS or e-commerce platform to prevent unnecessary duplicate URLs.
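Canonical tags work best when every variant of a page maps to one predictable URL. The TypeScript sketch below derives that URL by dropping the fragment and any query parameters not on an allowlist, then sorting what remains; which parameters genuinely change content (`KEEP_PARAMS` here) is an assumption you would set per site, and `canonicalUrl` is a hypothetical helper built on the standard `URL` and `URLSearchParams` classes.

```typescript
// Query parameters that genuinely change page content on this hypothetical site.
const KEEP_PARAMS = new Set(["page"]);

// Canonical form of a URL: no fragment, only allowlisted parameters, sorted by key
// so that parameter order never produces a "different" URL.
function canonicalUrl(input: string): string {
  const url = new URL(input);
  const kept: [string, string][] = [];
  url.searchParams.forEach((value, key) => {
    if (KEEP_PARAMS.has(key)) kept.push([key, value]);
  });
  kept.sort((a, b) => (a[0] < b[0] ? -1 : a[0] > b[0] ? 1 : 0));
  url.search = new URLSearchParams(kept).toString(); // "" removes the "?" entirely
  url.hash = "";
  return url.toString();
}

console.log(canonicalUrl("https://www.example.com/page?variant=red#reviews"));
// → https://www.example.com/page
console.log(`<link rel="canonical" href="${canonicalUrl("https://www.example.com/page?variant=red")}">`);
```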


Slow JavaScript Execution Hurts Core Web Vitals and SEO

Google prioritizes fast-loading websites as part of its Page Experience update. If your JavaScript files are too large or take too long to execute, your site’s Core Web Vitals scores may suffer, leading to lower rankings.

How to Check:

- Run Google PageSpeed Insights to analyze JavaScript execution time and performance scores.

- Use Lighthouse in Chrome DevTools to find JavaScript performance bottlenecks.

How to Fix It:

- Minimize JavaScript execution time by reducing file size and eliminating unused scripts.

- Use lazy loading to defer loading of non-essential JavaScript elements.

- Enable compression (Gzip or Brotli) to reduce JavaScript transfer sizes.

- Use a Content Delivery Network (CDN) to distribute JavaScript resources efficiently.


JavaScript Redirects Are Not Search-Friendly

Unlike traditional 301 redirects, Google may not always recognize JavaScript-based redirects. If done incorrectly, search engines might not follow the redirect and fail to pass ranking signals.

How to Check:

- Use Google’s URL Inspection Tool to check if the new URL is indexed.

- Inspect server logs to see if Googlebot is reaching the correct destination URL.

How to Fix It:

- Use server-side 301 redirects whenever possible instead of JavaScript-based redirects.

- If you must use JavaScript redirects, ensure they execute immediately and include a `<meta http-equiv="refresh">` fallback.

- Test with the URL Inspection Tool in Google Search Console to confirm the redirect is applied correctly.
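A server-side redirect means the HTTP response itself carries the 301 status and `Location` header, before any JavaScript runs. The TypeScript sketch below shows that decision as a framework-agnostic function; the redirect map and `resolveRedirect` are hypothetical, and in practice you would wire the result into your web server, framework, or CDN rules.

```typescript
// Hypothetical map of retired paths to their permanent replacements.
const REDIRECTS = new Map<string, string>([
  ["/old-shoes", "/products/shoes"],
  ["/blog/js-seo-2019", "/blog/javascript-seo"],
]);

interface RedirectResponse {
  status: 301;
  headers: { Location: string };
}

// Return a permanent redirect for known old paths, preserving the query string,
// or null when the request should be served normally.
function resolveRedirect(pathname: string, search = ""): RedirectResponse | null {
  const target = REDIRECTS.get(pathname);
  if (target === undefined) return null;
  return { status: 301, headers: { Location: target + search } };
}

console.log(resolveRedirect("/old-shoes", "?color=red"));
console.log(resolveRedirect("/products/shoes")); // → null
```

With Node’s built-in `http` module, for example, a non-null result maps onto `res.writeHead(301, { Location: ... })` followed by `res.end()`.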

Addressing these common JavaScript SEO issues can improve your website’s indexability, load speed, and search rankings.

In short:

- Ensure Google can see, render, and index JavaScript-powered content.

- Minimize JavaScript execution time to improve Core Web Vitals and SEO performance.

- Use pre-rendering or SSR to reduce indexing delays.

- Avoid JavaScript-based navigation issues by using standard <a href> links.

- Fix duplicate content problems with canonical tags and proper URL structuring.

Fixing these JavaScript SEO mistakes can significantly affect how well your site ranks in search engines.


Advanced JavaScript SEO Strategies to Stay Ahead

Now that we've covered the common pitfalls and how to fix them, it's time to take your JavaScript SEO further.

Advanced strategies can help you stay ahead of competitors, improve search rankings, and future-proof your site against Google’s evolving algorithms.

Here are some powerful JavaScript SEO techniques to enhance performance, indexing, and ranking success.


Using Edge SEO for Real-Time JavaScript Optimization

Edge SEO means optimizing your website's JavaScript and other SEO elements at the edge server level, before content reaches users or search engines.

Instead of modifying the core website code, Edge SEO allows you to deploy changes dynamically using serverless technologies like Cloudflare Workers, Fastly, or Akamai EdgeWorkers.

How Edge SEO Improves JavaScript SEO

- Faster Rendering and Indexing – Google receives a fully optimized version of the page at the edge.

- On-the-Fly Fixes – Implement meta tags, structured data, redirects, or canonical tags without modifying site code.

- SEO Testing Without Dev Delays – Deploy real-time SEO experiments without waiting for development cycles.

How to Implement Edge SEO for JavaScript Optimization

- Use Cloudflare Workers to modify HTML responses, headers, and scripts before they reach the browser.

- Add dynamic metadata and structured data at the edge without touching source code.

- Rewrite or redirect URLs at the edge so Googlebot always reaches clean, crawlable addresses.

- Use pre-rendering at the edge to deliver optimized HTML snapshots instantly.
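At its core, edge-side metadata injection takes the HTML the origin returns and inserts tags before </head>. This sketch shows that step as a pure TypeScript function. The HeadTags shape and the injectHeadTags name are assumptions for illustration. On Cloudflare, production code would more likely use the streaming HTMLRewriter API, and this string-based approach assumes the response contains a well-formed </head>.

```typescript
// Tags to add to a page at the edge (shape is illustrative).
interface HeadTags {
  description?: string;
  jsonLd?: Record<string, unknown>;
}

// Escape characters that would break out of a double-quoted HTML attribute.
function escapeHtmlAttribute(value: string): string {
  return value.replace(/&/g, "&amp;").replace(/"/g, "&quot;").replace(/</g, "&lt;").replace(/>/g, "&gt;");
}

// Insert a meta description and JSON-LD structured data just before </head>.
function injectHeadTags(html: string, tags: HeadTags): string {
  const additions: string[] = [];
  if (tags.description !== undefined) {
    additions.push(`<meta name="description" content="${escapeHtmlAttribute(tags.description)}">`);
  }
  if (tags.jsonLd !== undefined) {
    // Escape "<" so a value like "</script>" cannot close the script element early.
    const json = JSON.stringify(tags.jsonLd).replace(/</g, "\\u003c");
    additions.push(`<script type="application/ld+json">${json}</script>`);
  }
  const headEnd = html.search(/<\/head>/i);
  if (additions.length === 0 || headEnd === -1) {
    return html; // nothing to add, or no </head> to anchor on: leave the page untouched
  }
  return html.slice(0, headEnd) + additions.join("\n") + html.slice(headEnd);
}
```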

Best For:

- Large-scale websites

- JavaScript-heavy web applications

- E-commerce platforms with frequent content updates


Cloudflare Workers and SEO Benefits

Cloudflare Workers allow you to modify, cache, and optimize JavaScript content at the network edge, improving performance and indexing.

Why Cloudflare Workers Are Great for SEO

- Faster Page Load Times – Improves Core Web Vitals by serving cached responses from servers close to the user instead of the origin.

- Better Indexing – Ensures pre-rendered content is served instantly to search engines.

- Minimizes JavaScript Execution Delay – Helps avoid two-wave indexing delays.

How to Optimize JavaScript SEO with Cloudflare Workers

- Intercept and modify HTML and JavaScript responses before they reach users and Googlebot.

- Use edge caching to store rendered HTML versions for better indexing.

- Dynamically insert SEO-friendly elements like meta descriptions, Open Graph tags, and structured data.
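Here is a sketch of a Cloudflare Worker in module syntax. It passes non-HTML files through untouched and adds an Open Graph title to HTML pages. The OG_TITLES map and the handleRequest name are hypothetical. The origin fetch is passed in as a parameter so the logic can also be tested outside Cloudflare. The cf options cacheEverything and cacheTtl are Cloudflare-specific request settings that ask Cloudflare's cache to store the origin response, and other runtimes ignore them. Caching everything is only appropriate for pages that are identical for every visitor.

```typescript
// Hypothetical per-path Open Graph titles; a real site might load these from a CMS or KV store.
const OG_TITLES = new Map<string, string>([
  ["/", "Example Store"],
  ["/pricing", "Pricing | Example Store"],
]);

type OriginFetch = (request: Request) => Promise<Response>;

// Escape characters that would break out of a double-quoted HTML attribute.
function escapeAttr(value: string): string {
  return value.replace(/&/g, "&amp;").replace(/"/g, "&quot;").replace(/</g, "&lt;");
}

// Fetch the page from the origin and add an og:title tag to HTML responses.
async function handleRequest(request: Request, fetchOrigin: OriginFetch): Promise<Response> {
  const originResponse = await fetchOrigin(request);
  const contentType = originResponse.headers.get("content-type") ?? "";
  if (!contentType.includes("text/html")) {
    return originResponse; // JavaScript, CSS, images: pass through unchanged
  }
  const title = OG_TITLES.get(new URL(request.url).pathname);
  if (title === undefined) {
    return originResponse;
  }
  const tag = `<meta property="og:title" content="${escapeAttr(title)}">`;
  // A replacer function stops "$" in a title from being read as a replacement pattern.
  const html = (await originResponse.text()).replace(/<\/head>/i, (headEnd) => tag + headEnd);
  const headers = new Headers(originResponse.headers);
  headers.delete("content-length"); // the body length has changed
  headers.delete("content-encoding"); // text() returned a decoded body
  return new Response(html, { status: originResponse.status, headers });
}

// Cloudflare Workers module entry point. The cf options are Cloudflare-specific and
// ask its cache to keep the origin response for 300 seconds (an example value).
export default {
  fetch(request: Request): Promise<Response> {
    const init: RequestInit & { cf?: Record<string, unknown> } = {
      cf: { cacheEverything: true, cacheTtl: 300 },
    };
    return handleRequest(request, (req) => fetch(req, init));
  },
};
```

Every visitor, including Googlebot, receives the same modified HTML, so this approach does not serve crawlers different content from users.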


How to Implement JavaScript Polyfills for Older Browsers

Not all browsers support modern JavaScript features. If your website uses advanced JavaScript functions, older browsers may fail to render content properly.

Why This Matters for SEO

- Googlebot has rendered pages with an evergreen version of Chromium since 2019, but other crawlers and older browsers may not support modern JavaScript features.

- Users on older devices may not see key content, leading to poor user engagement and higher bounce rates.

How to Fix It with Polyfills

- Use Babel to compile modern JavaScript into older, browser-compatible versions.

- Implement polyfills to support features like fetch(), Promise, and Array.prototype.includes().

- Check the Chrome version in Googlebot's user-agent string to confirm compatibility.
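In practice, polyfills usually come from a library such as core-js, which Babel's preset-env can inject based on your browser targets. To illustrate what a polyfill does, here is a hand-written TypeScript sketch for Array.prototype.includes. The helper name arrayIncludes is illustrative, and the code installs itself only when the native method is missing.

```typescript
// Spec-style fallback for Array.prototype.includes (ES2016). It uses SameValueZero
// comparison, so NaN is found, unlike with indexOf.
function arrayIncludes(this: ArrayLike<unknown>, searchElement: unknown, fromIndex?: number): boolean {
  const list = Object(this) as ArrayLike<unknown>;
  const len = list.length >>> 0;
  if (len === 0) return false;
  let n = Number(fromIndex) || 0; // undefined or NaN become 0
  n = n < 0 ? Math.ceil(n) : Math.floor(n); // truncate toward zero
  // A negative fromIndex counts back from the end of the array.
  for (let k = n >= 0 ? n : Math.max(len + n, 0); k < len; k++) {
    const element = list[k];
    // SameValueZero: strict equality, plus NaN matches NaN.
    if (element === searchElement || (element !== element && searchElement !== searchElement)) {
      return true;
    }
  }
  return false;
}

// Install only when the browser lacks a native implementation.
if (typeof (Array.prototype as { includes?: unknown }).includes !== "function") {
  Object.defineProperty(Array.prototype, "includes", {
    value: arrayIncludes,
    writable: true,
    configurable: true,
  });
}
```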

Best For:

- Websites using React, Vue.js, or Angular

- Ensuring Googlebot can always render JavaScript correctly


Leveraging Server Logs to Analyze JavaScript SEO Issues

Server logs record every request Googlebot actually makes, which makes them one of the most reliable ways to analyze JavaScript SEO issues.

Why Server Logs Matter for JavaScript SEO

- Show how often Googlebot crawls JavaScript files.

- Reveal failed requests for JavaScript files (for example, 404 or 5xx responses) that can block rendering.

- Help diagnose crawl budget issues caused by inefficient JavaScript loading.

How to Use Server Logs for JavaScript SEO

- Identify which JavaScript resources Googlebot fetches most frequently.

- Look for crawl errors related to blocked JavaScript files.

- Monitor Google’s request frequency to detect potential indexing delays.
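A small script can handle the first pass of this analysis. The sketch below assumes access logs in the common Apache/Nginx "combined" format. It counts Googlebot requests and 4xx/5xx errors for each JavaScript file. The regular expression and function names are assumptions, so adapt them to your own log format. Matching on the user-agent string is only a rough first filter, because any client can claim to be Googlebot. Google recommends confirming genuine Googlebot traffic with a reverse DNS lookup on the IP address.

```typescript
// One parsed access-log entry.
interface LogEntry {
  ip: string;
  path: string;
  status: number;
  userAgent: string;
}

// Combined format: ip ident user [time] "METHOD path PROTOCOL" status bytes "referer" "user-agent"
const COMBINED = /^(\S+) \S+ \S+ \[[^\]]+\] "(?:[A-Z]+) (\S+) [^"]*" (\d{3}) \S+ "[^"]*" "([^"]*)"/;

function parseLine(line: string): LogEntry | null {
  const m = COMBINED.exec(line);
  if (!m) return null; // skip lines in other formats
  return { ip: m[1], path: m[2], status: Number(m[3]), userAgent: m[4] };
}

interface JsCrawlStats {
  hits: Map<string, number>; // Googlebot requests per JavaScript file
  errors: Map<string, number>; // of those, responses with status >= 400
}

function googlebotJsStats(lines: string[]): JsCrawlStats {
  const hits = new Map<string, number>();
  const errors = new Map<string, number>();
  for (const line of lines) {
    const entry = parseLine(line);
    if (!entry || !entry.userAgent.includes("Googlebot")) continue;
    const path = entry.path.split("?")[0]; // group versioned URLs like app.js?v=2
    if (!path.endsWith(".js")) continue;
    hits.set(path, (hits.get(path) ?? 0) + 1);
    if (entry.status >= 400) {
      errors.set(path, (errors.get(path) ?? 0) + 1);
    }
  }
  return { hits, errors };
}
```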


HTTP/2, Service Workers, and SEO Impact on JavaScript

HTTP/2 and service workers both change how JavaScript is delivered to the browser, which affects page speed and, in turn, SEO. Here's what you need to know:

Why HTTP/2 Helps JavaScript SEO

- Reduces load times by multiplexing many JavaScript files over a single connection instead of queuing them.

- Cuts per-request overhead (fewer connections and compressed headers), which can help Core Web Vitals.

How to Enable HTTP/2 for Better SEO

- Check if your hosting provider supports HTTP/2 (most modern servers do).

- Use preload hints to prioritize critical JavaScript files, and lazy load or defer non-critical ones.
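Here is a minimal TypeScript sketch of that split. Critical scripts are announced early through an HTTP Link preload header, and non-critical ones get the defer attribute so they do not hold up parsing. The ScriptPlan shape and file paths are hypothetical. Strictly speaking, defer postpones execution rather than lazy loading; truly on-demand code would use dynamic import().

```typescript
// Hypothetical split of a page's scripts by how urgently they are needed.
interface ScriptPlan {
  critical: string[];
  nonCritical: string[];
}

// Value for an HTTP "Link" header that asks the browser to fetch critical scripts early.
function preloadLinkHeader(plan: ScriptPlan): string {
  return plan.critical.map((src) => `<${src}>; rel=preload; as=script`).join(", ");
}

// Script tags for the page: critical scripts load as usual, while non-critical ones
// use "defer", so they download in parallel and run only after the HTML is parsed.
function scriptTags(plan: ScriptPlan): string {
  const critical = plan.critical.map((src) => `<script src="${src}"></script>`);
  const deferred = plan.nonCritical.map((src) => `<script src="${src}" defer></script>`);
  return [...critical, ...deferred].join("\n");
}

const plan: ScriptPlan = {
  critical: ["/js/app.js"],
  nonCritical: ["/js/chat-widget.js", "/js/analytics.js"],
};
console.log(preloadLinkHeader(plan));
console.log(scriptTags(plan));
```

On a Node server, you could send the header with res.setHeader("Link", preloadLinkHeader(plan)) and place the output of scriptTags(plan) in the page template.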

Service Workers and SEO Considerations

- Service workers can improve page speed on repeat visits by caching JavaScript in the browser.

- Be cautious: Googlebot may not use your service worker, so make sure all indexable content also loads without it.


Using File Versioning to Prevent Indexing of Outdated JavaScript Files

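Google's rendering service caches JavaScript files aggressively and may ignore caching headers, so it can render a page with an outdated script. This can lead to broken functionality or missing content in search results.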

The Solution: Use File Versioning

- Append version numbers to JavaScript URLs (e.g., script.js?v=2.1), or include a content hash in the file name.

- Ensure Google always fetches the latest version instead of an outdated cached file.

- Update JavaScript files dynamically without breaking SEO-critical elements.
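Bundlers such as webpack can generate content-hashed file names automatically (with the [contenthash] placeholder), and that is usually the easiest route. The sketch below shows the idea with Node's crypto module. The name changes whenever the file's contents change, so crawlers and browsers cannot keep using a stale copy under the same URL. The function names and the 8-character hash length are arbitrary choices.

```typescript
import { createHash } from "node:crypto";

// Insert a short content hash before the extension: "app.js" -> "app.<hash>.js".
// Expects a bare file name without directories.
function fingerprintedName(fileName: string, contents: string, hashLength = 8): string {
  const hash = createHash("sha256").update(contents).digest("hex").slice(0, hashLength);
  const dot = fileName.lastIndexOf(".");
  return dot === -1
    ? `${fileName}.${hash}`
    : `${fileName.slice(0, dot)}.${hash}${fileName.slice(dot)}`;
}

// Query-string style, as in script.js?v=2.1.
function versionedUrl(path: string, version: string): string {
  return `${path}?v=${encodeURIComponent(version)}`;
}
```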



Experimenting With AI and Machine Learning for JavaScript SEO

AI-powered SEO tools can automate JavaScript performance optimizations and predict indexing issues before they happen.

How AI Enhances JavaScript SEO

- AI-based site audits detect JavaScript crawlability issues.

- Predictive indexing analysis helps forecast which pages Google may struggle to index.

- Automated script optimization improves JavaScript execution time.

Best Tools for AI-Driven JavaScript SEO:

- OnCrawl – AI-powered log file analysis to detect JavaScript rendering issues.

- DeepCrawl (now Lumar) – Uses AI to predict how Googlebot handles JavaScript.

- JetOctopus – AI-based rendering reports to spot indexing delays.


Conclusion

JavaScript SEO is no longer an optional consideration—it’s a necessity for businesses that rely on JavaScript frameworks like React, Angular, and Vue.js to power their websites.

While JavaScript enables dynamic and interactive experiences, it also introduces SEO challenges that can impact indexing, rankings, and user experience.

Throughout this guide, we’ve explored how Google crawls, renders, and indexes JavaScript, the best practices for optimization, and advanced strategies that can help JavaScript-heavy websites rank competitively in search results.

FAQs

1. Why is JavaScript SEO important for search rankings?

2. How can I check if Google is indexing my JavaScript content?

- Use Google Search Console’s URL Inspection Tool to see how Googlebot views your page.

- To check if the content is indexed, run a "site:" search in Google (e.g., site:yourwebsite.com "unique text from page").

- Google has retired its cached view (cache:yourwebsite.com/page-url), so use "View crawled page" in the URL Inspection Tool to see the version Google last stored.

- Compare the raw HTML source (View Page Source) with the rendered version (Inspect Element in DevTools) to see if JavaScript content is visible.
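You can also script the raw-versus-rendered comparison. This TypeScript sketch downloads a page's HTML without running any JavaScript and checks whether a phrase is already present. If the phrase is missing, the content is injected client-side and depends on Google's rendering step. The function names are illustrative. The tag stripping is deliberately crude; for example, it does not decode HTML entities.

```typescript
// Check whether a phrase is present in HTML as served, before any JavaScript runs.
function phraseInRawHtml(html: string, phrase: string): boolean {
  const visibleText = html
    .replace(/<script[\s\S]*?<\/script>/gi, " ") // drop inline scripts and their data
    .replace(/<[^>]+>/g, " ") // drop the remaining tags
    .replace(/\s+/g, " ") // collapse whitespace
    .toLowerCase();
  return visibleText.includes(phrase.replace(/\s+/g, " ").toLowerCase());
}

// Fetch the raw HTML (no rendering) and run the check. Requires Node 18+ for fetch.
async function checkRawHtml(url: string, phrase: string): Promise<boolean> {
  const response = await fetch(url);
  return phraseInRawHtml(await response.text(), phrase);
}
```

For example, checkRawHtml("https://yourwebsite.com/page-url", "unique text from page").then(console.log) prints false when the phrase only appears after JavaScript runs.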

If Google isn’t indexing your JavaScript content, consider using pre-rendering, server-side rendering (SSR), or dynamic rendering.

3. What’s the best rendering method for JavaScript SEO?

- Server-Side Rendering (SSR) – Best for SEO as it delivers fully rendered HTML to search engines, ensuring fast indexing.

- Dynamic Rendering – A hybrid approach where Google gets a pre-rendered version while users receive the interactive JavaScript experience. Google now describes it as a workaround rather than a long-term solution.

- Client-Side Rendering (CSR) – Not recommended for SEO without optimizations, as it delays content rendering and can lead to indexing issues.

SSR, or dynamic rendering as a stopgap, is recommended for faster indexing and improved rankings on SEO-driven websites.
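The difference shows up in what the crawler receives. A client-side rendered page often ships an empty <div id="root"></div> plus a script. In the server-rendered sketch below, the content is already in the initial HTML. The Product type, the renderProductPage function, and the /app.js bundle name are hypothetical. Frameworks such as Next.js or Nuxt handle this rendering, and the later hydration, for you.

```typescript
interface Product {
  name: string;
  description: string;
  price: number;
}

// Escape text before placing it into HTML.
function escapeHtml(s: string): string {
  return s.replace(/&/g, "&amp;").replace(/</g, "&lt;").replace(/>/g, "&gt;").replace(/"/g, "&quot;");
}

// Server-side rendering: the content is in the HTML response itself, so it is
// visible without JavaScript. The deferred bundle can add interactivity afterwards.
function renderProductPage(p: Product): string {
  return [
    "<!doctype html>",
    "<html><head>",
    `<title>${escapeHtml(p.name)}</title>`,
    "</head><body>",
    '<div id="root">',
    `<h1>${escapeHtml(p.name)}</h1>`,
    `<p>${escapeHtml(p.description)}</p>`,
    `<p>$${p.price.toFixed(2)}</p>`,
    "</div>",
    '<script src="/app.js" defer></script>',
    "</body></html>",
  ].join("\n");
}
```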

4. How can I improve JavaScript SEO for Core Web Vitals?

- Reducing JavaScript execution time by minifying scripts and deferring non-essential ones.

- Implementing lazy loading for images and JavaScript-heavy elements.

- Using a Content Delivery Network (CDN) to serve JavaScript files faster.

- Enabling HTTP/2 to improve resource loading efficiency.

- Pre-rendering critical content to ensure fast Largest Contentful Paint (LCP) times.

Optimizing JavaScript for better page speed, user experience, and indexing efficiency will help boost search rankings and engagement.